Table of Contents

guest 2025-07-16

Tutorial: Hello World Module

Map of Module Files

Example Modules

Modules: Queries, Views and Reports

Module Directories Setup

Module Query Views

Module SQL Queries

Module R Reports

Module HTML and Web Parts

Modules: JavaScript Libraries

Modules: Assay Types

Tutorial: Define an Assay Type in a Module

Assay Custom Domains

Assay Custom Views

Example Assay JavaScript Objects

Assay Query Metadata

Customize Batch Save Behavior

SQL Scripts for Module-Based Assays

Transformation Scripts

Example Workflow: Develop a Transformation Script (perl)

Example Transformation Scripts (perl)

Transformation Scripts in R

Transformation Scripts in Java

Transformation Scripts for Module-based Assays

Run Properties Reference

Transformation Script Substitution Syntax

Warnings in Transformation Scripts

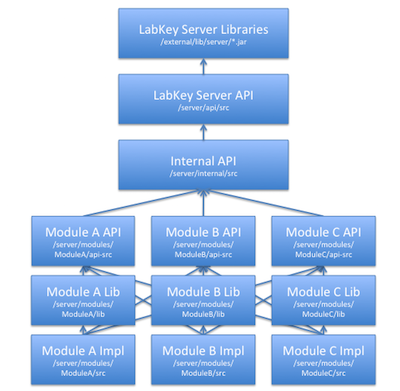

Modules: ETLs

Tutorial: Extract-Transform-Load (ETL)

ETL Tutorial: Set Up

ETL Tutorial: Run an ETL Process

ETL Tutorial: Create a New ETL Process

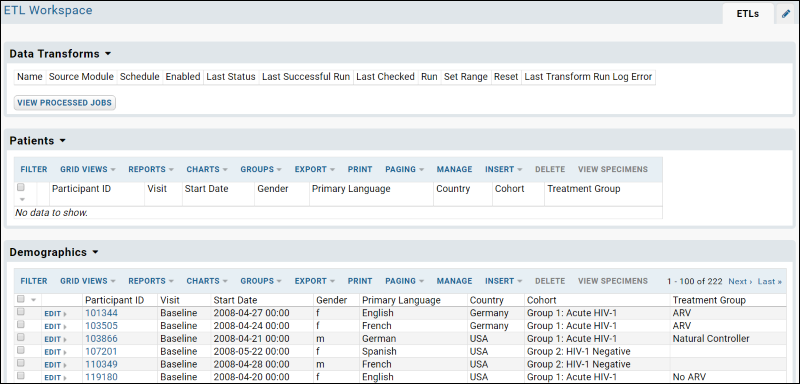

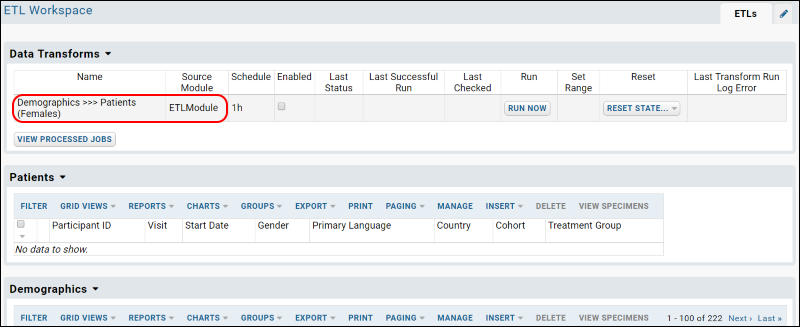

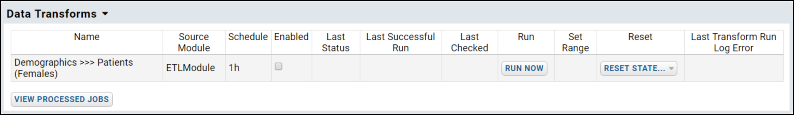

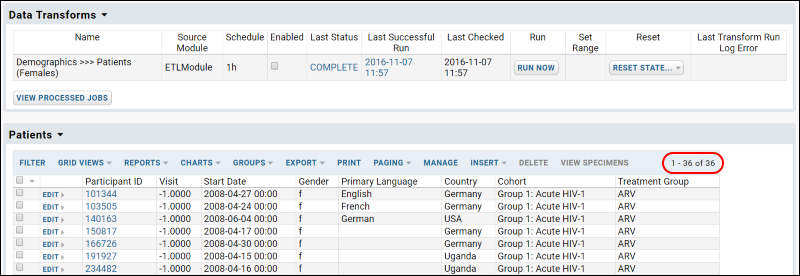

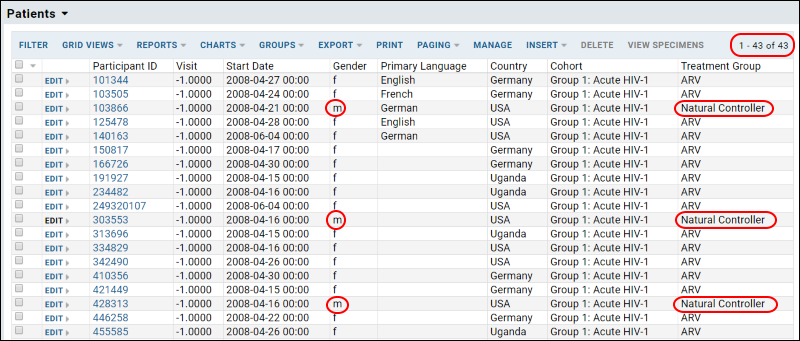

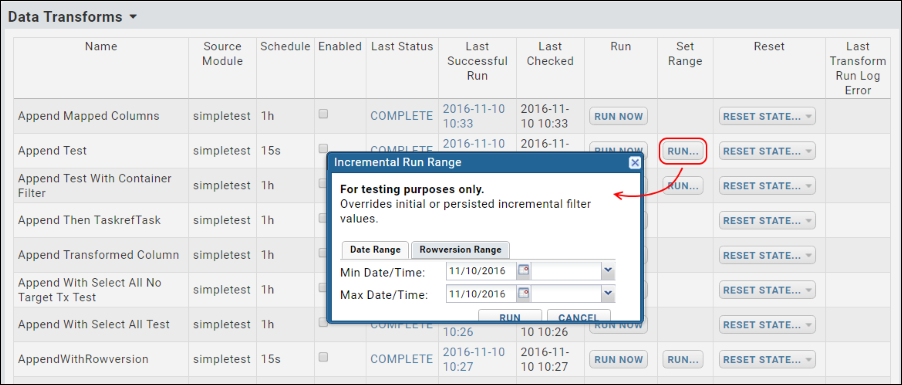

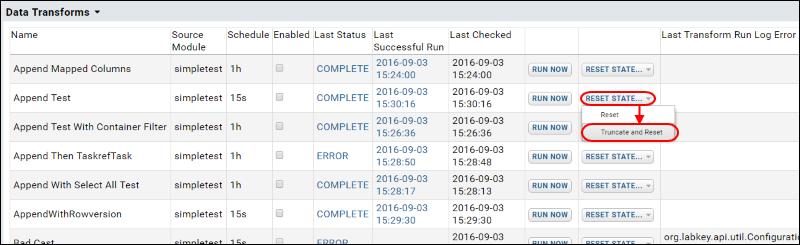

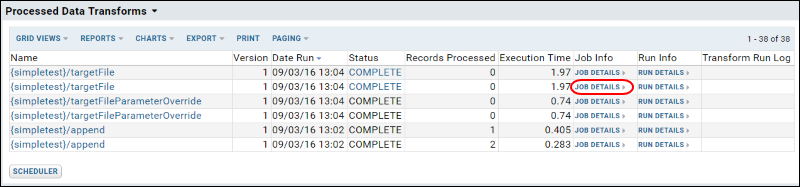

ETL: User Interface

ETL: Configuration and Schedules

ETL: Column Mapping

ETL: Queuing ETL Processes

ETL: Stored Procedures

ETL: Stored Procedures in MS SQL Server

ETL: Functions in PostgreSQL

ETL: Check For Work From a Stored Procedure

ETL: SQL Scripts

ETL: Remote Connections

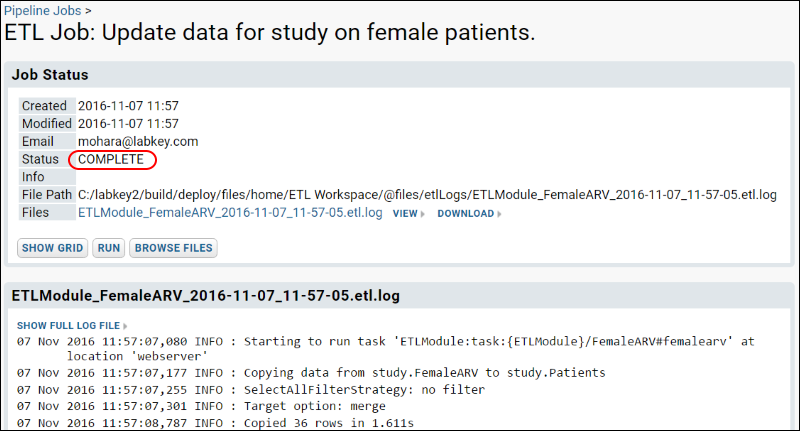

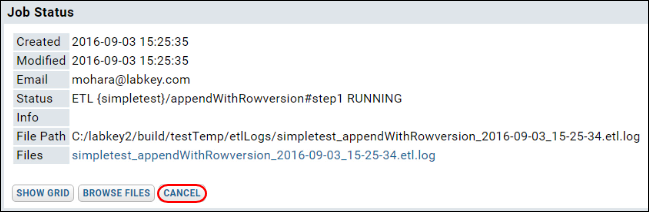

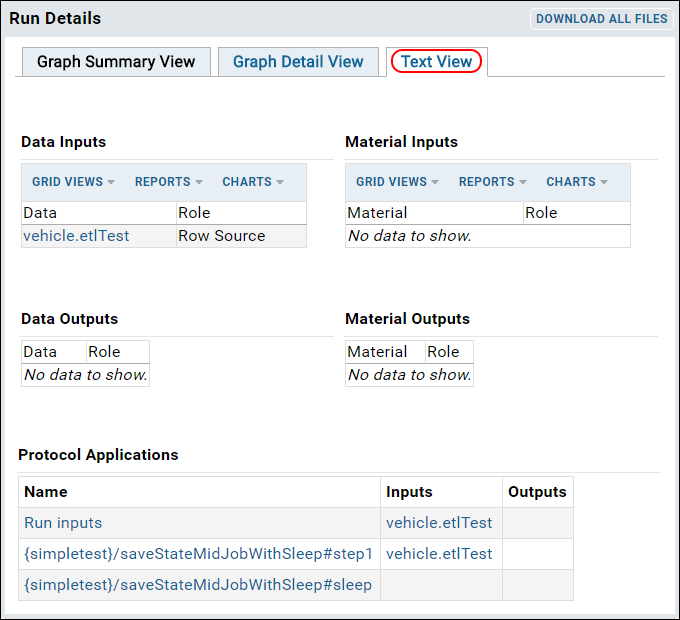

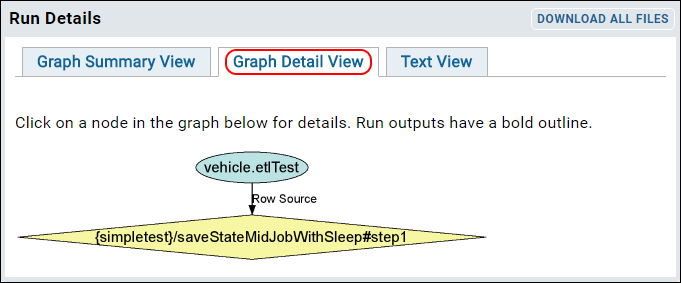

ETL: Logs and Error Handling

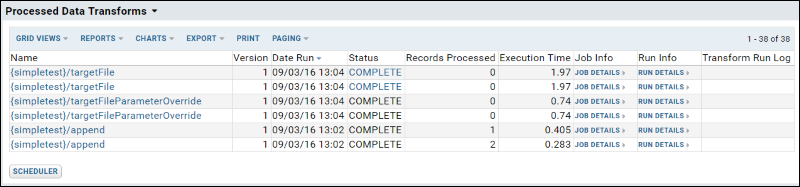

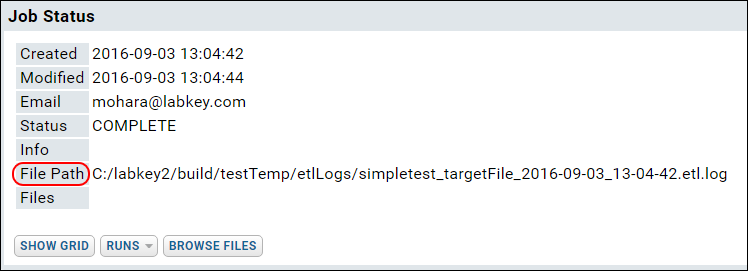

ETL: All Jobs History

ETL: Examples

ETL: Reference

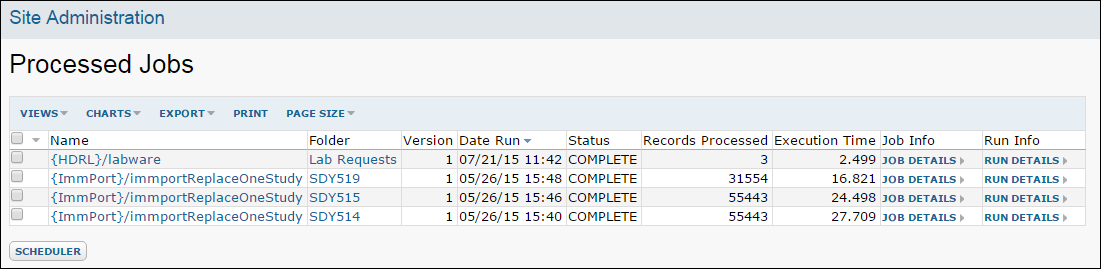

Modules: Java

Module Architecture

Getting Started with the Demo Module

Creating a New Java Module

The LabKey Server Container

Implementing Actions and Views

Implementing API Actions

Integrating with the Pipeline Module

Integrating with the Experiment Module

Using SQL in Java Modules

GWT Integration

GWT Remote Services

Java Testing Tips

HotSwapping Java classes

Deprecated Components

Modules: Folder Types

Modules: Query Metadata

Modules: Report Metadata

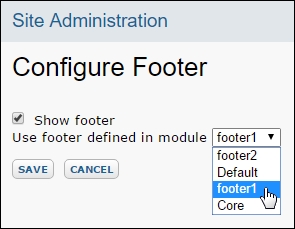

Modules: Custom Footer

Modules: SQL Scripts

Modules: Database Transition Scripts

Modules: Domain Templates

Deploy Modules to a Production Server

Upgrade Modules

Main Credits Page

Module Properties Reference

Develop Modules

Modules let you package a wide variety of resources and deploy them to LabKey Server, including R reports, SQL queries and scripts, API-driven HTML pages, CSS, JavaScript, images, custom web parts, XML assay definitions, and compiled Java code. Much module development can be done without compiling Java code, so you can deploy and test module source directly, often without restarting the server.

Module Functionality

| Functionality | Description | Docs |
|---|---|---|
| Queries, Views, and Reports | A module that includes queries, reports, and/or views directories. Create file-based SQL queries, reports, views, web parts, and HTML/JavaScript client-side applications. No Java code is required, though you can evolve your work into a Java module if needed. | Modules: Queries, Views and Reports |
| Assay | A module with an assay directory, for defining a new assay type. | Modules: Assay Types |
| Extract-Transform-Load | A module with an etls directory, for configuring data transfer and synchronization between databases. | Modules: ETLs |
| Script Pipeline | A module with a pipeline directory, for running scripts in sequence, including R scripts, JavaScript, Perl, Python, etc. | Script Pipeline: Running R and Other Scripts in Sequence |
| Java | A module with a Java src directory. Develop Java-based applications to create server-side code. | Modules: Java |

Do I Need to Compile Modules?

Modules do not need to be compiled, unless they contain Java code. Most module functionality can be accomplished without the need for Java code, including "CRUD" applications (Create-Retrieve-Update-Delete applications) that provide views and reports on data on the server, and provide some way for users to interact with the data. These applications will typically use some combination of the following client APIs: LABKEY.Query.selectRows, insertRows, updateRows, and deleteRows.
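As an illustration of the client-API style described above, the following JavaScript sketch reads rows with LABKEY.Query.selectRows, passing a config object with schemaName, queryName, columns, filterArray, success, and failure. It assumes the ms2.Peptides table used later in this topic. The `labkey` parameter and the `fakeLabkey` stand-in are hypothetical additions that let the function run outside a browser; in a module view you would pass the page's global LABKEY object instead.

```javascript
// Sketch: query a table through the LABKEY client API. `labkey` is normally the
// page's global LABKEY object; it is injected here so a stand-in can be used.
function loadHighScoringPeptides(labkey, onRows, onError) {
  labkey.Query.selectRows({
    schemaName: "ms2",
    queryName: "Peptides",
    columns: "Scan,Charge,PeptideProphet",
    // Server-side filter: PeptideProphet >= 0.9
    filterArray: [
      labkey.Filter.create("PeptideProphet", 0.9, labkey.Filter.Types.GREATER_THAN_OR_EQUAL),
    ],
    success: function (data) {
      onRows(data.rows);
    },
    failure: function (errorInfo) {
      onError(errorInfo.exception);
    },
  });
}

// Hypothetical stand-in that records the request and returns canned rows.
let lastConfig = null;
const fakeLabkey = {
  Filter: {
    Types: { GREATER_THAN_OR_EQUAL: "gte" },
    create: (column, value, type) => ({ column, value, type }),
  },
  Query: {
    selectRows: (config) => {
      lastConfig = config;
      config.success({ rows: [{ Scan: 17, Charge: 2, PeptideProphet: 0.97 }] });
    },
  },
};

let received = [];
loadHighScoringPeptides(fakeLabkey, (rows) => { received = rows; }, console.error);
console.log(received.length, lastConfig.schemaName); // 1 ms2
```

Filtering on the server through filterArray, rather than in the success callback, keeps the amount of data sent to the browser small.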

Also note that client-side APIs are generally stable across releases, while server-side APIs are not guaranteed to be stable and may change as the LabKey Server code base evolves. Modules built on the server API may therefore require changes to stay up to date.

More advanced client functionality, such as defining new assay types, working with the security API, and manipulating studies, can also be accomplished with a simple module without Java.

To create your own server actions (that is, code that runs on the server rather than in the client), Java is generally required. Trigger scripts, which run on the server, are an exception: they are a powerful feature, sufficient in many cases to avoid the need for Java code. Java modules require a build/compile step, but modules without Java code can be deployed to the server without compiling.

Module Development Setup

Use the following topic to set up a development machine for building LabKey modules: Set up a Development Machine

Topics

The topics below show you how to create a module, how to develop the various resources within the module, and how to package and deploy it to LabKey Server.

- Map of Module Files - An overview of the features and file types you can include in modules.

- Example Modules - Representative example modules from the LabKey Server open source project.

- Modules: Queries, Views and Reports - Build a more complex file-based module.

- Modules: Assay Types - Module-based custom assay types that assay designs can be based on.

- Modules: ETLs - Module-based Extract-Transform-Load functionality.

- Modules: Java - Including Java in modules.

- Modules: Folder Types - Module-based custom folder types.

- Modules: SQL Scripts - Running SQL scripts on module load.

- Deploy Modules to a Production Server - Deploying modules for production.

- Upgrade Modules - How to handle module upgrades.

- MiniProfiler - A developer tool that shows which queries were run on a page, with performance information for each query.

- Main Credits Page - Include a credits page.

- Module Properties Reference - Reference for module.properties/module.xml files.

Tutorial: Hello World Module

LabKey Server's functionality is packaged inside of modules. For example, the query module handles the communication with the databases, the wiki module renders Wiki/HTML pages in the browser, the assay module captures and manages assay data, etc.

You can extend the functionality of the server by adding your own module. Here is a partial list of things you can do with a module:

- Create a new assay type to capture data from a new instrument.

- Add a new set of tables and relationships (that is, a schema) to the database by running a SQL script.

- Develop file-based SQL queries, R reports, and HTML views.

- Build a sequence of scripts that process data and finally insert it into the database.

- Define novel folder types and web part layouts.

- Set up Extract-Transform-Load (ETL) processes to move data between databases.

The following tutorial shows you how to create your own "Hello World" module and deploy it to a local testing/development server.

Set Up a Development Machine

In this step you will set up a test/development machine that builds LabKey Server from its source code.

If you already have a working build of the server, you can skip this step.

- If necessary, uninstall any instances of LabKey Server that were installed using the Windows Graphical Installer, as an installer-based server and a source-based server cannot run together simultaneously on the same machine. Use the Windows uninstaller at: Control Panel > Uninstall a program. If you see LabKey Server in the list of programs, uninstall it.

- Download the server source code and complete an initial build of the server by completing the steps in the following topic: Set up a Development Machine

- Before you proceed, build and deploy the server. Confirm that the server is running by visiting the URL http://localhost:8080/labkey/project/home/begin.view?

- For the purposes of this tutorial, we will call the location where you have synced the server source code LABKEY_SRC. On Windows, a typical location for LABKEY_SRC would be C:/dev/trunk

Module Properties

In this step you create the main directory for your module and set basic module properties.

- Go to LABKEY_SRC, the directory where you synced the server source code, and locate the directory externalModules.

- Inside LABKEY_SRC/externalModules, create a directory named "helloworld".

- Inside the helloworld directory, create a file named "module.properties".

- Add the following property/value pairs to module.properties. This is a minimal list of properties needed for deployment and testing. You can add a more complete list of properties later on, including your name, links to documentation, required server and database versions, etc. For a complete list of available properties see Module Properties Reference.

Name: HelloWorld

ModuleClass: org.labkey.api.module.SimpleModule

Version: 1.0
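If you script module setup, a small check can catch a missing property before you build. The following JavaScript sketch assumes one `Key: Value` (or `Key=Value`) pair per line and treats Name, ModuleClass, and Version as required only because they are the minimal set listed above. It is a hypothetical helper, not LabKey's own properties parser.

```javascript
// Properties this tutorial lists as the minimum for deployment.
const REQUIRED_KEYS = ["Name", "ModuleClass", "Version"];

// Parse "Key: Value" / "Key=Value" lines into an object. Blank lines and lines
// starting with '#' or '!' (comment markers in .properties files) are skipped.
function parseModuleProperties(text) {
  const props = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#") || line.startsWith("!")) continue;
    const sep = line.search(/[:=]/); // first ':' or '=' separates key from value
    if (sep < 0) continue;           // no separator: ignored in this sketch
    props[line.slice(0, sep).trim()] = line.slice(sep + 1).trim();
  }
  return props;
}

// Return the required keys that are absent from a parsed properties object.
function missingRequiredKeys(props) {
  return REQUIRED_KEYS.filter((key) => !Object.prototype.hasOwnProperty.call(props, key));
}

const props = parseModuleProperties(
  "Name: HelloWorld\nModuleClass: org.labkey.api.module.SimpleModule\nVersion: 1.0\n"
);
console.log(props.ModuleClass);                                        // org.labkey.api.module.SimpleModule
console.log(missingRequiredKeys(props));                                // []
console.log(missingRequiredKeys(parseModuleProperties("Name: Demo"))); // [ 'ModuleClass', 'Version' ]
```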

Build and Deploy the Module

- Open the file LABKEY_SRC/server/standard.modules (This file controls which modules are included in the build.)

- Add this line to the file:

externalModules/helloworld

- Build the server.

- Open a command window.

- Go to directory LABKEY_SRC/server

- Call the ant task:

ant build

- Start the server, either in IntelliJ by clicking the "Debug" button, or by running the Tomcat startup script for your operating system (located in TOMCAT_HOME/bin).
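If you automate the edit to standard.modules, making it idempotent keeps repeated runs from adding duplicate entries. This JavaScript sketch assumes standard.modules lists one module path per line. `addModuleEntry` is a hypothetical helper and is not part of the LabKey build.

```javascript
// Append `entry` to the text of standard.modules unless it is already listed.
// Assumes one module path per line; surrounding whitespace is ignored when comparing.
function addModuleEntry(fileText, entry) {
  const listed = fileText.split(/\r?\n/).map((line) => line.trim());
  if (listed.includes(entry)) return fileText; // already present: return unchanged
  const needsNewline = fileText.length > 0 && !fileText.endsWith("\n");
  return fileText + (needsNewline ? "\n" : "") + entry + "\n";
}

// Example: the second call is a no-op.
let modulesText = "server/modules/wiki\n"; // hypothetical existing contents
modulesText = addModuleEntry(modulesText, "externalModules/helloworld");
modulesText = addModuleEntry(modulesText, "externalModules/helloworld");
console.log(JSON.stringify(modulesText)); // "server/modules/wiki\nexternalModules/helloworld\n"

// Against the real file you might do (with const fs = require("fs")):
// fs.writeFileSync(file, addModuleEntry(fs.readFileSync(file, "utf8"), "externalModules/helloworld"));
```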

Confirm the Module Has Been Deployed

- In a browser go to: http://localhost:8080/labkey/project/home/begin.view?

- Sign in.

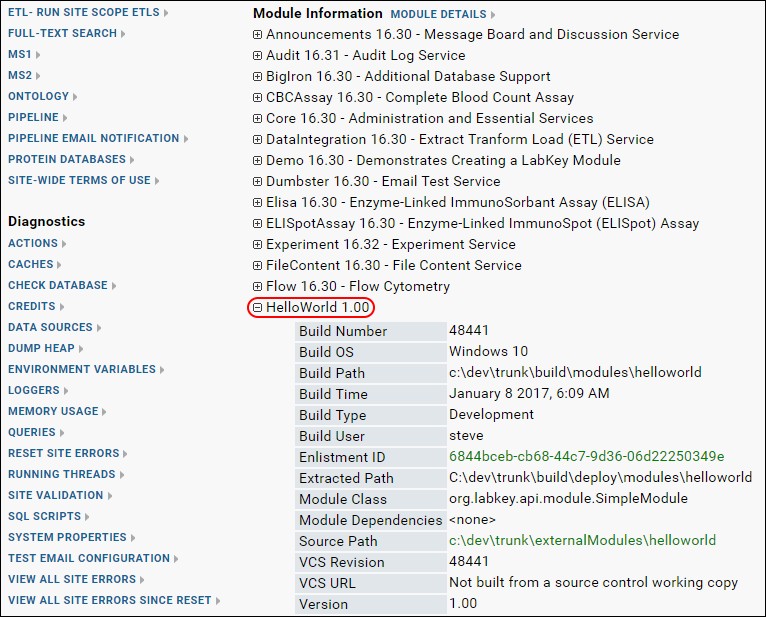

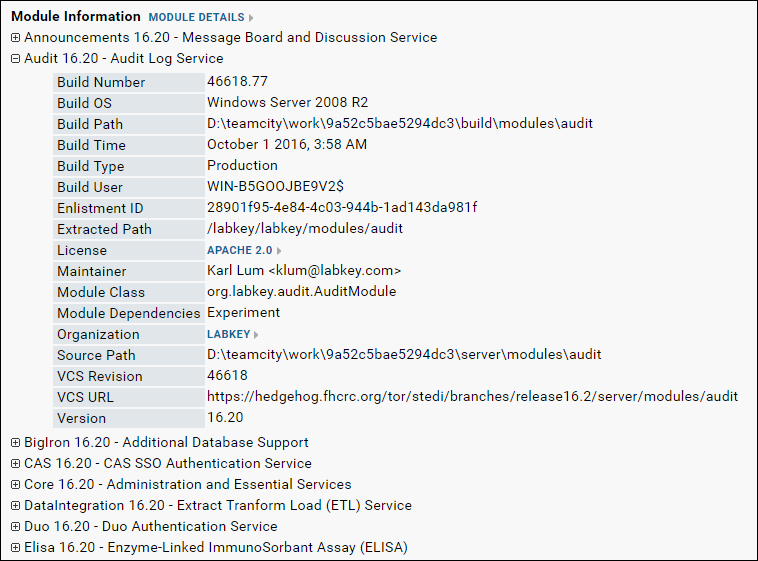

- Confirm that HelloWorld has been deployed to the server by going to Admin > Site > Admin Console. Scroll down to Module Information (in the right hand column). Open the node HelloWorld. Notice the module properties you specified are displayed here: Name: HelloWorld, Version: 1.0, etc.

Add a Default Page

Each module has a default home page called "begin.view". In this step we will add this page to our module. The server interprets your module resources based on a fixed directory structure. By reading the directory structure and the files inside, the server knows their intended functionality. For example, if the module contains a directory named "assay", this tells the server to look for XML files that define a new assay type. Below, we will create a "views" directory, telling the server to look for HTML and XML files that define new pages and web parts.

- Inside helloworld, create a directory named "resources".

- Inside resources, create a directory named "views".

- Inside views, create a file named "begin.html". (This is the default page for any module.)

helloworld
│   module.properties
└───resources
    └───views
            begin.html

- Open begin.html in a text editor, and add the following HTML code:

<p>Hello, World!</p>
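The directory layout above can also be generated by a script. This Node.js sketch writes the two Hello World files into a temporary directory; in practice you would point it at LABKEY_SRC/externalModules/helloworld. The helper names `helloWorldFiles` and `writeTree` are hypothetical.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// The Hello World module's files as a map of relative path -> contents.
function helloWorldFiles() {
  return {
    "module.properties":
      "Name: HelloWorld\nModuleClass: org.labkey.api.module.SimpleModule\nVersion: 1.0\n",
    "resources/views/begin.html": "<p>Hello, World!</p>\n",
  };
}

// Write each file under `root`, creating parent directories as needed.
function writeTree(root, files) {
  for (const [relPath, contents] of Object.entries(files)) {
    const target = path.join(root, ...relPath.split("/"));
    fs.mkdirSync(path.dirname(target), { recursive: true });
    fs.writeFileSync(target, contents);
  }
}

// Usage: scaffold into a fresh temporary directory.
const root = path.join(fs.mkdtempSync(path.join(os.tmpdir(), "lk-")), "helloworld");
writeTree(root, helloWorldFiles());
console.log(fs.existsSync(path.join(root, "resources", "views", "begin.html"))); // true
```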

Test the Module

- Build the server by calling 'ant build'.

- Wait for the server to redeploy.

- Enable the module in some test folder:

- Navigate to some test folder on your server.

- Go to Admin > Folder > Management and click the Folder Type tab.

- In the list of modules on the right, place a checkmark next to HelloWorld.

- Click Update Folder.

- Confirm that the view has been deployed to the server by going to Admin > Go to Module > HelloWorld.

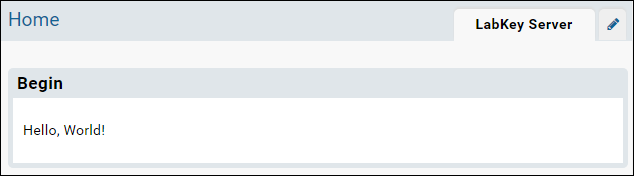

- The following view will be displayed:

Modify the View with Metadata

You can control how a view is displayed by using a metadata file. For example, you can define the title, framing, and required permissions.

- Add a file named "begin.view.xml" to the views directory. This file has the same base name (minus the file extension) as begin.html, which tells the server to apply the metadata in begin.view.xml to begin.html.

helloworld
│   module.properties
└───resources
    └───views
            begin.html
            begin.view.xml

- Add the following XML to begin.view.xml. This tells the server to: display the title 'Begin View', display the HTML without any framing, and that Reader permission is required to view it.

<view xmlns="http://labkey.org/data/xml/view"

title="Begin View"

frame="none">

<permissions>

<permission name="read"/>

</permissions>

</view>

- Refresh your browser to see the result. (You do not need to rebuild or restart the server.)

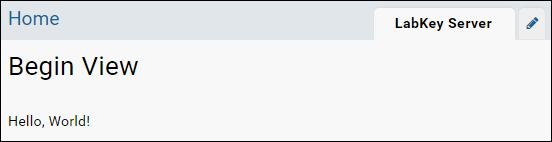

- The begin view now looks like the following:

- Experiment with other possible values for the 'frame' attribute:

- portal (If no value is provided, the default is 'portal'.)

- title

- dialog

- div

- left_navigation

- none

- When you are ready to move to the next step, set the 'frame' attribute back to 'portal'.
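To experiment with frame values without hand-editing XML, you could generate begin.view.xml. This JavaScript sketch builds the same structure as the file above and rejects frame values outside the list in this step. The list and the helper `viewXml` are assumptions taken from this topic, not a complete reading of view.xsd.

```javascript
// Frame values listed in this step; "portal" is described as the default.
const FRAME_VALUES = ["portal", "title", "dialog", "div", "left_navigation", "none"];

// Escape characters that would break a double-quoted XML attribute.
function escapeAttr(value) {
  return String(value).replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Build a .view.xml document with a title, a frame style, and optional permissions.
function viewXml({ title, frame = "portal", permissions = [] }) {
  if (!FRAME_VALUES.includes(frame)) {
    throw new Error(`Unknown frame value: ${frame}`);
  }
  const lines = [
    '<view xmlns="http://labkey.org/data/xml/view"',
    `      title="${escapeAttr(title)}"`,
    `      frame="${frame}">`,
  ];
  if (permissions.length > 0) {
    lines.push("  <permissions>");
    for (const name of permissions) {
      lines.push(`    <permission name="${escapeAttr(name)}"/>`);
    }
    lines.push("  </permissions>");
  }
  lines.push("</view>");
  return lines.join("\n") + "\n";
}

console.log(viewXml({ title: "Begin View", frame: "none", permissions: ["read"] }));
```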

Hello World Web Part

You can also package the view as a web part using another metadata file.

- In the helloworld/resources/views directory, add a file named "begin.webpart.xml". This tells the server to surface the view inside a web part. Your module now has the following structure:

helloworld
│   module.properties
└───resources
    └───views
            begin.html
            begin.view.xml
            begin.webpart.xml

- Paste the following XML into begin.webpart.xml:

<webpart xmlns="http://labkey.org/data/xml/webpart"

title="Hello World Web Part">

<view name="begin"/>

</webpart>
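Because the web part refers to a view by its base name, a mismatch between `<view name>` and the HTML file is an easy mistake. This JavaScript sketch generates begin.webpart.xml and checks that a matching `<name>.html` exists in the views directory; that check is an assumption about how the reference resolves, and `webpartXml` is a hypothetical helper.

```javascript
// Escape characters that would break a double-quoted XML attribute.
function escapeAttr(value) {
  return String(value).replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Build a .webpart.xml document that points at an HTML view by base name.
// `viewFiles` lists the file names in the views directory; the check assumes
// <view name="X"/> is satisfied by a file named X.html.
function webpartXml(title, viewName, viewFiles) {
  if (!viewFiles.includes(`${viewName}.html`)) {
    throw new Error(`No ${viewName}.html in views directory for web part "${title}"`);
  }
  return [
    '<webpart xmlns="http://labkey.org/data/xml/webpart"',
    `         title="${escapeAttr(title)}">`,
    `  <view name="${escapeAttr(viewName)}"/>`,
    "</webpart>",
  ].join("\n") + "\n";
}

const viewFiles = ["begin.html", "begin.view.xml", "begin.webpart.xml"];
console.log(webpartXml("Hello World Web Part", "begin", viewFiles));
```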

- Return to your test folder using the hover menu in the upper left.

- In your test folder, click the dropdown <Select Web Part>.

- Select the web part Hello World Web Part and click Add.

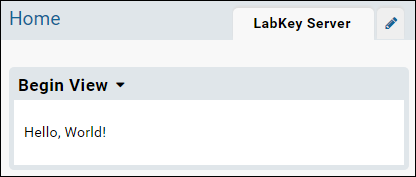

- The following web part will be added to the page:

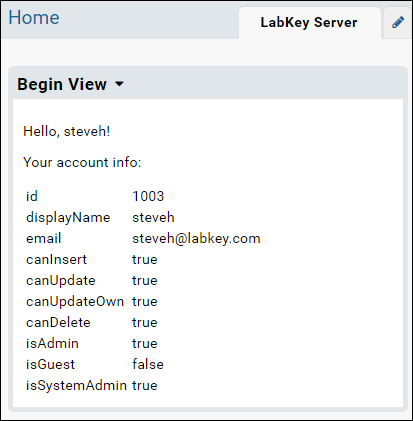

Hello User View

The final step provides a more interesting view, which uses the JavaScript API to retrieve information about the current user.

- Open begin.html and replace the HTML with the following:

<p>Hello, <script>

document.write(LABKEY.Security.currentUser.displayName);

</script>!</p>

<p>Your account info: </p>

<table>

<tr><td>id</td><td><script>document.write(LABKEY.Security.currentUser.id); </script></td></tr>

<tr><td>displayName</td><td><script>document.write(LABKEY.Security.currentUser.displayName); </script></td></tr>

<tr><td>email</td><td><script>document.write(LABKEY.Security.currentUser.email); </script></td></tr>

<tr><td>canInsert</td><td><script>document.write(LABKEY.Security.currentUser.canInsert); </script></td></tr>

<tr><td>canUpdate</td><td><script>document.write(LABKEY.Security.currentUser.canUpdate); </script></td></tr>

<tr><td>canUpdateOwn</td><td><script>document.write(LABKEY.Security.currentUser.canUpdateOwn); </script></td></tr>

<tr><td>canDelete</td><td><script>document.write(LABKEY.Security.currentUser.canDelete); </script></td></tr>

<tr><td>isAdmin</td><td><script>document.write(LABKEY.Security.currentUser.isAdmin); </script></td></tr>

<tr><td>isGuest</td><td><script>document.write(LABKEY.Security.currentUser.isGuest); </script></td></tr>

<tr><td>isSystemAdmin</td><td><script>document.write(LABKEY.Security.currentUser.isSystemAdmin); </script></td></tr>

</table>

- Refresh the browser to see the changes. (You can edit begin.html directly in the module; the server picks up the changes without a rebuild or restart.)

- Once you've refreshed the browser, the web part will display the following.
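The example above uses document.write, which is generally only safe while a page is still being parsed. As an alternative sketch, the following JavaScript builds the same table as an HTML string with the values escaped, so it can be inserted into an existing element. It assumes the object passed in has the same properties as LABKEY.Security.currentUser in the example; the element id `user-info` and the helper names are hypothetical.

```javascript
// Properties shown in the table above, in the same order.
const USER_FIELDS = [
  "id", "displayName", "email", "canInsert", "canUpdate",
  "canUpdateOwn", "canDelete", "isAdmin", "isGuest", "isSystemAdmin",
];

// Escape text for safe insertion into HTML element content.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the account-info table as one HTML string.
function userInfoTableHtml(user) {
  const rows = USER_FIELDS.map(
    (field) => `<tr><td>${field}</td><td>${escapeHtml(user[field])}</td></tr>`
  );
  return "<table>\n" + rows.join("\n") + "\n</table>";
}

// In begin.html you might use it like this:
//   <div id="user-info"></div>
//   <script>
//     document.getElementById("user-info").innerHTML =
//         userInfoTableHtml(LABKEY.Security.currentUser);
//   </script>

// Stand-in user object for running outside the browser:
const sampleUser = {
  id: 1001, displayName: "Ann <Lab>", email: "ann@example.com",
  canInsert: true, canUpdate: true, canUpdateOwn: true, canDelete: false,
  isAdmin: false, isGuest: false, isSystemAdmin: false,
};
console.log(userInfoTableHtml(sampleUser));
```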

Make a .module File

You can distribute and deploy a module to a production server by making a helloworld.module file (a renamed .zip file).

- In anticipation of deploying the module on a production server, add the property 'BuildType: Production' to the module.properties file:

Name: HelloWorld

ModuleClass: org.labkey.api.module.SimpleModule

Version: 1.0

BuildType: Production

- Then build the module:

ant build

- The build process creates a helloworld.module file at:

LABKEY_SRC/build/deploy/modules/helloworld.module

This file can be deployed by copying it to another server's externalModules directory. When the server detects changes in this directory, it automatically unzips the .module file and deploys it. You may need to restart the server to fully deploy the module.


Map of Module Files

Module Directories and Files

The following directory structure follows the pattern for modules as they are checked into source control. The structure of the module as deployed to the server is somewhat different, for details see below and the topic Module Properties Reference. If your module contains Java code or Java Server Pages (JSPs), you will need to compile it before it can be deployed.

Items shown in lowercase are literal values that should be preserved in the directory structure; items shown in UPPERCASE should be replaced with values that reflect the nature of your project.

MODULE_NAME
│   module.properties
│   module.xml
└───resources
    ├───assay
    ├───config
    │       module.xml
    ├───credits
    ├───domain-templates
    ├───etls
    ├───folderTypes
    ├───olap
    ├───pipeline
    ├───queries
    │   └───SCHEMA_NAME
    │       │   QUERY_NAME.js
    │       │   QUERY_NAME.query.xml
    │       │   QUERY_NAME.sql
    │       └───QUERY_NAME
    │               VIEW_NAME.qview.xml
    ├───reports
    │   └───schemas
    │       └───SCHEMA_NAME
    │           └───QUERY_NAME
    │                   MyRScript.r
    │                   MyRScript.report.xml
    │                   MyRScript.rhtml
    │                   MyRScript.rmd
    ├───schemas
    │   │   SCHEMA_NAME.xml
    │   └───dbscripts
    │       ├───postgresql
    │       │       SCHEMA_NAME-X.XX-Y.YY.sql
    │       └───sqlserver
    │               SCHEMA_NAME-X.XX-Y.YY.sql
    ├───scripts
    ├───views
    │       VIEW_NAME.html
    │       VIEW_NAME.view.xml
    │       TITLE.webpart.xml
    └───web
        └───MODULE_NAME
                SomeImage.jpg
                somelib.lib.xml
                SomeScript.js

Module Layout - As Source

If you are developing your module inside the LabKey Server source, use the following layout. The standard build targets will automatically assemble the directories for deployment. In particular, the standard build target makes the following changes to the module layout:

- Moves the contents of /resources one level up into /mymodule.

- Uses module.properties to create the file config/module.xml via string replacement into an XML template file.

- Compiles the Java /src dir into the /lib directory.

mymodule

├───module.properties

├───resources

│ ├───assay

│ ├───etls

│ ├───folderTypes

│ ├───queries

│ ├───reports

│ ├───schemas

│ ├───views

│ └───web

└───src (for modules with Java code)

Module Layout - As Deployed

The standard build targets transform the source directory structure above into the form below for deployment to Tomcat.

mymodule

├───assay

├───config

│ └───module.xml

├───etls

├───folderTypes

├───lib (holds compiled Java code)

├───queries

├───reports

├───schemas

├───views

└───web
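The build's path changes described above can be summarized as a mapping from source paths to deployed paths. This JavaScript sketch encodes only the three rules listed in this topic (move resources/ up one level, generate config/module.xml from module.properties, compile src/ into lib/). It is an illustration, not the actual build logic, and it does not model the names of compiled artifacts.

```javascript
// Map a path in the module's source tree to where it ends up after the build.
// Returns null for Java sources, which are compiled into lib/ rather than copied.
function deployedPath(sourcePath) {
  const parts = sourcePath.split("/");
  if (sourcePath === "module.properties") {
    return "config/module.xml"; // generated from module.properties via a template
  }
  if (parts[0] === "resources") {
    return parts.slice(1).join("/"); // contents of resources/ move up one level
  }
  if (parts[0] === "src") {
    return null; // compiled into lib/
  }
  return sourcePath; // anything else is left unchanged in this sketch
}

for (const p of ["module.properties", "resources/views/begin.html", "src/org/example/Foo.java"]) {
  console.log(p, "->", deployedPath(p));
}
```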


Example Modules

To acquire the source code for these modules, enlist in the LabKey Server open source project: Enlisting in the Version Control Project

| Module Location | Description / Highlights |

|---|---|

| server/customModules | This directory contains numerous client modules, in most cases Java modules. |

| server/modules | The core modules for LabKey Server are located here, containing the core server action code (written in Java). |

| server/test | The test module runs basic tests on the server. Contains many basic examples to clone from. |

| externalModules | Other client modules. |

Other Resources

- Creating a New Java Module - Using an Ant target, create a template Java module that you can build out from.

- Getting Started with the Demo Module - A sample module demonstrates Java module development.

- Trigger Scripts - Module-based trigger scripts, schemas created by SQL scripts.

- Community Modules - Descriptions of the modules in the standard distribution of LabKey Server.

Modules: Queries, Views and Reports

The Scenario

Suppose that you want to present a series of R reports, database queries, and HTML views. The end-goal is to deliver these to a client as a unit that can be easily added to their existing LabKey Server installation. Once added, end-users should not be able to modify the queries or reports, ensuring that they keep running as expected. The steps below show how to fulfill these requirements using a file-based module.

Steps:

- Module Directories Setup

- Module Query Views

- Module SQL Queries

- Module R Reports

- Module HTML and Web Parts

Use the Module on a Production Server

This tutorial is designed for developers who build LabKey Server from source. Even if you are not a developer and do not build the server from source, you can get a sense of how modules work by installing the module that is the final product of this tutorial. To install the module, download reportDemo.module and copy the file into the directory LABKEY_HOME\externalModules (on a Windows machine this directory is typically located at C:\Program Files (x86)\LabKey Server\externalModules). The server will detect the .module file and unzip it, creating a directory called reportDemo, which is deployed to the server. Look inside reportDemo to see the resources that have been deployed. Read through the steps of the tutorial to see how these resources are surfaced in the user interface.


Module Directories Setup

This module uses three resource directories:

- queries - Holds SQL queries and views.

- reports - Holds R reports.

- views - Holds user interface files.

Set Up a Dev Machine

Complete the topics below. This will set up a machine that can build LabKey Server (and the proteomics tools) from source.

Install Sample Data

- Install the Proteomics sample data by following the instructions in these topics:

Create Directories

- Go to the externalModules/ directory, and create the following directory structure and module.properties file:

reportDemo
│   module.properties
└───resources
    ├───queries
    ├───reports
    └───views

Add the following contents to module.properties:

ModuleClass: org.labkey.api.module.SimpleModule

Name: ReportDemo

Build the Module

- Open the file LABKEY_SRC/server/standard.modules and add the following line:

externalModules/reportDemo

- In a command shell, go to the 'server' directory, for example, 'cd C:\dev\labkey-src\trunk\server'.

- Call 'ant build' to build the module.

- Restart the server to deploy the module.

Enable Your Module in a Folder

To use a module, enable it in a folder.

- Go to the LabKey Server folder where you want to add the module functionality.

- Select Admin -> Folder -> Management -> Folder Type tab.

- Under the list of Modules click on the check box next to ReportDemo to activate it in the current folder.


Module Query Views

A module's queries directory can hold the following resources:

- SQL queries on the database (.sql files)

- Metadata on the above queries (.query.xml files).

- Named views on pre-existing queries (.qview.xml files)

- Trigger scripts attached to a query (.js files). These scripts run whenever there is an event (insert, update, etc.) on the underlying table.

If you want to create a default view that overrides the system-generated one, name the file just .qview.xml, with nothing before the extension. A file named default.qview.xml creates an additional view called "default" but does not override the existing default view.

Create an XML-based Query View

- Add two directories (ms2 and Peptides) and a file (High Prob Matches.qview.xml), as shown below.

- The directory structure tells LabKey Server that the view is in the "ms2" schema and on the "Peptides" table.

reportDemo
│   module.properties
└───resources
    ├───queries
    │   └───ms2
    │       └───Peptides
    │               High Prob Matches.qview.xml
    ├───reports
    └───views
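The location of a .qview.xml file determines which schema and query it applies to, and its base name becomes the view name. This JavaScript sketch makes that mapping explicit, including the rule above that a file named just .qview.xml overrides the default view while default.qview.xml does not. It assumes paths relative to the resources directory; `describeQviewPath` is a hypothetical helper.

```javascript
// Describe a .qview.xml file from its path relative to the module's resources
// directory, expected as queries/SCHEMA_NAME/QUERY_NAME/VIEW_NAME.qview.xml.
function describeQviewPath(relPath) {
  const suffix = ".qview.xml";
  const parts = relPath.split("/");
  if (parts.length !== 4 || parts[0] !== "queries" || !parts[3].endsWith(suffix)) {
    throw new Error(`Not a queries/SCHEMA/QUERY/VIEW.qview.xml path: ${relPath}`);
  }
  const viewName = parts[3].slice(0, -suffix.length);
  return {
    schemaName: parts[1],
    queryName: parts[2],
    viewName: viewName,                // "" when the file is named just ".qview.xml"
    overridesDefault: viewName === "", // only the unnamed file replaces the default view
  };
}

for (const p of [
  "queries/ms2/Peptides/High Prob Matches.qview.xml",
  "queries/ms2/Peptides/.qview.xml",
  "queries/ms2/Peptides/default.qview.xml",
]) {
  console.log(describeQviewPath(p));
}
```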


The view will display peptides with high Peptide Prophet scores (greater than or equal to 0.9).

- Save High Prob Matches.qview.xml with the following content:

<customView xmlns="http://labkey.org/data/xml/queryCustomView">

<columns>

<column name="Scan"/>

<column name="Charge"/>

<column name="PeptideProphet"/>

<column name="Fraction/FractionName"/>

</columns>

<filters>

<filter column="PeptideProphet" operator="gte" value="0.9"/>

</filters>

<sorts>

<sort column="PeptideProphet" descending="true"/>

</sorts>

</customView>

- The root element of the qview.xml file must be <customView> and you should use the namespace indicated.

- <columns> specifies which columns are displayed. Lookup columns can be included (e.g., "Fraction/FractionName").

- <filters> may contain any number of filter definitions. (In this example, we filter for rows where PeptideProphet >= 0.9.)

- Sorts in the <sorts> section are applied in the order they appear. In this example, we sort descending by the PeptideProphet column. To sort ascending, omit the descending attribute.
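To make the filter and sort semantics concrete, this JavaScript sketch applies a view definition like the one above to in-memory rows: keep rows matching every filter, sort by each sort in order, then keep only the listed columns. It assumes numeric filter values and supports just a few operator names (eq, gt, gte, lt, lte), assumed to match LabKey's; lookup columns such as Fraction/FractionName are treated as plain keys. It is an illustration, not how the server evaluates views.

```javascript
// Comparison operators supported by this sketch (names assumed to match LabKey's).
const OPERATORS = {
  eq: (a, b) => a === b,
  gt: (a, b) => a > b,
  gte: (a, b) => a >= b,
  lt: (a, b) => a < b,
  lte: (a, b) => a <= b,
};

// Apply a customView-like definition to an array of row objects.
function applyView(rows, view) {
  // 1. Keep rows that satisfy every filter (filter values are parsed as numbers).
  const kept = rows.filter((row) =>
    view.filters.every((f) => {
      const test = OPERATORS[f.operator];
      if (!test) throw new Error(`Unsupported operator: ${f.operator}`);
      return test(row[f.column], Number(f.value));
    })
  );
  // 2. Sort; earlier entries in view.sorts take priority over later ones.
  kept.sort((a, b) => {
    for (const s of view.sorts) {
      if (a[s.column] < b[s.column]) return s.descending ? 1 : -1;
      if (a[s.column] > b[s.column]) return s.descending ? -1 : 1;
    }
    return 0;
  });
  // 3. Project onto the listed columns, in order.
  return kept.map((row) => Object.fromEntries(view.columns.map((c) => [c, row[c]])));
}

// The High Prob Matches definition expressed as data.
const highProbMatches = {
  columns: ["Scan", "Charge", "PeptideProphet", "Fraction/FractionName"],
  filters: [{ column: "PeptideProphet", operator: "gte", value: "0.9" }],
  sorts: [{ column: "PeptideProphet", descending: true }],
};

// Hypothetical sample rows.
const sampleRows = [
  { Scan: 1, Charge: 2, PeptideProphet: 0.95, "Fraction/FractionName": "F1", Extra: "x" },
  { Scan: 2, Charge: 3, PeptideProphet: 0.5, "Fraction/FractionName": "F1" },
  { Scan: 3, Charge: 2, PeptideProphet: 0.99, "Fraction/FractionName": "F2" },
];
console.log(applyView(sampleRows, highProbMatches).map((r) => r.Scan)); // [ 3, 1 ]
```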

See the View

To see the view on the ms2.Peptides table:

- Build and restart the server.

- Go to the Peptides table and click Grid Views -- the view High Prob Matches has been added to the list. (Admin > Developer Links > Schema Browser. Open ms2, scroll down to Peptides. Select Grid Views > High Prob Matches.)


Module SQL Queries

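SQL queries are stored as .sql files in a module's queries/SCHEMA_NAME directory, and each can optionally have a metadata file. If supplied, the metadata file should have the same name as the .sql file, but with a ".query.xml" extension (e.g., PeptideCounts.query.xml); its format is defined by query.xsd.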

Below we will create two SQL queries in the ms2 schema.

- Add two .sql files in the queries/ms2 directory, as follows:

reportDemo
│   module.properties
└───resources
    ├───queries
    │   └───ms2
    │       │   PeptideCounts.sql
    │       │   PeptidesWithCounts.sql
    │       └───Peptides
    │               High Prob Matches.qview.xml
    ├───reports
    └───views

Add the following contents to the files:

PeptideCounts.sql

SELECT

COUNT(Peptides.TrimmedPeptide) AS UniqueCount,

Peptides.Fraction.Run AS Run,

Peptides.TrimmedPeptide

FROM

Peptides

WHERE

Peptides.PeptideProphet >= 0.9

GROUP BY

Peptides.TrimmedPeptide,

Peptides.Fraction.Run

PeptidesWithCounts.sql

SELECT

pc.UniqueCount,

pc.TrimmedPeptide,

pc.Run,

p.PeptideProphet,

p.FractionalDeltaMass

FROM

PeptideCounts pc

INNER JOIN

Peptides p

ON (p.Fraction.Run = pc.Run AND pc.TrimmedPeptide = p.TrimmedPeptide)

WHERE pc.UniqueCount > 1

Note that the .sql files may contain spaces in their names.
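To see what the two queries compute, the following JavaScript sketch reproduces their logic on in-memory rows. It assumes each row already carries a flattened `Run` value (standing in for the Peptides.Fraction.Run lookup) and that TrimmedPeptide is never null. The function names mirror the query names, and the sample data is hypothetical.

```javascript
// PeptideCounts.sql: count peptides with PeptideProphet >= 0.9, grouped by
// (TrimmedPeptide, Run).
function peptideCounts(peptides) {
  const groups = new Map();
  for (const p of peptides) {
    if (!(p.PeptideProphet >= 0.9)) continue;              // WHERE PeptideProphet >= 0.9
    const key = JSON.stringify([p.TrimmedPeptide, p.Run]); // GROUP BY TrimmedPeptide, Run
    if (!groups.has(key)) {
      groups.set(key, { UniqueCount: 0, Run: p.Run, TrimmedPeptide: p.TrimmedPeptide });
    }
    groups.get(key).UniqueCount += 1;                      // COUNT(TrimmedPeptide)
  }
  return [...groups.values()];
}

// PeptidesWithCounts.sql: join the counts back to every peptide row with the
// same Run and TrimmedPeptide, keeping only groups counted more than once.
function peptidesWithCounts(peptides) {
  const result = [];
  for (const pc of peptideCounts(peptides)) {
    if (!(pc.UniqueCount > 1)) continue;                   // WHERE pc.UniqueCount > 1
    for (const p of peptides) {                            // INNER JOIN on Run and TrimmedPeptide
      if (p.Run === pc.Run && p.TrimmedPeptide === pc.TrimmedPeptide) {
        result.push({
          UniqueCount: pc.UniqueCount,
          TrimmedPeptide: pc.TrimmedPeptide,
          Run: pc.Run,
          PeptideProphet: p.PeptideProphet,
          FractionalDeltaMass: p.FractionalDeltaMass,
        });
      }
    }
  }
  return result;
}

const peptides = [
  { TrimmedPeptide: "AAK", Run: 1, PeptideProphet: 0.95, FractionalDeltaMass: 0.01 },
  { TrimmedPeptide: "AAK", Run: 1, PeptideProphet: 0.92, FractionalDeltaMass: 0.02 },
  { TrimmedPeptide: "AAK", Run: 1, PeptideProphet: 0.3, FractionalDeltaMass: 0.03 },
  { TrimmedPeptide: "CCR", Run: 1, PeptideProphet: 0.99, FractionalDeltaMass: 0.04 },
];
console.log(peptideCounts(peptides).map((c) => `${c.TrimmedPeptide}:${c.UniqueCount}`)); // [ 'AAK:2', 'CCR:1' ]
console.log(peptidesWithCounts(peptides).length); // 3
```

The join in PeptidesWithCounts matches against all peptide rows, not only those at or above 0.9. That is why the low-scoring AAK row appears in the output.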

See the SQL Queries

- Build and restart the server.

- To view your SQL queries, go to the schema browser at Admin -> Developer Links -> Schema Browser.

- On the left side, open the nodes ms2-> user-defined queries -> PeptideCounts.

Optionally, you can add metadata to these queries to enhance them. See Modules: Query Metadata.


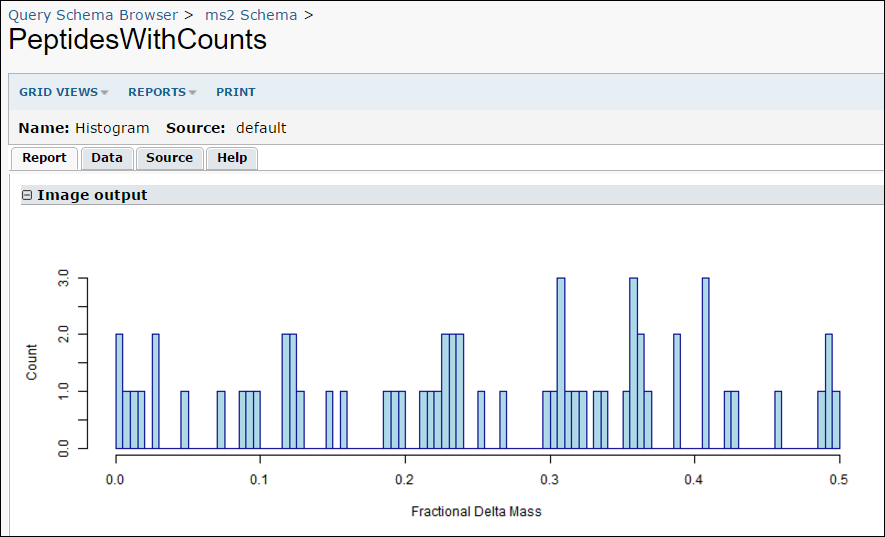

Module R Reports

Below we'll make an R report script that is associated with the PeptidesWithCounts query (created in the previous step).

- In the reports/ directory, create the subdirectories schemas/ms2/PeptidesWithCounts, and inside them a file named "Histogram.r", so that the file's path is reportDemo/resources/reports/schemas/ms2/PeptidesWithCounts/Histogram.r.

- Open the Histogram.r file, enter the following script, and save the file. (Note that .r files may have spaces in their names.)

png(

filename="${imgout:labkeyl_png}",

width=800,

height=300)

hist(

labkey.data$fractionaldeltamass,

breaks=100,

xlab="Fractional Delta Mass",

ylab="Count",

main=NULL,

col = "light blue",

border = "dark blue")

dev.off()

Report Metadata

Optionally, you can add associated metadata about the report. See Modules: Report Metadata.

Test your SQL Query and R Report

- Go to the Query module's home page (Admin -> Go to Module -> Query). Note that the home page of the Query module is the Query Browser.

- Open the ms2 node, and see your two new queries in the user-defined queries section.

- Click on PeptidesWithCounts and then View Data to run the query and view the results.

- While viewing the results, you can run your R report by selecting Views -> Histogram.


Module HTML and Web Parts

Since getting to the Query module's start page is not obvious for most users, we will provide an HTML view for a direct link to the query results. You can do this in a wiki page, but that must be created on the server, and our goal is to provide everything in the module itself. Instead we will create an HTML view and an associated web part.

Add an HTML Page

Under the views/ directory, create a new file named reportdemo.html, and enter the following HTML:

<p>

<a id="pep-report-link"

href="<%=contextPath%><%=containerPath%>/query-executeQuery.view?schemaName=ms2&query.queryName=PeptidesWithCounts">

Peptides With Counts Report</a>

</p>

Note that .html view files must not contain spaces in the file names. The view servlet expects that action names do not contain spaces.

Use contextPath and containerPath

Note the use of the <%=contextPath%> and <%=containerPath%> tokens in the URL's href attribute. These tokens will be replaced with the server's context path and the current container path respectively. For syntax details, see LabKey URLs.

Since the href in this case needs to refer to an action in another controller, we can't use a simple relative URL, as it would refer to another action in the same controller. Instead, use the contextPath token to get back to the web application root, and then build your URL from there.

Note that the containerPath token always begins with a slash, so you don't need to put a slash between <%=contextPath%> and this token. If you do, it will still work, as the server automatically ignores double slashes.
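As a sketch of the substitution described above, the following JavaScript replaces the two tokens with example values. The context path /labkey and container path /home/Study are hypothetical, and the server's actual implementation may differ.

```javascript
// Replace the two view tokens with concrete values. A replacer function is used
// so that '$' characters in the values are inserted literally.
function expandViewTokens(html, { contextPath, containerPath }) {
  return html
    .replace(/<%=contextPath%>/g, () => contextPath)
    .replace(/<%=containerPath%>/g, () => containerPath);
}

const href =
  "<%=contextPath%><%=containerPath%>/query-executeQuery.view?schemaName=ms2&query.queryName=PeptidesWithCounts";
console.log(expandViewTokens(href, { contextPath: "/labkey", containerPath: "/home/Study" }));
// /labkey/home/Study/query-executeQuery.view?schemaName=ms2&query.queryName=PeptidesWithCounts
```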

Define a View Wrapper

Next, create a view metadata file with the same base name as the HTML file, "reportdemo", but with the extension ".view.xml". In this case, the file is called reportdemo.view.xml, and it should contain the following:

<view xmlns="http://labkey.org/data/xml/view"

frame="none" title="Report Demo">

</view>

Define a Web Part

To make this view available as a web part, create the final file, the web part definition. Create a file in the views/ directory called reportdemo.webpart.xml and enter the following content:

<webpart xmlns="http://labkey.org/data/xml/webpart" title="Report Demo">

<view name="reportdemo"/>

</webpart>

After creating this file, you should now be able to refresh the portal page in your folder and see the "Report Demo" web part in the list of available web parts. Add it to the page, and it should display the contents of the reportdemo.html view, which contains links to take users directly to your module-defined queries and reports.

Your directory structure should now look like this:

externalModules/
└───reportDemo/
    └───resources/
        ├───reports/
        │   └───schemas/
        │       └───ms2/
        │           └───PeptidesWithCounts/
        │                   Histogram.r
        ├───queries/
        │   └───ms2/
        │       │   PeptideCounts.sql
        │       │   PeptidesWithCounts.sql
        │       └───Peptides/
        │               High Prob Matches.qview.xml
        └───views/
                reportdemo.html
                reportdemo.view.xml
                reportdemo.webpart.xml

Set Required Permissions

You might also want to require specific permissions to see this view. That is easily added to the reportdemo.view.xml file like this:

<view xmlns="http://labkey.org/data/xml/view" title="Report Demo">

<permissions>

<permission name="read"/>

</permissions>

</view>

You may add other permission elements, and they will all be combined together, requiring all permissions listed. If all you want to do is require that the user is signed in, you can use the value of "login" in the name attribute.

The XSD for this meta-data file is view.xsd in the schemas/ directory of the project. The LabKey XML Schema Reference provides an easy way to navigate the documentation for view.xsd.


Modules: JavaScript Libraries

- Acquire the library .js file you want to use.

- In your module's resources directory, create a subdirectory named "web".

- Inside "web", create a subdirectory with the same name as your module. For example, if your module is named 'helloworld', create the following directory structure:

    resources/web/helloworld/

- Copy the library .js file into this directory. For example, if you wish to use a jQuery library, place the library file as shown below:

    resources/web/helloworld/jquery-2.2.3.min.js

- For any HTML pages that use the library, create a .view.xml file and add a "dependencies" section to it.

- For example, if you have a page called helloworld.html, create a file named helloworld.view.xml next to it in the views/ directory.

- Finally, add the following "dependencies" section to the .view.xml file:

<view xmlns="http://labkey.org/data/xml/view" title="Hello, World!">

<dependencies>

<dependency path="helloworld/jquery-2.2.3.min.js"></dependency>

</dependencies>

</view>

Note: if you declare dependencies explicitly in the .view.xml file, you don't need to use LABKEY.requiresScript on the HTML page.

Remote Dependencies

In some cases, you can declare your dependency using a URL that points directly to the remote library, instead of copying the library file and distributing it with your module:

<dependency path="https://code.jquery.com/jquery-2.2.3.min.js"></dependency>

Related Topics

Modules: Assay Types

Topics

- Tutorial: Define an Assay Type in a Module - Get started writing module-based assays.

- Assay Custom Domains - Create a custom result domain.

- Assay Custom Views - Add custom HTML details views.

- Example Assay JavaScript Objects - JavaScript examples for assay modules.

- Assay Query Metadata - Provide additional properties to a query.

- Transformation Scripts - Transform data before updates and inserts using scripts.

- Customize Batch Save Behavior - Customize batch save behavior using Java.

- Suggestions for Extensible Assays - An informative discussion from the LabKey Support Boards

Examples: Module-Based Assays

There are a handful of module-based assays in the LabKey SVN tree. You can find the modules in <LABKEY_ROOT>/server/customModules. Examples include:

- <LABKEY_ROOT>/server/customModules/exampleassay/resources/assay

- <LABKEY_ROOT>/server/customModules/iaviElisa/elisa/assay/elisa

- <LABKEY_ROOT>/server/customModules/idri/resources/assay/particleSize

File Structure

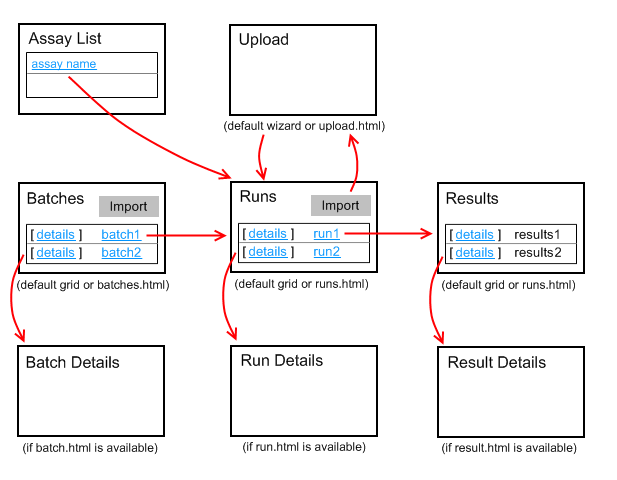

The assay consists of an assay config file, a set of domain descriptions, and view html files. The assay is added to a module by placing it in an assay directory at the top-level of the module. The assay has the following file structure:

The only required part of the assay is the <assay-name> directory. The config.xml, domain files, and view files are all optional.

This diagram shows the relationship between the pages. The details link will only appear if the corresponding details html view is available.

How to Specify an Assay "Begin" Page

Module-based assays can be designed to jump to a "begin" page instead of a "runs" page. If an assay has a begin.html in the assay/<name>/views/ directory, users are directed to this page instead of the runs page when they click on the name of the assay in the assay list.

Tutorial: Define an Assay Type in a Module

To create a module-based assay, you create a set of files that define the new assay design, describe the data import process, and define various types of assay views. The new assay is incorporated into your server when you package these files as a module and restart your server. The new type of assay is then available on your server as the basis for new assay designs, in the same way that built-in assay types (e.g., Luminex) are available.

This tutorial explains how to incorporate a ready-made, module-based assay into your LabKey Server and make use of the new type of assay. It does not cover creation of the files that compose a module-based assay. Please refer to the "Related Topics" section below for instructions on how to create such files.

Download

First download a pre-packed .module file and deploy it to LabKey Server.

- Download exampleassay.module. (This is a renamed .zip archive that contains the source files for the assay module.)

Add the Module to your LabKey Server Installation

- On a local build of LabKey Server, copy exampleassay.module to a module deployment directory, such as <LABKEY_HOME>\build\deploy\modules\

- Or, on a local install of LabKey Server, copy exampleassay.module to this location: <LABKEY_HOME>\externalModules\

- Restart your server. The server will explode (unpack) the module archive into a directory.

- Examine the files in the exploded directory. You will see the following structure:

exampleassay
└───assay
    └───example
        │   config.xml
        │
        ├───domains
        │       batch.xml
        │       result.xml
        │       run.xml
        │
        └───views
                upload.html

- upload.html contains the UI that the user will see when importing data to this type of assay.

- batch.xml, result.xml, and run.xml provide the assay's design, i.e., the names of the fields, their data types, whether they are required fields, etc.

Enable the Module in a Folder

The assay module is now available through the UI. Here we enable the module in a folder.

- Create or select a folder to enable the module in, for example, a subfolder in the Home project.

- Select Admin > Folder > Management and then click the Folder Type tab.

- Place a checkmark next to the exampleassay module (under the "Modules" column on the right).

- Click the Update Folder button.

Use the Module's Assay Design

Next we create a new assay design based on the module.

- Select Admin > Manage Assays.

- On the Assay List page, click New Assay Design.

- Select LabKey Example and click Next.

- Name this assay "FileBasedAssay".

- Leave all other fields at default values and click Save and Close.

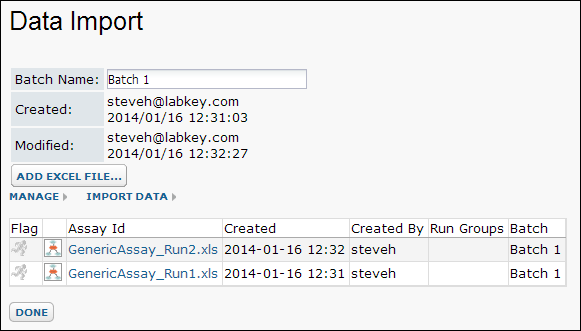

Import Data to the Assay Design

- Download these two sample assay data files: GenericAssay_Run1.xls and GenericAssay_Run2.xls.

- Click on the new FileBasedAssay in the Assay List.

- Click the Import Data button.

- Note that instead of the standard UI for importing data, you will see the custom UI for importing data defined by upload.html in exampleassay\assay\example\views.

- Also note the URL to the custom upload page. It follows the pattern: http://myserver.org/labkey/myproject/myfolder/assay-moduleAssayUpload.view?rowId=1

- Enter a value for Batch Name, for example, "Batch 1".

- Click Add Excel File and select GenericAssay_Run1.xls. (Wait a few seconds for the file to upload.)

- Notice that the Created and Modified fields are filled in automatically, as specified in the module-based assay's upload.html file.

- Click Import Data and repeat the import process for GenericAssay_Run2.xls.

- Click Done.
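The custom upload page URL noted in the steps above combines the server address, the LabKey context path, the folder path, and an assay-moduleAssayUpload.view action with a rowId parameter. The sketch below assembles a URL of that shape outside the browser; moduleAssayUploadUrl and its parameters are hypothetical. Inside a LabKey page, LABKEY.ActionURL.buildURL (used elsewhere in this document) is presumably the more natural tool, assuming "assay" is the controller and "moduleAssayUpload" the action.

```javascript
// Hypothetical helper that follows the URL pattern shown above:
// <base>/<context path>/<folder path>/assay-moduleAssayUpload.view?rowId=<id>
function moduleAssayUploadUrl(baseUrl, contextPath, containerPath, rowId) {
  // Split the folder path into segments and percent-encode each one.
  const segments = [contextPath, ...containerPath.split("/")]
    .filter((segment) => segment.length > 0)
    .map(encodeURIComponent);
  const url = new URL("/" + segments.join("/") + "/assay-moduleAssayUpload.view", baseUrl);
  url.searchParams.set("rowId", String(rowId));
  return url.toString();
}

console.log(moduleAssayUploadUrl("http://myserver.org", "labkey", "myproject/myfolder", 1));
// http://myserver.org/labkey/myproject/myfolder/assay-moduleAssayUpload.view?rowId=1
```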

Review Imported Data

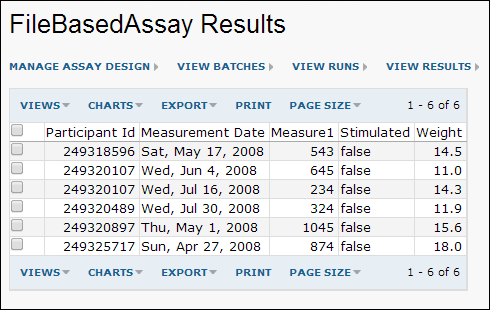

- Click on the first run (GenericAssay_Run1.xls) to see the data it contains. You will see data similar to the following:

- You can now integrate this data into any available target studies.

Related Topics

Assay Custom Domains

An assay module can define a custom domain to replace LabKey's built-in default assay domains, by adding a schema definition in the domains/ directory. For example:

assay/<assay-name>/domains/<domain-name>.xml

The name of the assay is taken from the <assay-name> directory. The <domain-name>.xml file contains the domain definition and conforms to the <domain> element from assayProvider.xsd, which is in turn a DomainDescriptorType from the expTypes.xsd XML schema. There are three built-in domains for assays: "batch", "run", and "result". The following result domain replaces the built-in result domain for assays:

result.xml

<ap:domain xmlns:exp="http://cpas.fhcrc.org/exp/xml"

xmlns:ap="http://labkey.org/study/assay/xml">

<exp:Description>This is my data domain.</exp:Description>

<exp:PropertyDescriptor>

<exp:Name>SampleId</exp:Name>

<exp:Description>The Sample Id</exp:Description>

<exp:Required>true</exp:Required>

<exp:RangeURI>http://www.w3.org/2001/XMLSchema#string</exp:RangeURI>

<exp:Label>Sample Id</exp:Label>

</exp:PropertyDescriptor>

<exp:PropertyDescriptor>

<exp:Name>TimePoint</exp:Name>

<exp:Required>true</exp:Required>

<exp:RangeURI>http://www.w3.org/2001/XMLSchema#dateTime</exp:RangeURI>

</exp:PropertyDescriptor>

<exp:PropertyDescriptor>

<exp:Name>DoubleData</exp:Name>

<exp:RangeURI>http://www.w3.org/2001/XMLSchema#double</exp:RangeURI>

</exp:PropertyDescriptor>

</ap:domain>

To deploy the module, the assay directory is zipped up as a <module-name>.module file and copied to the LabKey server's modules directory.

When you create a new assay design for that assay type, it will use the fields defined in the XML domain as a template for the corresponding domain. Changes to the domains in the XML files will not affect existing assay designs that have already been created.
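Once a design exists, each PropertyDescriptor from result.xml appears in LABKEY.page.assay (see Example Assay JavaScript Objects) with a typeName reported alongside its typeURI. The sketch below reproduces only the three pairings visible in that example object; it is not LabKey's full type list, typeNameFor is hypothetical, and the field list is hand-copied from result.xml rather than parsed. DoubleData omits <exp:Required>, and the example object reports it as required: false.

```javascript
const XSD = "http://www.w3.org/2001/XMLSchema#";

// Only the pairs that appear in the LABKEY.page.assay example; other types are not covered.
const TYPE_NAMES = new Map([
  [XSD + "string", "String"],
  [XSD + "dateTime", "DateTime"],
  [XSD + "double", "Double"],
]);

function typeNameFor(rangeUri) {
  return TYPE_NAMES.get(rangeUri) ?? null;
}

// The result domain from result.xml, reduced to name, required flag, and RangeURI.
const resultDomain = [
  { name: "SampleId", required: true, rangeUri: XSD + "string" },
  { name: "TimePoint", required: true, rangeUri: XSD + "dateTime" },
  { name: "DoubleData", required: false, rangeUri: XSD + "double" },
];

for (const field of resultDomain) {
  console.log(field.name, typeNameFor(field.rangeUri), field.required);
}
```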

Assay Custom Views

Add a Custom Details View

Suppose you want to add a [details] link to each row of an assay run table that takes you to a custom details view for that row. You can add new views to the module-based assay by adding HTML files in the views/ directory, for example:

assay/<assay-name>/views/<view-name>.html

The overall page template includes JavaScript objects as context so that they are available within the view, avoiding an extra client API request to fetch them from the server. For example, the result.html page can access the assay definition and result data as LABKEY.page.assay and LABKEY.page.result, respectively. Here is an example custom details view named result.html:

<table>
  <tr>
    <td class='labkey-form-label'>Sample Id</td>
    <td><div id='SampleId_div'>???</div></td>
  </tr>
  <tr>
    <td class='labkey-form-label'>Time Point</td>
    <td><div id='TimePoint_div'>???</div></td>
  </tr>
  <tr>
    <td class='labkey-form-label'>Double Data</td>
    <td><div id='DoubleData_div'>???</div></td>
  </tr>
</table>

<script type="text/javascript">
  // Write one property of the result row into its placeholder div.
  function setValue(row, property)
  {
    var div = document.getElementById(property + "_div");
    var value = row[property];
    // Treat only null, undefined, or empty values as missing, so 0 is still shown.
    if (value === null || value === undefined || value === "")
      value = "<none>";
    // textContent displays "<none>" literally and never interprets data as HTML.
    div.textContent = value;
  }

  // LABKEY.page.result is provided by the page template for result.html.
  if (LABKEY.page.result)
  {
    var row = LABKEY.page.result;
    setValue(row, "SampleId");
    setValue(row, "TimePoint");
    setValue(row, "DoubleData");
  }
</script>

Note that the details view reads the result data from LABKEY.page.result rather than requesting it from the server. See Example Assay JavaScript Objects for a description of the LABKEY.page.assay and LABKEY.page.result objects.

Add a custom view for a run

This works the same way as the custom details page for row data, except that the view file name is run.html and the run data is available as the LABKEY.page.run variable. See Example Assay JavaScript Objects for a description of the LABKEY.page.run object.

Add a custom view for a batch

This works the same way as the custom details page for row data, except that the view file name is batch.html and the batch data is available as the LABKEY.page.batch variable. See Example Assay JavaScript Objects for a description of the LABKEY.page.batch object.

Example Assay JavaScript Objects

LABKEY.page.assay:

The assay definition is available as LABKEY.page.assay for all of the html views. It is a JavaScript object, which is of type LABKEY.Assay.AssayDesign:

LABKEY.page.assay = {

"id": 4,

"projectLevel": true,

"description": null,

"name": <assay name>,

// domains objects: one for batch, run, and result.

"domains": {

// array of domain property objects for the batch domain

"<assay name> Batch Fields": [

{

"typeName": "String",

"formatString": null,

"description": null,

"name": "ParticipantVisitResolver",

"label": "Participant Visit Resolver",

"required": true,

"typeURI": "http://www.w3.org/2001/XMLSchema#string"

},

{

"typeName": "String",

"formatString": null,

"lookupQuery": "Study",

"lookupContainer": null,

"description": null,

"name": "TargetStudy",

"label": "Target Study",

"required": false,

"lookupSchema": "study",

"typeURI": "http://www.w3.org/2001/XMLSchema#string"

}

],

// array of domain property objects for the run domain

"<assay name> Run Fields": [{

"typeName": "Double",

"formatString": null,

"description": null,

"name": "DoubleRun",

"label": null,

"required": false,

"typeURI": "http://www.w3.org/2001/XMLSchema#double"

}],

// array of domain property objects for the result domain

"<assay name> Result Fields": [

{

"typeName": "String",

"formatString": null,

"description": "The Sample Id",

"name": "SampleId",

"label": "Sample Id",

"required": true,

"typeURI": "http://www.w3.org/2001/XMLSchema#string"

},

{

"typeName": "DateTime",

"formatString": null,

"description": null,

"name": "TimePoint",

"label": null,

"required": true,

"typeURI": "http://www.w3.org/2001/XMLSchema#dateTime"

},

{

"typeName": "Double",

"formatString": null,

"description": null,

"name": "DoubleData",

"label": null,

"required": false,

"typeURI": "http://www.w3.org/2001/XMLSchema#double"

}

]

},

"type": "Simple"

};

LABKEY.page.batch:

The batch object is available as LABKEY.page.batch on the upload.html and batch.html pages. The JavaScript object is an instance of LABKEY.Exp.RunGroup and is shaped like:

LABKEY.page.batch = new LABKEY.Exp.RunGroup({

"id": 8,

"createdBy": <user name>,

"created": "8 Apr 2009 12:53:46 -0700",

"modifiedBy": <user name>,

"name": <name of the batch object>,

"runs": [

// array of LABKEY.Exp.Run objects in the batch. See next section.

],

// map of batch properties

"properties": {

"ParticipantVisitResolver": null,

"TargetStudy": null

},

"comment": null,

"modified": "8 Apr 2009 12:53:46 -0700",

"lsid": "urn:lsid:labkey.com:Experiment.Folder-5:2009-04-08+batch+2"

});

LABKEY.page.run:

The run detail object is available as LABKEY.page.run on the run.html pages. The JavaScript object is an instance of LABKEY.Exp.Run and is shaped like:

LABKEY.page.run = new LABKEY.Exp.Run({

"id": 4,

// array of LABKEY.Exp.Data objects added to the run

"dataInputs": [{

"id": 4,

"created": "8 Apr 2009 12:53:46 -0700",

"name": "run01.tsv",

"dataFileURL": "file:/C:/Temp/assaydata/run01.tsv",

"modified": null,

"lsid": <filled in by the server>

}],

// array of objects, one for each row in the result domain

"dataRows": [

{

"DoubleData": 3.2,

"SampleId": "Monkey 1",

"TimePoint": "1 Nov 2008 11:22:33 -0700"

},

{

"DoubleData": 2.2,

"SampleId": "Monkey 2",

"TimePoint": "1 Nov 2008 14:00:01 -0700"

},

{

"DoubleData": 1.2,

"SampleId": "Monkey 3",

"TimePoint": "1 Nov 2008 14:00:01 -0700"

},

{

"DoubleData": 1.2,

"SampleId": "Monkey 4",

"TimePoint": "1 Nov 2008 00:00:00 -0700"

}

],

"createdBy": <user name>,

"created": "8 Apr 2009 12:53:47 -0700",

"modifiedBy": <user name>,

"name": <name of the run>,

// map of run properties

"properties": {"DoubleRun": null},

"comment": null,

"modified": "8 Apr 2009 12:53:47 -0700",

"lsid": "urn:lsid:labkey.com:SimpleRun.Folder-5:cf1fea1d-06a3-102c-8680-2dc22b3b435f"

});
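Because LABKEY.page.run.dataRows is a plain array of row objects, a run.html view can summarize results without another server request. A minimal sketch that averages a numeric result column; meanOf is a hypothetical helper, and the run object is a stand-in copied from the example above so the code runs outside a browser. In run.html you would pass LABKEY.page.run.dataRows instead.

```javascript
// Stand-in for LABKEY.page.run, keeping only the dataRows from the example above.
const run = {
  dataRows: [
    { DoubleData: 3.2, SampleId: "Monkey 1", TimePoint: "1 Nov 2008 11:22:33 -0700" },
    { DoubleData: 2.2, SampleId: "Monkey 2", TimePoint: "1 Nov 2008 14:00:01 -0700" },
    { DoubleData: 1.2, SampleId: "Monkey 3", TimePoint: "1 Nov 2008 14:00:01 -0700" },
    { DoubleData: 1.2, SampleId: "Monkey 4", TimePoint: "1 Nov 2008 00:00:00 -0700" },
  ],
};

// Average a numeric column, skipping rows where the value is null or undefined.
function meanOf(rows, column) {
  const values = rows
    .map((row) => row[column])
    .filter((value) => value !== null && value !== undefined);
  if (values.length === 0) {
    return null;
  }
  return values.reduce((sum, value) => sum + value, 0) / values.length;
}

console.log(meanOf(run.dataRows, "DoubleData").toFixed(2)); // 1.95
```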

LABKEY.page.result:

The result detail object is available as LABKEY.page.result on the result.html page. The JavaScript object is a map for a single row and is shaped like:

LABKEY.page.result = {

"DoubleData": 3.2,

"SampleId": "Monkey 1",

"TimePoint": "1 Nov 2008 11:22:33 -0700"

};

Assay Query Metadata

Query Metadata for Assay Tables

You can associate query metadata with an individual assay design, or all assay designs that are based on the same type of assay (e.g., "NAb" or "Viability").

Example. Assay table names are based upon the name of the assay design. For example, consider an assay design named "Example" that is based on the "Viability" assay type. This design would be associated with three tables in the schema explorer: "Example Batches", "Example Runs", and "Example Data."

Associate metadata with a single assay design. To attach query metadata to the "Example Data" table, you would normally create a /queries/assay/Example Data.query.xml metadata file. This would work well for the "Example Data" table itself. However, this method would not allow you to re-use this metadata file for a new assay design that is also based on the same assay type ("Viability" in this case).

Associate metadata with all assay designs based on a particular assay type. To permit re-use of the metadata, you need to create a query metadata file whose name is based upon the assay type and table name. To continue our example, you would create a query metadata file called /assay/Viability/queries/Data.query.xml to attach query metadata to all data tables based on the Viability-type assay.

As with other query metadata in module files, the module must be activated (in other words, the appropriate checkbox must be checked) in the folder's settings.
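The two naming rules above can be written as a small helper. This is an illustrative sketch: metadataPaths is hypothetical, and since the text only shows the "Data" table, applying the same rule to "Batches" and "Runs" is an assumption.

```javascript
// Hypothetical helper: build both metadata file locations described above.
// tableSuffix is the part of the table name after the design name, e.g. "Data".
function metadataPaths(designName, assayType, tableSuffix) {
  return {
    // Attaches metadata to this one design's table only.
    singleDesign: `/queries/assay/${designName} ${tableSuffix}.query.xml`,
    // Attaches metadata to that table for every design of the assay type.
    allDesignsOfType: `/assay/${assayType}/queries/${tableSuffix}.query.xml`,
  };
}

const paths = metadataPaths("Example", "Viability", "Data");
console.log(paths.singleDesign); // /queries/assay/Example Data.query.xml
console.log(paths.allDesignsOfType); // /assay/Viability/queries/Data.query.xml
```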

See Modules: Queries, Views and Reports and Modules: Query Metadata for more information on query metadata.

Customize Batch Save Behavior

The AssaySaveHandler interface enables file-based assays to extend the functionality of the SaveAssayBatch action with Java code. A file-based assay can provide an implementation of this interface by creating a Java-based module and then putting the class under the module's src directory. This class can then be referenced by name in the <saveHandler/> element in the assay's config file. For example, an entry might look like:

<saveHandler>org.labkey.icemr.assay.tracking.TrackingSaveHandler</saveHandler>.

To implement this functionality:

- Create the skeleton framework for a Java module. This consists of a controller class, manager, etc. See Creating a New Java Module for details on autogenerating the boilerplate Java code.

- Add an assay directory underneath the Java src directory that corresponds to the file-based assay you want to extend. For example: myModule/src/org.labkey.mymodule/assay/tracking

- Implement the AssaySaveHandler interface. You can choose to either implement the interface from scratch or extend default behavior by having your class inherit from the DefaultAssaySaveHandler class. If you want complete control over the JSON format of the experiment data you want to save, you may choose to implement the AssaySaveHandler interface entirely. If you want to follow the pre-defined LABKEY experiment JSON format, then you can inherit from the DefaultAssaySaveHandler class and only override the specific piece you want to customize. For example, you may want custom code to run when a specific property is saved. (See below for more implementation details.)

- Reference your class in the assay's config.xml file. For example, notice the <ap:saveHandler/> entry below. If a non-fully-qualified name is used (as below) then LabKey Server will attempt to find this class under org.labkey.[module name].assay.[assay name].[save handler name].

<ap:provider xmlns:ap="http://labkey.org/study/assay/xml">

<ap:name>Flask Tracking</ap:name>

<ap:description>

Enables entry of a set of initial samples and then tracks

their progress over time via a series of daily measurements.

</ap:description>

<ap:saveHandler>TrackingSaveHandler</ap:saveHandler>

<ap:fieldKeys>

<ap:participantId>Run/PatientId</ap:participantId>

<ap:date>MeasurementDate</ap:date>

</ap:fieldKeys>

</ap:provider>

- The interface methods are invoked when the user chooses to import data into the assay or otherwise calls the SaveAssayBatch action. This is usually invoked by the Experiment.saveBatch JavaScript API. On the server, the file-based assay provider will look for an AssaySaveHandler specified in the config.xml and invoke its functions. If no AssaySaveHandler is specified, the DefaultAssaySaveHandler implementation is used.
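The lookup rule for a non-fully-qualified save handler name amounts to a string expansion. A minimal sketch; resolveSaveHandlerClass is hypothetical, treating any name that contains a dot as already fully qualified is an assumption, and the module and assay names "icemr" and "tracking" are inferred from the fully qualified example earlier in this section.

```javascript
// Hypothetical sketch of the rule described above: a short <saveHandler> name
// expands to org.labkey.[module name].assay.[assay name].[save handler name].
function resolveSaveHandlerClass(moduleName, assayName, saveHandler) {
  const name = saveHandler.trim();
  // Assumption: a name containing "." is already fully qualified.
  if (name.includes(".")) {
    return name;
  }
  return `org.labkey.${moduleName}.assay.${assayName}.${name}`;
}

console.log(resolveSaveHandlerClass("icemr", "tracking", "TrackingSaveHandler"));
// org.labkey.icemr.assay.tracking.TrackingSaveHandler
```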

SaveAssayBatch Details

The SaveAssayBatch action creates a new instance of the save handler for each request. SaveAssayBatch dispatches to the methods of this interface according to the format of the JSON Experiment Batch (or run group) sent to it by the client. If a client chooses to implement this interface directly, the order of method calls is:

- beforeSave

- handleBatch

- afterSave

If the class instead inherits from DefaultAssaySaveHandler, the default handler walks through all the runs in the batch, along with their inputs, outputs, materials, and properties, and the sequence of calls is:

- beforeSave

- handleBatch

  - handleProperties (for the batch)

  - handleRun (for each run)

    - handleProperties (for the run)

    - handleProtocolApplications

    - handleData (for each data output)

      - handleProperties (for the data)

    - handleMaterial (for each input material)

      - handleProperties (for the material)

    - handleMaterial (for each output material)

      - handleProperties (for the material)

- afterSave
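To make the DefaultAssaySaveHandler order concrete, the sketch below walks a batch object and records which handler methods would be called, in the order listed above. It does not call LabKey; defaultSaveCallOrder is hypothetical, and the run field names (dataOutputs, materialInputs, materialOutputs) are assumptions about the batch JSON shape.

```javascript
// Records the default call order for a batch; it only collects method names.
function defaultSaveCallOrder(batch) {
  const calls = ["beforeSave", "handleBatch", "handleProperties(batch)"];
  for (const run of batch.runs ?? []) {
    calls.push("handleRun", "handleProperties(run)", "handleProtocolApplications");
    (run.dataOutputs ?? []).forEach(() => calls.push("handleData", "handleProperties(data)"));
    (run.materialInputs ?? []).forEach(() => calls.push("handleMaterial(input)", "handleProperties(material)"));
    (run.materialOutputs ?? []).forEach(() => calls.push("handleMaterial(output)", "handleProperties(material)"));
  }
  calls.push("afterSave");
  return calls;
}

// One run with one data output, one input material, and no output materials.
const batch = {
  runs: [{ dataOutputs: [{ name: "run01.tsv" }], materialInputs: [{ name: "Sample 1" }], materialOutputs: [] }],
};
console.log(defaultSaveCallOrder(batch).join("\n"));
```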

Related Topics

SQL Scripts for Module-Based Assays

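To add a supporting table to a module-based assay (for example, a Reagents table that an assay domain field looks up to), you have some options: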

1) Manually import a list archive into the target folder.

2) Add the tables via SQL scripts included in the module. To insert data: use SQL DML scripts or create an initialize.html view that populates the table using LABKEY.Query.insertRows().

To add the supporting table using SQL scripts, add a schemas directory, as a sibling to the assay directory, as shown below.

exampleassay
├───assay
│   └───example
│       │   config.xml
│       │
│       ├───domains
│       │       batch.xml
│       │       result.xml
│       │       run.xml
│       │
│       └───views
│               upload.html
│
└───schemas
    │   SCHEMA_NAME.xml
    │
    └───dbscripts
        ├───postgresql
        │       SCHEMA_NAME-X.XX-Y.YY.sql
        └───sqlserver
                SCHEMA_NAME-X.XX-Y.YY.sql

To support only one database, include a script only for that database, and configure your module properties accordingly -- see "SupportedDatabases" in Module Properties Reference.
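Script file names in dbscripts follow the SCHEMA_NAME-X.XX-Y.YY.sql pattern shown in the tree. A minimal parser for that pattern, assuming X.XX and Y.YY are the starting and ending schema versions; versions are kept as strings so no ordering rule is implied. parseDbScriptName and the example file name are hypothetical.

```javascript
// Hypothetical parser for names following SCHEMA_NAME-X.XX-Y.YY.sql.
// The greedy (.+) lets schema names themselves contain hyphens.
function parseDbScriptName(fileName) {
  const match = /^(.+)-(\d+\.\d+)-(\d+\.\d+)\.sql$/.exec(fileName);
  if (match === null) {
    return null; // Not in the expected pattern.
  }
  return { schema: match[1], fromVersion: match[2], toVersion: match[3] };
}

console.log(parseDbScriptName("myreagents-0.00-1.00.sql"));
console.log(parseDbScriptName("notes.txt")); // null
```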

LabKey Server does not currently support adding assay types or lists via SQL scripts, but you can create a new schema to hold the table. For example, the following script creates a new schema called "myreagents" (on PostgreSQL):

DROP SCHEMA IF EXISTS myreagents CASCADE;

CREATE SCHEMA myreagents;

CREATE TABLE myreagents.Reagents

(

RowId SERIAL NOT NULL,

ReagentName VARCHAR(30) NOT NULL

);

ALTER TABLE ONLY myreagents.Reagents

ADD CONSTRAINT Reagents_pkey PRIMARY KEY (RowId);

INSERT INTO myreagents.Reagents (ReagentName) VALUES ('Acetic Acid');

INSERT INTO myreagents.Reagents (ReagentName) VALUES ('Baeyers Reagent');

INSERT INTO myreagents.Reagents (ReagentName) VALUES ('Carbon Disulfide');

Update the assay domain, adding a lookup/foreign key property to the Reagents table:

<exp:PropertyDescriptor>

<exp:Name>Reagent</exp:Name>

<exp:Required>false</exp:Required>

<exp:RangeURI>http://www.w3.org/2001/XMLSchema#int</exp:RangeURI>

<exp:Label>Reagent</exp:Label>

<exp:FK>

<exp:Schema>myreagents</exp:Schema>

<exp:Query>Reagents</exp:Query>

</exp:FK>

</exp:PropertyDescriptor>

If you'd like to allow admins to add/remove fields from the table, you can add an LSID column to your table and make it a foreign key to the exp.Object.ObjectUri column in the schema.xml file. This will allow you to define a domain for the table much like a list. The domain is per-folder so different containers may have different sets of fields.

For example, see customModules/reagent/resources/schemas/reagent.xml. It wires up the LSID lookup to the exp.Object.ObjectUri column:

<ns:column columnName="Lsid">

<ns:datatype>lsidtype</ns:datatype>

<ns:isReadOnly>true</ns:isReadOnly>

<ns:isHidden>true</ns:isHidden>

<ns:isUserEditable>false</ns:isUserEditable>

<ns:isUnselectable>true</ns:isUnselectable>

<ns:fk>

<ns:fkColumnName>ObjectUri</ns:fkColumnName>

<ns:fkTable>Object</ns:fkTable>

<ns:fkDbSchema>exp</ns:fkDbSchema>

</ns:fk>

</ns:column>

...and adds an "Edit Fields" button that opens the domain editor.

function editDomain(queryName)

{

var url = LABKEY.ActionURL.buildURL("property", "editDomain", null, {

domainKind: "ExtensibleTable",

createOrEdit: true,

schemaName: "myreagents",

queryName: queryName

});

window.location = url;

}

Transformation Scripts

Topics

- Use Transformation Scripts

- How Transformation Scripts Work

- Example Workflow: Develop a Transformation Script (perl)

- Example Transformation Scripts (perl)

- Transformation Scripts in R

- Transformation Scripts in Java

- Transformation Scripts for Module-based Assays

- Run Properties Reference

- Transformation Script Substitution Syntax

- Warnings in Transformation Scripts

Use Transformation Scripts

Each assay design can be associated with one or more validation or transformation scripts, which are run in the order specified. The script file extension (.r, .pl, etc.) identifies the script engine that will be used to run the transform script. For example, a script named test.pl will be run with the Perl scripting engine. Before you can run validation or transformation scripts, you must configure the necessary Scripting Engines.

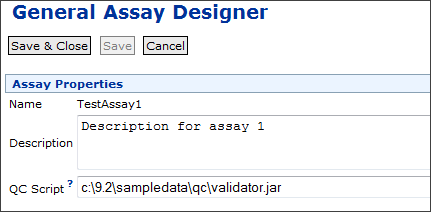

To specify a transform script in an assay design, you enter the full path including the file extension.

- Open the assay designer for a new assay, or edit an existing assay design.

- Click Add Script.

- Enter the full path to the script in the Transform Scripts field.

- You may enter multiple scripts by clicking Add Script again.

- Confirm that other Properties required by your assay type are correctly specified.

- Click Save and Close.

When you import (or re-import) run data using this assay design, the script will be executed. When you are developing or debugging transform scripts, you can use the Save Script Data option to store the files generated by the server that are passed to the script. Once your script is working properly, uncheck this box to avoid unnecessarily cluttering your disk.

A few notes on usage:

- Client API calls are not supported in transform scripts.

- Columns populated by transform scripts must already exist in the assay definition.

- Executed scripts show up in the experimental graph, providing a record that transformations and/or quality control scripts were run.

- Transform scripts are run before field-level validators.

- The script is invoked once per run upload.

- Multiple scripts are invoked in the order they are listed in the assay design.

The general purpose assay tutorial includes another example use of a transformation script in Set up a Data Transformation Script.

How Transformation Scripts Work

Script Execution Sequence

Transformation and validation scripts are invoked in the following sequence:

- A user uploads assay data.

- The server creates a runProperties.tsv file and rewrites the uploaded data in TSV format. Assay-specific properties and files from both the run and batch levels are added. See Run Properties Reference for full lists of properties.

- The server invokes the transform script, passing it the information created in the previous step (the runProperties.tsv file).

- After script completion, the server checks whether any errors have been written by the transform script and whether any data has been transformed.

- If transformed data is available, the server uses it for subsequent steps; otherwise, the original data is used.

- If multiple transform scripts are specified, the server invokes the other scripts in the order in which they are defined.

- Field-level validator/quality-control checks (including range and regular expression validation) are performed. (These field-level checks are defined in the assay definition.)

- If no errors have occurred, the run is loaded into the database.
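The sequence above can be modeled as a loop over the scripts followed by field-level validation. This is a simplified sketch, not the server's implementation: importRun, the { errors, transformedData } return shape, and stopping at the first script that reports errors are all assumptions made for illustration.

```javascript
// Each transform script takes the current rows and returns { errors, transformedData };
// each field-level validator takes rows and returns an array of error messages.
function importRun(originalRows, transformScripts, fieldValidators) {
  let rows = originalRows;
  // Scripts run in the order they are defined in the assay design.
  for (const script of transformScripts) {
    const result = script(rows);
    if (result.errors && result.errors.length > 0) {
      // Assumption: stop at the first script that reports errors.
      return { loaded: false, errors: result.errors };
    }
    // Use transformed data when the script produced it; otherwise keep the current rows.
    if (result.transformedData !== undefined) {
      rows = result.transformedData;
    }
  }
  // Field-level validators run only after all transform scripts.
  const errors = fieldValidators.flatMap((validate) => validate(rows));
  if (errors.length > 0) {
    return { loaded: false, errors };
  }
  return { loaded: true, rows };
}

// Example: one transform that doubles M1, then a validator that requires a numeric M1.
const doubleM1 = (rows) => ({
  errors: [],
  transformedData: rows.map((row) => ({ ...row, AdjustM1: 2 * row.M1 })),
});
const requireM1 = (rows) =>
  rows.filter((row) => typeof row.M1 !== "number").map(() => "M1 is required");

console.log(JSON.stringify(importRun([{ M1: 435 }], [doubleM1], [requireM1])));
// {"loaded":true,"rows":[{"M1":435,"AdjustM1":870}]}
```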

Passing Run Properties to Transformation Scripts

Information on run properties can be passed to a transform script in two ways. You can put a substitution token into your script to identify the run properties file, or you can configure your scripting engine to pass the file path as a command line argument. See Transformation Script Substitution Syntax for a list of available substitution tokens.

For example, using perl:

Option #1: Put a substitution token (${runInfo}) into your script and the server will replace it with the path to the run properties file. Here's a snippet of a perl script that uses this method:

# Open the run properties file. Run or upload set properties are not used by

# this script. We are only interested in the file paths for the run data and

# the error file.

open(my $reportProps, '<', '${runInfo}') or die "Cannot open run properties file: $!";

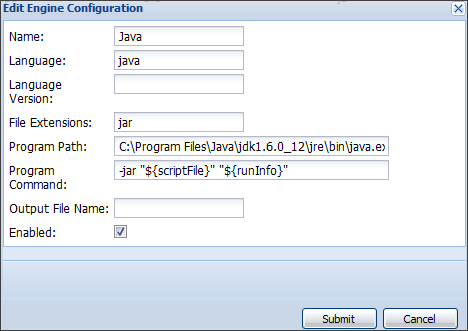

Option #2: Configure your scripting engine definition so that the file path is passed as a command line argument:

- Go to Admin > Site > Admin Console.

- Click Views and Scripting.

- Select and edit the perl engine.

- Add ${runInfo} to the Program Command field.
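Both options rely on the server replacing ${runInfo} with the path to the run properties file before the script or command runs. A minimal sketch of that kind of token replacement; substituteTokens, the rule of leaving unknown tokens unchanged, and the sample path are assumptions, not LabKey's implementation.

```javascript
// Replace ${name} tokens with supplied values; unknown tokens are left as-is.
function substituteTokens(text, values) {
  return text.replace(/\$\{(\w+)\}/g, (token, name) =>
    Object.prototype.hasOwnProperty.call(values, name) ? values[name] : token);
}

// A perl line using the token; "$reportProps" has no braces, so it is untouched.
const perlLine = "open(my $reportProps, '<', '${runInfo}')";
console.log(substituteTokens(perlLine, { runInfo: "/tmp/work/runProperties.tsv" }));
// open(my $reportProps, '<', '/tmp/work/runProperties.tsv')
```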

Example Workflow: Develop a Transformation Script (perl)

This workflow develops a transformation script in perl. Transformation scripts can be used to:

- transform run data

- transform run properties

Script Engine Setup

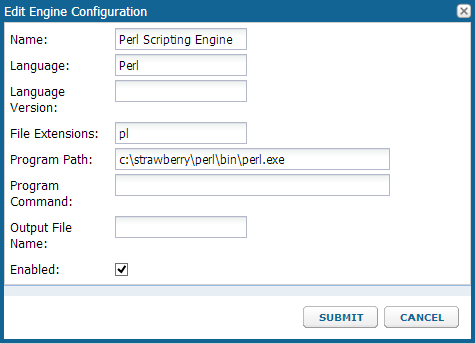

Before you can develop or run validation or transform scripts, configure the necessary Scripting Engines. You only need to set up a scripting engine once per type of script. You will need a copy of Perl running on your machine to set up the engine.

- Select Admin > Site > Admin Console.

- Click Views and Scripting.

- Click Add > New Perl Engine.

- Fill in as shown, specifying the "pl" extension and full path to the perl executable.

- Click Submit.

Add a Script to the Assay Design

Create a new empty .pl file in the development location of your choice and include it in your assay design.

- Navigate to the Assay Tutorial.

- Click GenericAssay in the Assay List web part.

- Select Manage Assay Design > copy assay design.

- Click Copy to Current Folder.

- Enter a new name, such as "TransformedAssay".

- Click Add Script and type the full path to the new script file you are creating.

- Check the box for Save Script Data.

- Confirm that the batch, run, and data fields are correct.

- Click Save and Close.

Obtain Test Data

To assist in writing your transform script, you will next obtain sample "runData.tsv" and "runProperties.tsv" files showing the state of your data import 'before' the transform script would be applied. To generate useful test data, you need to import a data run using the new assay design.

- Open and select the following file (if you have already imported this file during the tutorial, you will first need to delete that run):

LabKeyDemoFiles/Assays/Generic/GenericAssay_Run4.xls

- Click Import Data.

- Select the TransformedAssay design you just defined, then click Import.

- Click Next, then Save and Finish.

- When the import completes, select Manage Assay Design > edit assay design.

- You will now see a Download Test Data button that was not present during initial assay design.

- Click it and unzip the downloaded "sampleQCData" package to see the .tsv files.

- Open the "runData.tsv" file to view the current fields.

| Date | VisitID | ParticipantID | M3 | M2 | M1 | SpecimenID |
|---|---|---|---|---|---|---|
| 12/17/2013 | 1234 | demo value | 1234 | 1234 | 1234 | demo value |
| 12/17/2013 | 1234 | demo value | 1234 | 1234 | 1234 | demo value |
| 12/17/2013 | 1234 | demo value | 1234 | 1234 | 1234 | demo value |
| 12/17/2013 | 1234 | demo value | 1234 | 1234 | 1234 | demo value |
| 12/17/2013 | 1234 | demo value | 1234 | 1234 | 1234 | demo value |

Save Script Data

Typically, transform and validation script data files are deleted when the script completes. For debugging, it can be helpful to view the files that the server generates and passes to the script. When the Save Script Data checkbox is checked, these files are saved to a subfolder named "TransformAndValidationFiles" in the same folder as the original script. Beneath that folder is a subfolder for the AssayId, and below that a numbered directory for each run. In that nested subdirectory you will find a new "runDataFile.tsv" that contains the values from the run file mapped onto the current fields.

| participantid | Date | M1 | M2 | M3 |
|---|---|---|---|---|
| 249318596 | 2008-06-07 00:00 | 435 | 1111 | 15.0 |
| 249320107 | 2008-06-06 00:00 | 456 | 2222 | 13.0 |
| 249320107 | 2008-03-16 00:00 | 342 | 3333 | 15.0 |
| 249320489 | 2008-06-30 00:00 | 222 | 4444 | 14.0 |
| 249320897 | 2008-05-04 00:00 | 543 | 5555 | 32.0 |
| 249325717 | 2008-05-27 00:00 | 676 | 6666 | 12.0 |

Define the Desired Transformation

The runData.tsv file gives you the basic fields layout. Decide how you need to modify the default data. For example, perhaps for our project we need an adjusted version of the value in the M1 field - we want the doubled value available as an integer.

Add Required Fields to the Assay Design

- Select Manage Assay Design > edit assay design.

- Scroll down to the Data Fields section and click Add Field.

- Enter "AdjustM1", "Adjusted M1", and select type "Integer".

- Click Save and Close.

Write a Script to Transform Run Data

Now you have the information you need to write and refine your transformation script. Open the empty script file and paste the contents of the Modify Run Data box from this page: Example Transformation Scripts (perl).

Iterate over the Sample Run

Re-import the same run using the transform script you have defined.

- From the run list, select the run and click Re-import Run.

- Click Next.

- Under Run Data, click Use the data file(s) already uploaded to the server.

- Click Save and Finish.

The results now show the new field populated with the Adjusted M1 value.

Until the results are as desired, edit the script and use Re-import Run to retry.

Once your transformation script is working properly, re-edit the assay design one more time to uncheck the Save Script Data box - otherwise your script will continue to generate artifacts with every run and could eventually fill your disk.

Debugging Transformation Scripts

If your script has errors that prevent import of the run, you will see red text in the Run Properties window. This happens, for example, if you fail to select the correct data file.

A type mismatch between your script results and the defined destination field also produces an import error.

Errors File

If a validation script needs to report an error for the server to display, it writes error records to an errors file. The location of the errors file is specified by the errorsFile entry in the run properties file. The errors file is in a tab-delimited format with three columns:

- type: error, warning, info, etc.

- property: (optional) the name of the property that the error occurred on.

- message: the text message that is displayed by the server.

| type | property | message |
|---|---|---|
| error | runDataFile | A duplicate PTID was found : 669345900 |
| error | assayId | The assay ID is in an invalid format |
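The Python sketch below writes errors in this format. Each record becomes one tab-delimited line of type, property, and message, with an empty middle column when no property applies. It assumes the file contains data rows only, with no header line, and that the script has already read the target path from the errorsFile entry of the run properties file. The function names are hypothetical.

```python
def format_errors_file(records):
    """Render (type, property, message) records as tab-delimited lines."""
    lines = []
    for err_type, prop, message in records:
        fields = [err_type, prop or "", message]  # property is optional
        # A tab or line break inside a field would break the three-column layout.
        cleaned = [str(f).replace("\t", " ").replace("\r", " ").replace("\n", " ") for f in fields]
        lines.append("\t".join(cleaned) + "\n")
    return "".join(lines)


def write_errors_file(path, records):
    """Write the records to the path given by the errorsFile run property."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(format_errors_file(records))
```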

Example Transformation Scripts (perl)

- Modify Run Data

- Modify Run Properties

Modify Run Data

This script is used in the Example Workflow: Develop a Transformation Script (perl) and populates a new field with data derived from an existing field in the run.

```perl
#!/usr/local/bin/perl
use strict;
use warnings;

# Open the run properties file. Run or upload set properties are not used by
# this script. We are only interested in the file paths for the run data.
# The server replaces ${runInfo} with the file's path before running the script.
open my $reportProps, '<', '${runInfo}' or die "Can't open run properties file: $!";

my $dataFileName = "unknown";
my %transformFiles;

# Parse the data file properties from reportProps and save the transformed data location
# in a map. It's possible for an assay to have more than one transform data file, although
# most will only have a single one.
while (my $line = <$reportProps>)
{
    chomp($line);
    my @row = split(/\t/, $line);
    next unless @row;    # skip blank lines

    if ($row[0] eq 'runDataFile')
    {
        $dataFileName = $row[1];
        # transformed data location is stored in column 4
        $transformFiles{$dataFileName} = $row[3];
    }
}
close $reportProps;

# Read each line from the uploaded data file and insert new data (double the value in the M1 field)
# into an additional column named 'Adjusted M1'. The additional column must already exist in the assay
# definition and be of the correct type.
while (my ($key, $value) = each %transformFiles)
{
    open my $dataFile, '<', $key or die "Can't open '$key': $!";
    open my $transformFile, '>', $value or die "Can't open '$value': $!";

    # Copy the header row (minus carriage returns) and append the new column name.
    my $header = <$dataFile>;
    chomp($header);
    $header =~ s/\r//g;
    print $transformFile $header, "\t", "Adjusted M1", "\n";

    # Find the M1 column by name rather than by a fixed character offset.
    my @columns = split(/\t/, $header);
    my ($m1Index) = grep { lc($columns[$_]) eq 'm1' } 0 .. $#columns;
    die "No M1 column found in '$key'" unless defined $m1Index;

    while (my $line = <$dataFile>)
    {
        chomp($line);
        $line =~ s/\r//g;
        my @fields = split(/\t/, $line, -1);
        my $adjustM1 = int($fields[$m1Index] * 2);
        print $transformFile $line, "\t", $adjustM1, "\n";
    }

    close $dataFile;
    close $transformFile;
}
```

Modify Run Properties

You can also define a transform script that modifies the run properties, as shown in this example, which parses the short filename out of the full path:

```perl
#!/usr/local/bin/perl
use strict;
use warnings;

# Open the run properties file. Run or upload set properties are not used by
# this script; we are only interested in the file paths for the transformed
# run properties file and the uploaded data file.
open my $reportProps, '<', $ARGV[0] or die "Can't open run properties file: $!";

my $transformFileName = "unknown";
my $uploadedFile = "unknown";

while (my $line = <$reportProps>)
{
    chomp($line);
    my @row = split(/\t/, $line);
    next unless @row;    # skip blank lines

    if ($row[0] eq 'transformedRunPropertiesFile')
    {
        $transformFileName = $row[1];
    }
    if ($row[0] eq 'runDataUploadedFile')
    {
        $uploadedFile = $row[1];
    }
}
close $reportProps;

if ($transformFileName eq 'unknown')
{
    die "Unable to find the transformed run properties data file";
}

open my $transformFile, '>', $transformFileName or die "Can't open '$transformFileName': $!";

# Parse out just the filename portion: start after the last path separator
# (backslash or forward slash) and stop before the ".xls" extension.
my $slash = rindex($uploadedFile, "/");
my $backslash = rindex($uploadedFile, "\\");
my $i = ($slash > $backslash ? $slash : $backslash) + 1;
my $j = index($uploadedFile, ".xls", $i);
$j = length($uploadedFile) if $j < 0;    # no ".xls" extension: keep the rest

# Add a value for FileID
print $transformFile "FileID", "\t", substr($uploadedFile, $i, $j - $i), "\n";
close $transformFile;
```
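For comparison, here is a Python sketch of the same filename extraction using pathlib.PureWindowsPath, which treats both backslashes and forward slashes as separators. Unlike the Perl example, which cuts at ".xls", stem drops whatever the final extension is. Both function names are hypothetical.

```python
from pathlib import PureWindowsPath


def file_id_from_upload(uploaded_path: str) -> str:
    """Return the uploaded file's name without its directory or final extension."""
    # PureWindowsPath accepts both "\" and "/" as path separators.
    return PureWindowsPath(uploaded_path).stem


def file_id_line(uploaded_path: str) -> str:
    """Build the FileID line to write to the transformed run properties file."""
    return f"FileID\t{file_id_from_upload(uploaded_path)}\n"
```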

Transformation Scripts in R

Overview

Users importing instrument-generated tabular datasets into LabKey Server may run into the following difficulties:

- Instrument-generated files often contain header lines before the main dataset, denoted by a leading # or ! or other symbol. These lines usually contain useful metadata about the protocol or reagents or samples tested, and in any case need to be skipped over to find the main data set.

- The file format is optimized for display, not for efficient storage and retrieval. For example, columns that correspond to individual samples are difficult to work with in a database.

- The data to be imported contains the display values from a lookup column, which need to be mapped to the foreign key values for storage.

First, we review how to hook up a transform script to an assay, and how the assay framework communicates with a transform script written in R.

Identifying the Path to the Script File

Transform scripts are designated as part of an assay by providing a fully qualified path to the script file in the designated field at the top of the assay instance definition. A convenient way to place the script file is to upload it using a File web part defined in the same folder as the assay definition. The fully qualified path to the script file is then the concatenation of the file root for the folder (for example, "C:\lktrunk\build\deploy\files\MyAssayFolderName\@files\", as determined by the Files page in the Admin Console) plus the file path to the script file as seen in the File web part (for example, "scripts\LoadData.R"). For the file path, LabKey Server accepts either backslashes (the default Windows format) or forward slashes.
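The concatenation described above can be sketched in Python with pathlib.PureWindowsPath. This assumes a Windows-style file root like the example. The helper normalizes forward slashes to backslashes and ignores a trailing separator on the root. script_path is a hypothetical name, not part of LabKey Server.

```python
from pathlib import PureWindowsPath


def script_path(file_root: str, relative_path: str) -> str:
    """Join a folder's file root with a script path as shown in the File web part."""
    # PureWindowsPath accepts both backslashes and forward slashes.
    return str(PureWindowsPath(file_root) / relative_path)
```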

When working on your own developer workstation, you can put the script file wherever you like, but putting it within the scope of the File manager makes it easier to deploy to a server. It also makes iterative development against a remote server easier, since you can use a WebDAV-enabled file editor to directly edit the same file that the server is calling.

If your transform script calls other script files to do its work, the normal way to pull in the source code is the source statement, for example:

```r
# R treats "\" as an escape character inside strings, so use forward slashes (or "\\").
source("C:/lktrunk/build/deploy/files/MyAssayFolderName/@files/Utils.R")
```

To keep the scripts easy to move to other servers, it is better to keep the script files together and use the built-in substitution token "${srcDirectory}". The server automatically fills this in with the directory where the called script file is located, for example:

```r
source("${srcDirectory}/Utils.R")
```

Accessing and Using the Run Properties File

The primary mechanism for communication between the LabKey assay framework and the transform script is the run properties file. Again, a substitution token tells the script code where to find this file. The script file should contain a line like:

```r
rpPath <- "${runInfo}"
```

When the script is invoked by the assay framework, the rpPath variable will contain the fully qualified path to the run properties file.
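The Python sketch below illustrates what the server does with these tokens before running the script. It fills ${runInfo} and ${srcDirectory} into script text using Python's string.Template, chosen only because it uses the same ${name} syntax. This is an assumption for illustration, not how LabKey Server implements the substitution. fill_tokens is a hypothetical name.

```python
from string import Template


def fill_tokens(script_text: str, run_info: str, src_directory: str) -> str:
    """Replace ${runInfo} and ${srcDirectory} with concrete paths."""
    # safe_substitute leaves any other $name (for example R's df$col) untouched.
    return Template(script_text).safe_substitute(
        runInfo=run_info, srcDirectory=src_directory
    )
```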

The run properties file contains three categories of properties:

1. Batch and run properties as defined by the user when creating an assay instance. These properties are of the format: <property name> <property value> <java data type>

for example,

gDarkStdDev 1.98223 java.lang.Double

When the transform script is called, these properties contain any values that the user has typed into the corresponding text boxes under the “Batch Properties” or “Run Properties” sections of the upload form. The transform script can assign or modify these properties based on calculations or on values read from the raw instrument data file. The script must then write the modified properties to the location specified by the transformedRunPropertiesFile property (see #3 below).

2. Context properties of the assay such as assayName, runComments, and containerPath. These are recorded in the same format as the user-defined batch and run properties, but they cannot be overwritten by the script.

3. Paths to input and output files. These are fully qualified paths that the script reads from or writes to. They are in a <property name> <property value> format without property types. The paths currently used are:

- a. runDataUploadedFile: the raw data file that was selected by the user and uploaded to the server as part of an import process. This can be an Excel file, a tab-separated text file, or a comma-separated text file.

- b. runDataFile: the imported data file after the assay framework has attempted to convert the file to .tsv format and match its columns to the assay data result set definition. The path points to a subfolder below the script file directory, for example:

C:\lktrunk\build\deploy\files\transforms\@files\scripts\TransformAndValidationFiles\AssayId_22\42\runDataFile.tsv

The AssayId_22\42 part of the directory path separates the temporary files from multiple executions by multiple scripts in the same folder.

- c. AssayRunTSVData: This file path is where the result of the transform script will be written. It will point to a unique file name in an “assaydata” directory that the framework creates at the root of the files tree. NOTE: this property is written on the same line as the runDataFile property.

- d. errorsFile: This path is where a transform or validation script can write out error messages for use in troubleshooting. It is not normally needed by an R script, because R scripts usually write errors to stdout, which the framework saves to a file named “<scriptname>.Rout”.
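The three categories above can be told apart by the shape of each line. The Python sketch below assumes tab-separated lines, as the Perl examples on this page do, and classifies them as follows:

- Lines with a java.* type in the third column are typed properties.
- The runDataFile line carries its transformed output location in the fourth column, as the Modify Run Data script notes.
- Any other two-column line is a file path.

Only a few Java types are converted; the rest are kept as strings. The third column of the runDataFile line is not interpreted. The function name and converter table are illustrative assumptions.

```python
JAVA_CONVERTERS = {
    "java.lang.Double": float,
    "java.lang.Integer": int,
    "java.lang.Long": int,
    "java.lang.Boolean": lambda s: s.lower() == "true",
}


def parse_run_properties(text):
    """Split run properties text into typed properties, file paths, and transforms.

    Returns (properties, paths, transforms):
      properties: {name: value} for lines with a java.* type in column 3
      paths:      {name: path} for other two-column lines, e.g. errorsFile
      transforms: {runDataFile path: transformed output path from column 4}
    """
    properties, paths, transforms = {}, {}, {}
    for line in text.splitlines():
        fields = line.split("\t")
        name = fields[0]
        if not name or len(fields) < 2:
            continue
        if name == "runDataFile" and len(fields) >= 4:
            # The transformed output location shares this line, in column 4.
            transforms[fields[1]] = fields[3]
        elif len(fields) >= 3 and fields[2].startswith("java."):
            convert = JAVA_CONVERTERS.get(fields[2], str)
            properties[name] = convert(fields[1]) if fields[1] else None
        else:
            paths[name] = fields[1]
    return properties, paths, transforms
```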