Start Here

Install the Server

Access the Server

Set Up a Folder & its Tools

Learn User Basics

Learn Admin Basics

Extend LabKey Server

Learn What's New in 9.1

9.1 Upgrade Tips

Learn What's New in 9.2

9.2 Upgrade Tips

Tutorials and Online Demos

Webinars and Videos

Roadmap for the Future

Administration

Installs and Upgrades

Before You Install

Install LabKey via Installer

Install LabKey Manually

Install Required Components

Configure the Web Application

Modify the Configuration File

Supported Tomcat Versions

Third-Party Components and Licenses

Manual install of caBIG™

Upgrade LabKey

Manual Upgrade

Upgrade PostgreSQL

Configure LDAP

Set Up MS Search Engines

Install the Enterprise Pipeline

Prerequisites for the Enterprise Pipeline

RAW to mzXML Converters

JMS Queue

Globus GRAM Server

Create a New Globus GRAM user

Configure LabKey Server to use the Enterprise Pipeline

Edit and Test Configuration

Using the Enterprise Pipeline

Configure the Conversion Service

Troubleshooting the Enterprise Pipeline

Install the Perl-Based MS2 Cluster Pipeline

Install the mzXML Conversion Service

Run the MS2 Cluster Pipeline

Example Setups and Configurations

Install CPAS on Linux

Example Installation of Flow Cytometry on Mac OSX

Configure FTP on Linux

Configure R on Linux

Configure the Virtual Frame Buffer on Linux

Set Up R

Set Up OpenSSO

Draft Material for OpenSSO

Customize "Look and Feel"

Troubleshooting

Projects and Folders

Create Project or Folder

Hidden Folders

Customize Folder

Reasons to Choose a "Custom"-Type Folder

Set Permissions

Manage Project Members

Navigate Folder Hierarchy

Move/Rename/Delete/Hide

Access Module Services

Add Web Parts

Manage Web Parts

Establish Terms of Use for Project

Security and Accounts

Site Administrator

Hide Admin Menus

User Accounts

Add Users

Manage Users

My Account

Anonymous Users

Security Groups

Global Groups

Project Groups

Site Groups

How Permissions Work

Permission Levels for Roles

Test Security Settings by Impersonating Users

Passwords

Authentication

Basic Authentication

Single Sign-On Overview

Admin Console

Site Settings

Look & Feel Settings

Web Site Theme

Additional Methods for Customizing Projects (DEPRECATED)

Navigation Element Customization (DEPRECATED)

Email Notification Customization

Backup and Maintenance

Administering the Site Down Servlet

Application & Module Inventory

Experiment

Xar Tutorial

XAR Tutorial Sample Files

Describing Experiments in CPAS

Xar.xml Basics

Describing Protocols

Describing LCMS2 Experiments

Overview of Life Sciences IDs

LSID Substitution Templates

Run Groups

Portal

Sub-Inventories

Application Inventory

Module Inventory

Web Part Inventory (Basic Wiki Version)

Web Part Inventory (Expanded Wiki Version)

Collaboration

Create a Collaboration Folder

Issues

Using the Issue Tracker

Administering the Issue Tracker

Messages

Using the Message Board

Administering the Message Board

Contacts

Wiki

Wiki Admin Guide

Wiki User Guide

Wiki Syntax Help

Advanced Wiki Syntax

Embed Live Content in Wikis

Web Part Configuration Properties

Wiki Attachment List

Discuss This

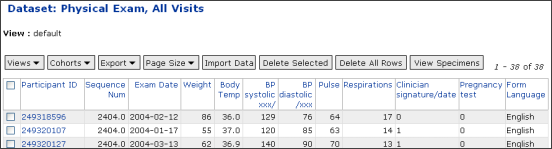

Study

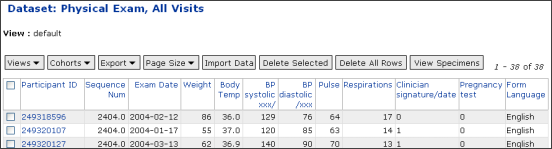

Study Tutorial

Set up the Demo Study

Set up Datasets and Specimens

Sort and Filter Grid Views

Create a Chart

Create an R View

Create an R View with Cairo

Explore Specimens

Overview

Study Administrator Guide

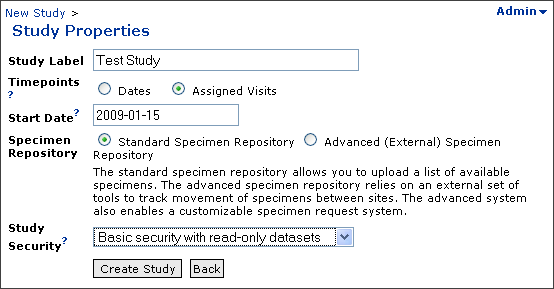

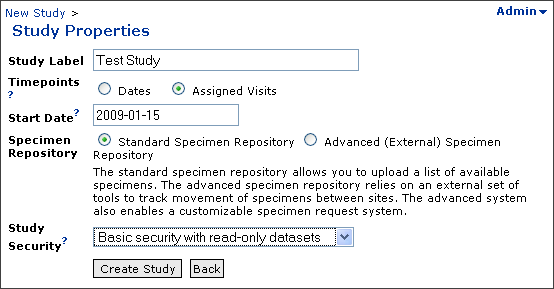

Create a Study

Directly Create Study

Use Study Designer

Import/Export/Reload a Study

Study Import/Export Formats

Manage a Study

Manage Datasets

Manage Visits

Manage Labs and Sites

Manage Cohorts

Manage Study Security

Configure Permissions for Reports & Views

Matrix of Dataset- and Folder-Level Permissions

Manage Views

Define and Map Visits

Advice on Defining Visits

Manually Create and Map Visits

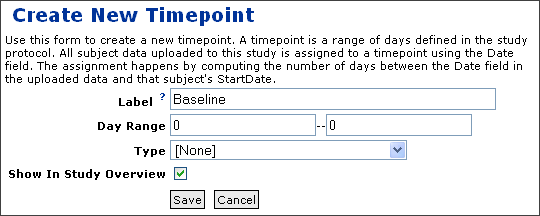

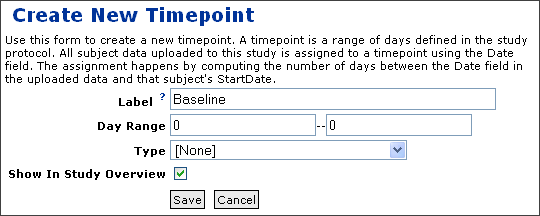

Create a Visit

Edit Visits

Map Visits

Identify Visit Dates

Import Visits and Visit Map

Create and Populate Datasets

Direct Import Pathway

Create a Single Dataset

Create a Single Dataset and Schema

Create Multiple Datasets and Schemas

Dataset Properties

Dataset Schema

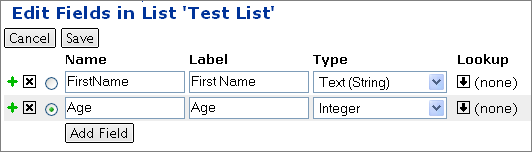

Schema Field Properties

Pre-Defined Schema Properties

Date and Number Formats

Import Data Records

Import via Copy/Paste

Import From a Dataset Archive

Create Pipeline Configuration File

Assay Publication Pathway

Manage Your New Dataset

Set Up, Design & Copy Assays

Manage Specimens

Import a Specimen Archive

Import Specimens Via Cut/Paste

Set Up Specimen Request Tracking

Approve Specimen Requests

Create Reports And Views

Advanced Views

The Enrollment View

Workbook Reports

Annotated Study Schema

Study User Guide

Site Navigation

Study Navigation

The Study Navigator

Selecting, Sorting & Filtering

Reports and Views

Cohorts

Assays

Dataset Import & Export

Dataset Import

Dataset Export

Specimens

Specimen Shopping Cart

Specimen Reports

Wiki User Guide

Accounts and Permissions

Password Reset & Security

Permissions

Your Display Name

Proteomics

Get Started With CPAS

Explore the MS2 Dashboard

Upload MS2 Data Via the Pipeline

Set Up MS2 Search Engines

Set Up Mascot

Set Up Sequest

Install SequestQueue

Set the LabKey Pipeline Root

Search and Process MS2 Data

Configure Common Parameters

Configure X! Tandem Parameters

Configure Mascot Parameters

Configure Sequest Parameters

Sequest Parameters

MzXML2Search Parameters

Examples of Commonly Modified Parameters

Working with MS2 Runs

Viewing an MS2 Run

Customizing Display Columns

Peptide Columns

Protein Columns

Viewing Peptide Spectra

Viewing Protein Details

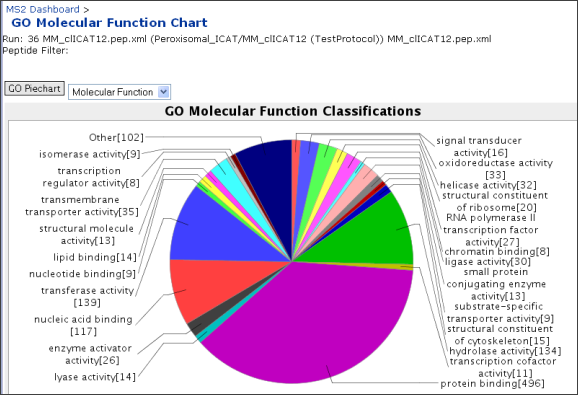

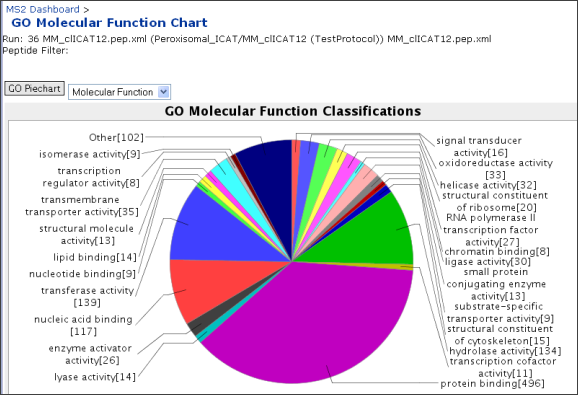

Viewing Gene Ontology Information

Comparing MS2 Runs

Exporting MS2 Runs

Protein Search

Peptide Search

Loading Public Protein Annotation Files

Using Custom Protein Annotations

Using ProteinProphet

Using Quantitation Tools

Experimental Annotations for MS2 Runs

Exploratory Features

caBIG™-certified Remote Access API to LabKey/CPAS

Spectra Counts

Label-Free Quantitation

MS1

MS1 Pipelines

CPAS Team

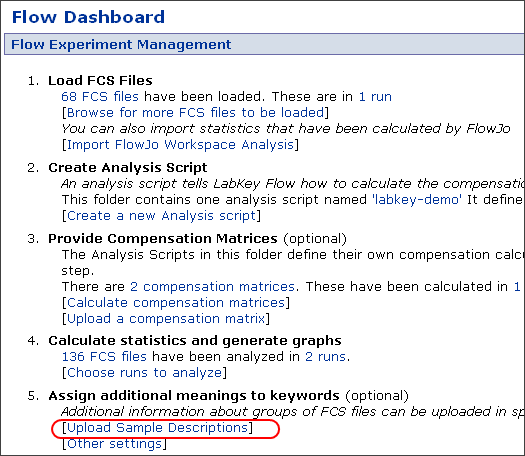

Flow Cytometry

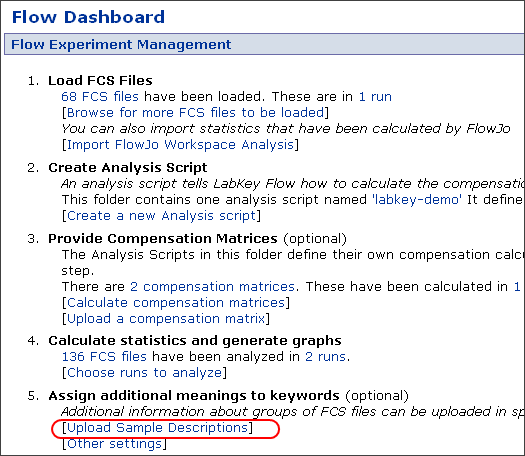

LabKey Flow Overview

Flow Team Members

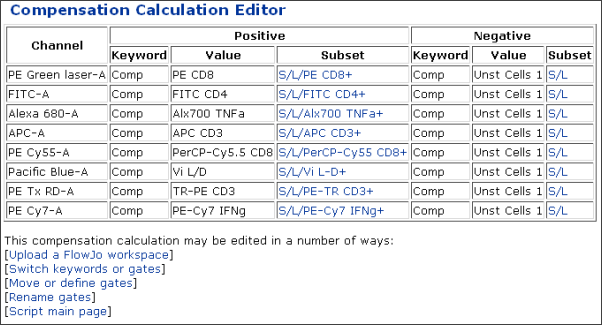

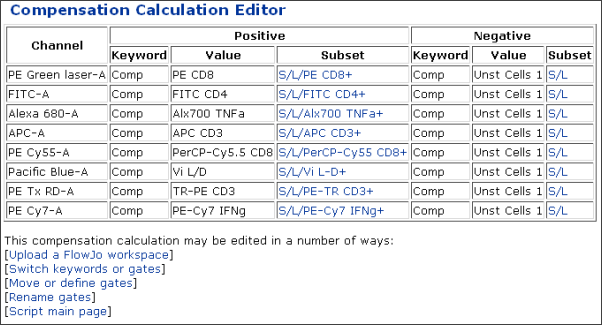

Tutorial: Import a FlowJo Workspace

Install LabKey Server and Obtain Demo Data

Create a Flow Project

Set Up the Data Pipeline and FTP

Place Files on Server

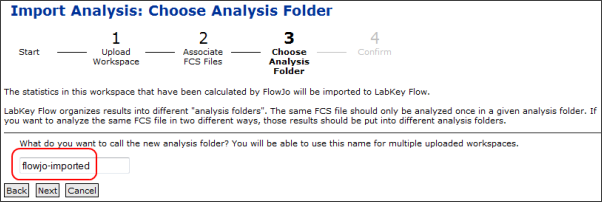

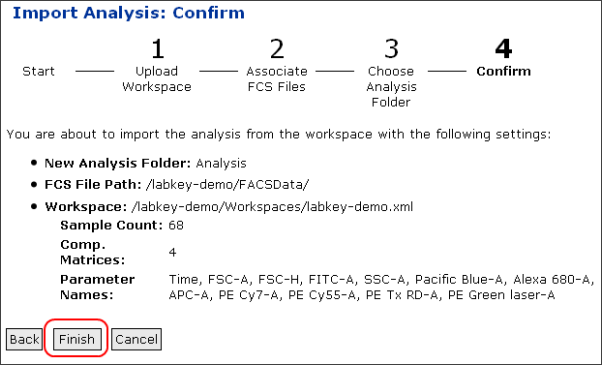

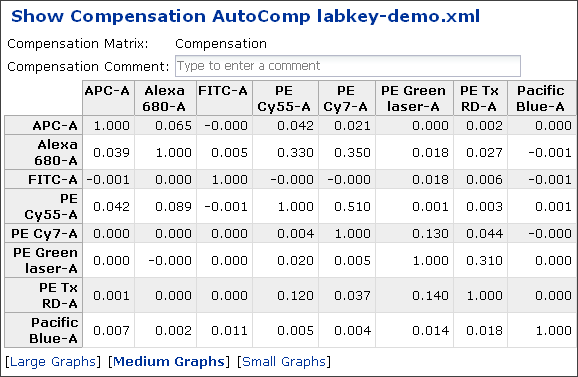

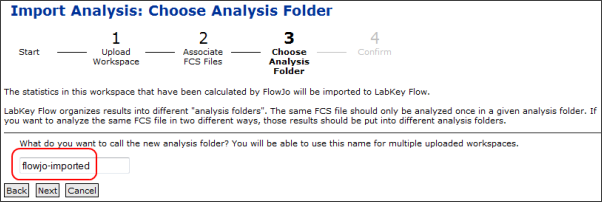

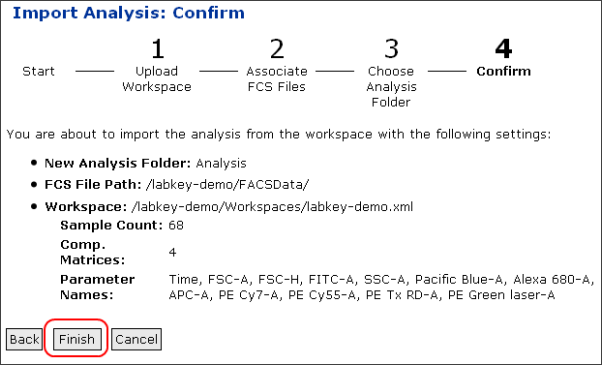

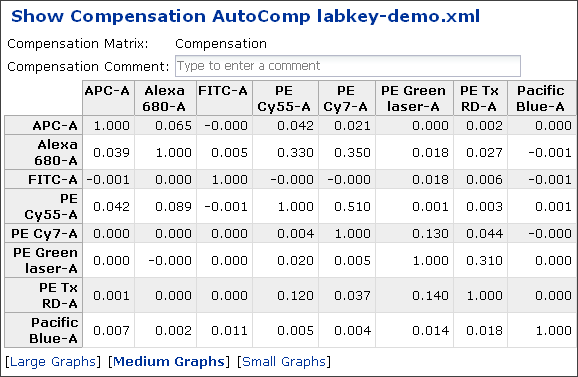

Import a FlowJo Workspace and Analysis

Customize Your View

Examine Graphs

Examine Well Details

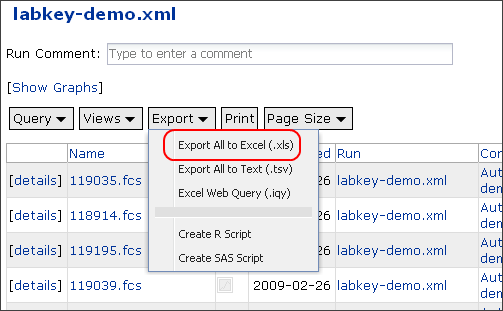

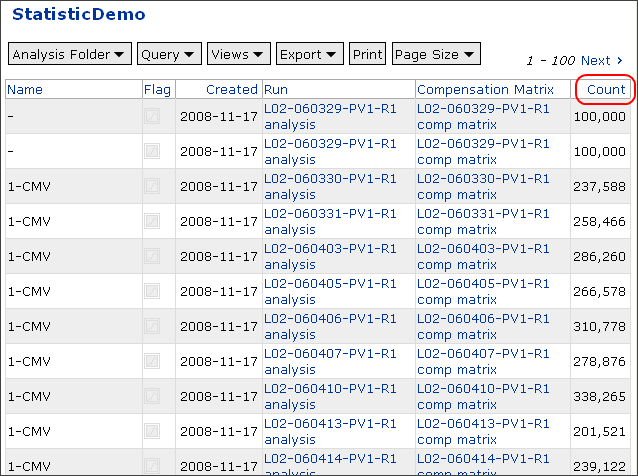

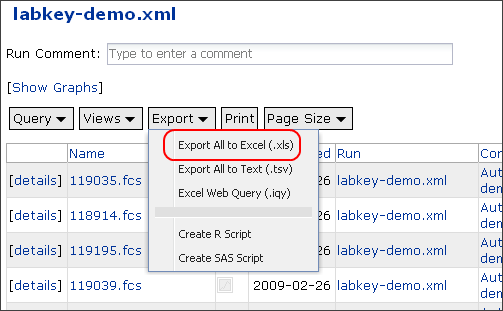

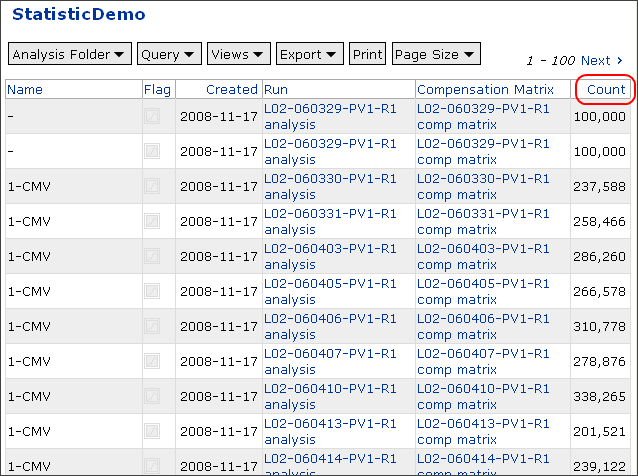

Finalize a Dataset View and Export

Tutorial: Perform a LabKey Analysis

Create Custom Flow Queries

Locate Data Columns of Interest

Add Statistics to FCS Queries

Calculate Suites of Statistics for Every Well

Flow Module Schema

Add Sample Descriptions

Assays

Assay Administrator Guide

Set Up Folder For Assays

Design a New Assay

Property Fields

General Properties

ELISpot Properties

Luminex Properties

Microarray Properties

NAb Properties

Edit Plate Templates

Copy Assay Data To Study

Copy-To-Study History

Tutorial: Import Microarray Data

Install LabKey Server

Create a Microarray Project

Set Up the Data Pipeline and FTP

Assay User Guide

Import Assay Runs

Import General Assays

Import ELISpot Runs

Import Luminex Runs

Luminex Conversions

Import Microarray Runs

Import NAb Runs

Work With Assay Data

Data and Views

Dataset Grid Views

Participant Views

Selecting, Sorting & Filtering

Select Data

Sort Data

Filter Data

Custom Grid Views

Create Custom Grid Views

Select and Order Columns

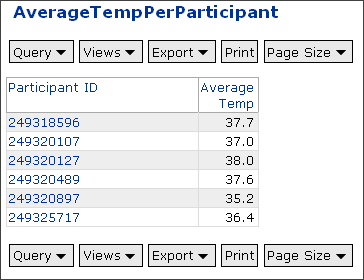

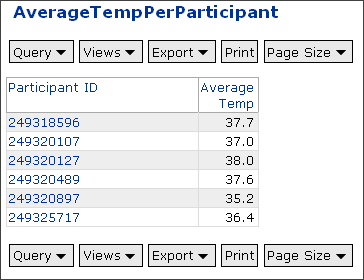

Example: Create a "Joined View" from Multiple Datasets

Pre-Define Filters and Sorts

Save and View Custom Views

Reports and Views

R Views

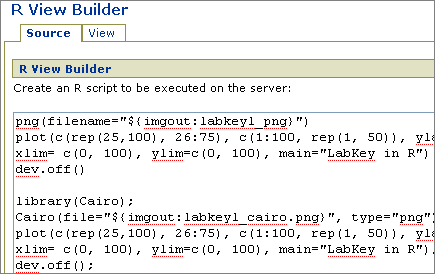

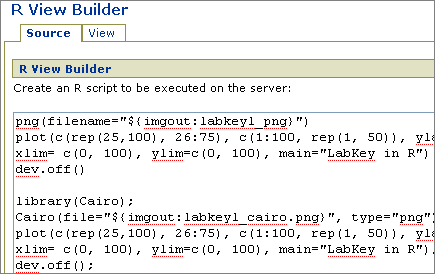

The R View Builder

Author Your First Script

Upload a Sample Dataset

Access Your Dataset

Load Packages

Determine Available Graphing Functions

Graphics File Formats

Use Input/Output Syntax

Work with Saved R Views

Display R View on Portal

Create Advanced Scripts

Means, Regressions and Multi-Panel Plots

Basic Lattice Plots

Participant Charts

User-Defined Functions

R Tutorial Video for v8.1

FAQs for LabKey R

Chart Views

Crosstab Views

Static Reports

Manage Views

Custom SQL Queries

Create a Custom Query

Use the Source Editor

Use the Query Designer

Review Metadata in SQL Source Editor

Display a Query

Add a Calculated Column to a Query

Use GROUP BY and JOIN

Use Cross-Folder Queries

LabKey SQL Reference

Metadata XML

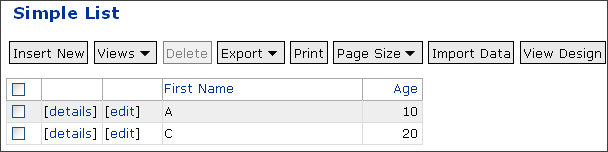

Lists & External Schemas

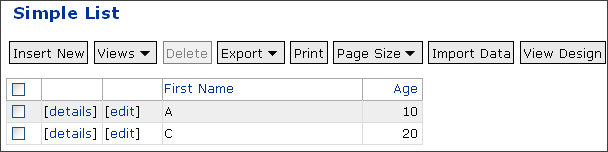

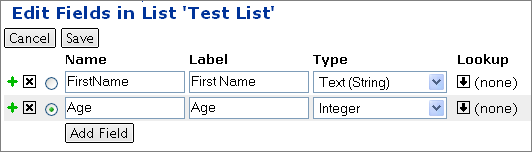

Lists

External Schemas

Search

Files

File Upload and Sharing

Set Up File Sharing

Use File Sharing

Pipeline

Set the LabKey Pipeline Root

Set Up the FTP Server

Upload Pipeline Files via FTP

BioTrue

APIs

Tutorial Video: Building Views and Custom User Interfaces

Client-Side APIs

JavaScript API

Tutorial: JavaScript API

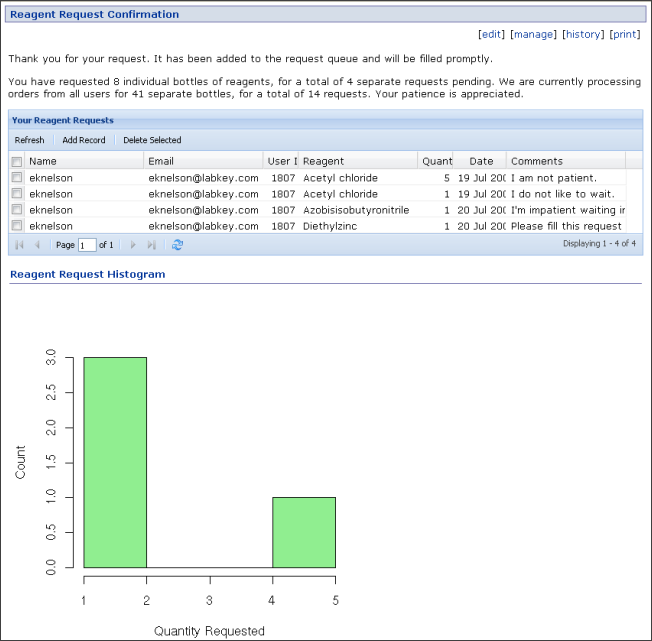

Reagent Request Form

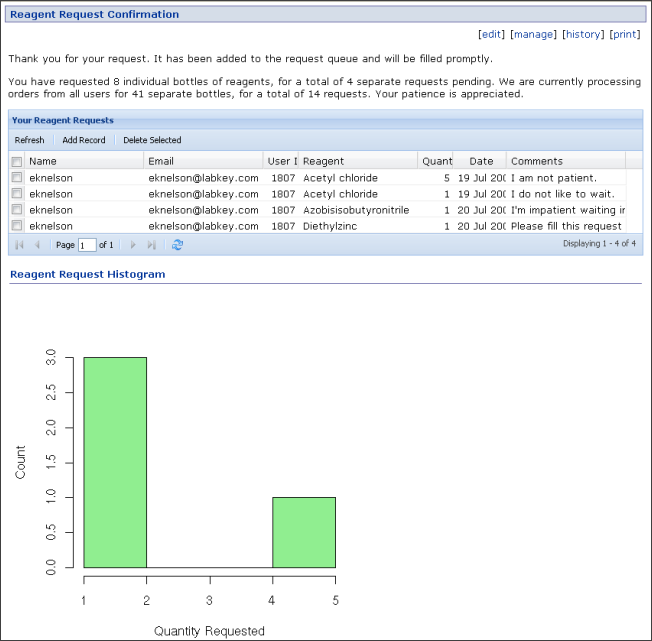

Reagent Request Confirmation Page

Summary Report for Reagent Managers

Licensing for the Ext API

Generate JavaScript

Example: Charts

Generate JSDoc

JavaScript Class List

Java API

Java Class List

R API

SAS API

Setup Steps for SAS

Configure SAS Access From LabKey Server

SAS Macros

SAS Security

SAS Demos

Server-Side APIs

Examples: Controller Actions

Example: Access APIs from Perl

How To Find schemaName, queryName & viewName

Web Part Configuration Properties

Implementing API Actions

Programmatic Quality Control

Using Java for Programmatic QC Scripts

Developer Documentation

Recommended Skill Set

Setting up a Development Machine

Notes on Setting up a Mac for LabKey Development

Machine Security

Enlisting in the Version Control Project

Source Code

Confidential Data

Development Cycle

Project Process

Release Schedule

Issue Tracking

Submitting Contributions

Checking Into the Source Project

Developer Email List

Wiki Documentation Tools

The LabKey Ontology & Query Services

Building Modules

Third-party Modules

Module Architecture

Simplified Modules

Queries, Views and Reports in Modules

Assays defined in Modules

Getting Started with the Demo Module

Creating a New Module

Deprecated Components

The LabKey Server Container

CSS Design Guidelines

Creating Views

Maintaining the Module's Database Schema

Integrating with the Pipeline Module

Integrating with the Experiment Module

GWT Integration

GWT Remote Services

UI Design Patterns

Feature Owners

LabKey Server and the Firebug add-on for Firefox

Start Here

Get Started With LabKey Server 9.1

- Administrators + Potential Adopters

- Users

- Developers

Version 9.1 Improvements

Training Materials

Still Have Questions?

- Search the documentation. Use the Search box in the upper right corner of this page.

- Search the community forums. Each forum has a search box on its upper right side.

- Obtain commercial support. LabKey Corporation provides consulting services to users who need assistance installing, enhancing and maintaining the LabKey Server platform in a production setting. Email info@labkey.com for further information.

- Review the documentation archive. See Documentation for LabKey Versions 1.1-8.3.

Future Directions for LabKey Server

Install the Server

This section explains how to install or upgrade LabKey Server.

Topics:

Access the Server

Log In

Most LabKey projects are secured to protect the data they contain, so you will need to log in to access your projects. Depending on how LabKey is set up for your organization, you may be able to log in using your network user name and password, or you may have to request a LabKey account. If you're not sure, ask your administrator, who can create an account for you if you don't already have one and grant you project permissions as needed.

Once you've logged in, you can edit your account information by clicking on the My Account link in the upper right corner of any page.

Supported Browsers

LabKey is a web application that runs in your web browser. To access LabKey, you must use a web browser that LabKey supports.

- On Windows, you can use either Microsoft Internet Explorer or Mozilla Firefox.

- On Unix-based systems, use Firefox. The older Mozilla browser may also work, but it is not technically supported for use with LabKey.

- On the Macintosh, you must use Firefox to access LabKey. Other popular Mac browsers like Safari and Internet Explorer have serious problems with JavaScript, which is required for some key features of LabKey.

Set Up a Folder & its Tools

Learn User Basics

Prerequisites: Before you use LabKey Server, your Admin must install your server and set up your workspace.

Basic Activities

Specialized Activities

Read more about the LabKey Applications you expect to use.

Explore LabKey Modules. LabKey Modules can be added to LabKey Applications to extend their functionality. A few of the modules you may use:

Advanced Activities

Learn Admin Basics

Overview


Administrative features provided by LabKey Server include:

- Project organization, using a familiar folder hierarchy

- Role-based security and user authentication

- Dynamic web site management

- Backup and maintenance tools

Documentation Topics

Set Up Your Server

Maintain Your Server

Extend LabKey Server

Learn What's New in 9.1

Version 9.1 represents an important step forward in the ongoing evolution of the open source LabKey Server. Enhancements in this release are designed to:

- Support leading medical research institutions using the system as a data integration platform to reduce the time it takes for laboratory discoveries to become treatments for patients

- Provide rapidly deployable software infrastructure for communities pursuing collaborative clinical research efforts

- Deliver a secure data repository for managing and sharing laboratory data with colleagues, such as for proteomics, microarray, flow cytometry or other assay-based data.

New capabilities introduced in this release are summarized below.

For a full query listing all improvements made in 9.1, see Items Completed in 9.1. Refer to 9.1 Upgrade Tips to work around minor behavior changes associated with upgrading from v8.3 to v9.1.

Download LabKey Server v9.1.

Quality Control

- Field-level quality control. Data managers can now set and display the quality control (QC) status of individual data fields. Data coming in via text files can contain the special symbols Q and N in any column that has been set to allow quality control markers. “Q” indicates a QC has been applied to the field, “N” indicates the data will not be provided (even if it was officially required).

- Programmatic quality control for uploaded data. Programmatic quality control scripts (written in R, Perl, or another language of the developer's choice) can now be run at data upload time. This allows a lab to perform arbitrary quality validation prior to bringing data into the database, ensuring that all uploaded data meets certain initial quality criteria. Note that non-programmatic quality control remains available: assay designs can be configured to perform basic checks for data types, required values, regular expressions, and ranges in uploaded data.

- Default values for fields in assays, lists and datasets. Dataset schemas can now be set up to automatically supply default values when imported data tables have missing values. Each default value can be the last value entered, a fixed value or an editable default.

- Display of assay status. Assay working folders now clearly display how many samples/runs have been processed for each study.

- Improved study integration. Study folders provide links to view source assay data and designs, as well as links to directly upload data via appropriate assay pipelines.

- Hiding of unnecessary "General Purpose" assay details. Previously, data for this type of assay had a [details] link displayed in the copied dataset. This link is now suppressed because no additional information is available in this case.

- Easier data upload. Previously, in order to add data to an assay, a user needed to know the destination folder. Now users are presented with a list of appropriate folders directly from the upload button either in the assay runs list or from the dataset.

- Improved copy to study process. It is now easier to find and fix incorrect run data when copying data to a study. Improvements:

- Bad runs can now be skipped.

- The run details page now provides a link so that run data can be examined.

- There is now an option to re-run an assay run, pre-populating all fields, including the data file, with values from the previous run. On successful import, the previous run will be deleted.

- Protein Search Allows Peptide Filtering. When performing a protein search, you can now filter to show only protein groups that have a peptide that meets a PeptideProphet probability cutoff, or specify an arbitrarily complex peptide filter.

- Auto-derivation of samples during sample set import. Automated creation of derivation history for newly imported samples eases tracking of sample associations and history. Sample sets now support an optional column that provides parent sample information. At import time, the parent samples listed in that column are identified within LabKey Server and associations between samples are created automatically.

- Microarray bulk upload.

- When importing MageML files into LabKey Server, users can now include a TSV file that supplies run-level metadata about the runs that produced the files. This allows users to reuse the TSV metadata instead of manually re-entering it.

- The upload process leverages the Data Pipeline to operate on a single directory at a time, which may contain many different MageML files. LabKey Server automatically matches MageML files to the correct metadata based on barcode value.

- An Excel template is provided for each assay design to make it easier to fill out the necessary information.

- Microarray copy-to-study. Microarray assay data can now be copied to studies, where it will appear as an assay-backed dataset.

- Support for saving state within an assay batch/run upload. Previously, once you started an upload of assay data, you had to finish it in a single session. Now you can start by uploading an assay batch, then upload the run data later.

- NAb improvements:

- Auto-complete during NAb upload. This is available for specimen, visit, and participant IDs.

- Re-run of NAb runs. After you have uploaded a NAb run and you wish to make an edit, you can redo the upload process with all the information already pre-filled, ready for editing.
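Field-level QC markers and programmatic QC scripts can be pictured together with a small sketch. The Python below is a hypothetical validation pass, not LabKey's actual QC script interface: it assumes a tab-separated upload whose Weight column may contain the markers Q or N, and it applies the kind of required-value, regular-expression and range checks that assay designs support. The column names, the nine-digit ID pattern and the numeric range are illustrative assumptions.

```python
import csv
import io
import re

# Markers described in the 9.1 notes: Q = QC applied, N = data will not be provided.
QC_MARKERS = {"Q", "N"}

# Hypothetical rules for an illustrative upload; not taken from any real assay design.
PARTICIPANT_ID = re.compile(r"^\d{9}$")
WEIGHT_RANGE = (20.0, 300.0)


def validate(tsv_text):
    """Return a list of (row_number, message) problems found in a TSV upload."""
    problems = []
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    for row_num, row in enumerate(reader, start=1):
        pid = (row.get("ParticipantId") or "").strip()
        if not pid:
            problems.append((row_num, "ParticipantId is required"))
        elif not PARTICIPANT_ID.match(pid):
            problems.append((row_num, f"ParticipantId {pid!r} has the wrong format"))

        weight = (row.get("Weight") or "").strip()
        if weight in QC_MARKERS:
            continue  # a QC marker stands in for the value, so skip type and range checks
        try:
            value = float(weight)
        except ValueError:
            problems.append((row_num, f"Weight {weight!r} is not a number"))
            continue
        low, high = WEIGHT_RANGE
        if not low <= value <= high:
            problems.append((row_num, f"Weight {value} is outside {low}-{high}"))
    return problems
```

A real QC script would report these problems back so the upload can be rejected; here they are simply returned as a list.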

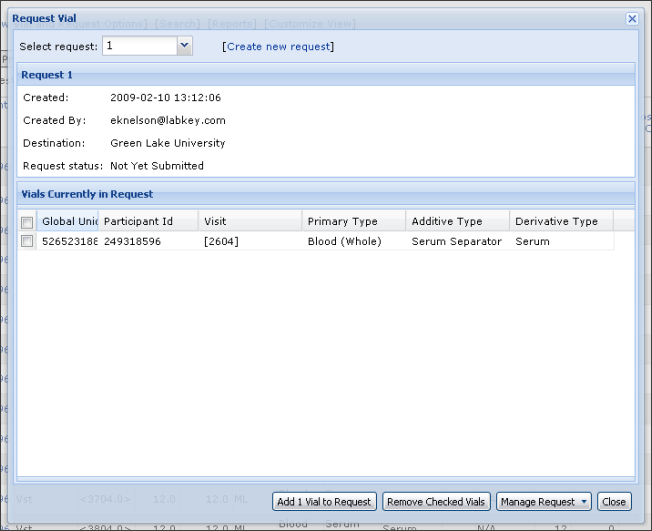

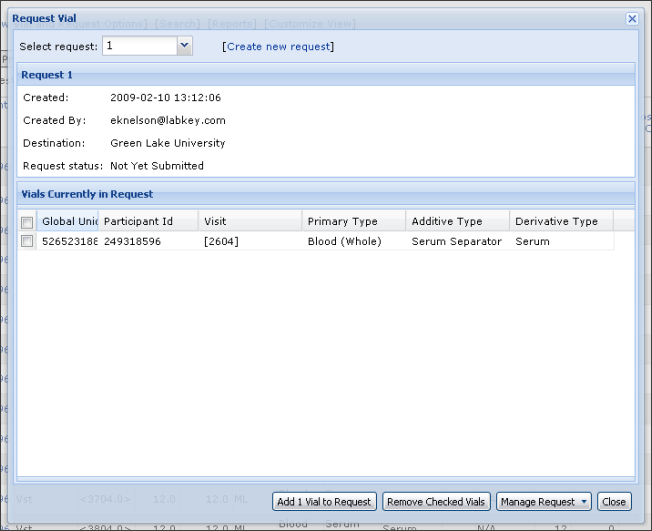
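The auto-derivation of samples during sample set import described above amounts to resolving a parent-sample column against samples the server already knows about. The Python below is a hypothetical sketch of that idea only: the Name and Parent column names, the comma-separated parent format and the in-memory registry are assumptions, not LabKey's sample set schema.

```python
from dataclasses import dataclass, field


@dataclass
class Sample:
    name: str
    parents: list = field(default_factory=list)  # names of parent samples


def import_samples(existing, rows, parent_column="Parent"):
    """Add rows to `existing` (name -> Sample), linking each to its listed parents.

    Parent names may be comma-separated. Unknown parents are reported rather than
    silently dropped, so the caller can decide whether to reject the import.
    """
    unresolved = []
    # Register all incoming names first so rows may refer to siblings in the same file.
    for row in rows:
        existing.setdefault(row["Name"], Sample(row["Name"]))
    for row in rows:
        sample = existing[row["Name"]]
        raw = row.get(parent_column) or ""
        for parent in (p.strip() for p in raw.split(",") if p.strip()):
            if parent in existing:
                sample.parents.append(parent)
            else:
                unresolved.append((sample.name, parent))
    return unresolved
```

Registering every incoming sample before linking lets a file list a child above its parent; whether the real importer allows that is not stated in these notes.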

- Specimen shopping cart. When compiling a specimen request, you can now perform a specimen search once, then build a specimen request from items listed in that search. You can add individual vials one-at-a-time using the "shopping cart" icon next to each vial. Alternatively, you can add several vials at once using the checkboxes next to each vial and the actions provided by the "Request Options" drop-down menu. After adding vials to a request of your choice, you return to your specimen search so that you can add more.

- Auditing for specimen comments. Specimen comments are now logged, so they can be audited.

- Specimen reports can now be based on filtered vial views. This increases the power of reporting features.

- Enhanced interface for managing views. The same interface is now used to manage views within a study and outside of a study.

- Container filters for grid views. You can now choose whether the list of "Views" for a data grid includes views created within the current folder or both the current folder and subfolders.

- Ability to clear individual columns from sorts and filters for grid views. The "Clear Sort" and "Clear Filter" menu items are available in the sort/filter drop-down menu that appears when you click on a grid view column header. For example, the "Clear Sort" menu item is enabled when the given column is included in the current sort. Selecting that item will remove just that column from the list of sorted columns, leaving the others intact.

- More detailed information for the "Remember current filter" choice on the Customize View page. When you customize a grid view that already contains sorts and filters, these sorts and filters can be retained with that custom view, along with any sorts and filters added during customization. The UI now explicitly lists the pre-existing sorts and filters that can be retained.

- Stand-alone R views. You do not need to associate every R view with a particular grid view. R views can be created independently of a particular dataset through the "Manage Views" page.

- Improved identification of views displayed in the Reports web part. The Reports web part can now accept a string-based form of report ID (in addition to the normal integer report ID), so you can refer to a report defined within a module.

- Ability to download a single FCS file. A download link is now available on the FCS File Details page.

- New Documentation: Demo, Tutorial and additional Documentation

- Richer filter UI for "background column and value." Available in the ICS Metadata editor. This provides support for "IN" and multiple clauses. Example: Stim IN ('Neg Cont', 'negctrl') AND CD4_Count > 10000 AND CD8_Count > 10000

- Performance improvements. LabKey Server can now load larger FlowJo workspaces than was previously possible.

- UI improvements for FlowJo import. Repeated uploading of FlowJo workspaces is now simpler.

- New SAS Client API. The LabKey Client API Library for SAS makes it easy for SAS users to load live data from a LabKey Server into a native SAS dataset for analysis, provided they have permissions to read those data. It also enables SAS users to insert, update, and delete records stored on a LabKey Server, provided they have appropriate permissions to do so. All requests to the LabKey Server are performed under the user's account profile, with all proper security enforced on the server. User credentials are obtained from a separate location than the running SAS program so that SAS programs can be shared without compromising security.

- Additions to the Java, JavaScript, R and SAS Client Libraries:

- Additions to the JavaScript API:

- Callback to indicate that a web part has loaded. Provides a callback after a LABKEY.WebPart has finished rendering.

- Information on the current user (LABKEY.user). The LABKEY.Security.currentUser API exposes limited information on the current user.

- API/Ext-based management of specimen requests. See: LABKEY.Specimen.

- Sorting and filtering for NAb run data retrieved via the LabKey Client APIs. For further information, see: LABKEY.Assay#getNAbRuns

- Ability to export tables generated through the client API to Excel. This API takes a JavaScript object in the same format as that returned from the Excel->JSON call and pops up a download dialog on the client. See LABKEY.Utils#convertToExcel.

- Improvements to the Ext grid.

- Quality control information available.

- Performance improvements for lookup columns.

- Documentation for the R Client API is available on CRAN.

- File-based modules. File-based modules provide a simplified way to include R reports, custom queries, custom query views, HTML views, and web parts in your modules. You can now specify a custom query view definition in a file in a module and it will appear alongside the other grid views for the given schema/query. These resources can be included either in a simple module with no Java code whatsoever, or in Java-based modules. They can be delivered as a unit that can be easily added to an existing LabKey Server installation. Documentation: Overview of Simplified Modules and Queries, Views and Reports in Modules.

- File-based assays. A developer can now create a new assay type with a custom schema and custom views without having to be a Java developer. A file-based assay consists of an assay config file, a set of domain descriptions, and view HTML files. The assay is added to a module by placing it in an assay directory at the top level of the module. For information on the applicable API, see: LABKEY.Experiment#saveBatch.

- Support for additional SQL syntax and functions:

- UNION and UNION ALL

- BETWEEN

- TIMESTAMPDIFF

- Cross-container queries. You can identify the folder containing the data of interest during specification of the schema. Example: Project."studies/001/".study.demographics.

- Query renaming. You can now change the name of a query from the schema listing page via the “Edit Properties” link.

- Comments. Comments that use the standard SQL syntax ("--") can be included in queries.

- Metadata editor for built-in tables. This editor allows customization of the pre-defined tables and queries provided by LabKey Server. Users can change number or date formats, add lookups to join to other data (or query results), and change the names and descriptions of columns. The metadata editor shows the metadata associated with a table of interest and allows users to override default values. Edits are saved in the same XML format used to describe custom queries.

- Version comparison tool for wiki pages. Differences between older and newer versions of wiki pages can now be easily visualized through the "History"->"Compare Versioned Content"->"Compare With" pathway.

- Attachments can now be downloaded from the "Edit" page. Also, if an attachment is an image, clicking on it displays it in a new browser tab.

- Tomcat 5.5.27 is now supported.

- Upgrade to PostgreSQL 8.3 is now strongly encouraged. If you are running PostgreSQL 8.2.x or earlier, you will now see a yellow warning message in the header when logged in as a system admin. Upgrade to PostgreSQL 8.3 to eliminate the message; the message can also be hidden. See the upgrade documentation.
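The background filter example from the flow improvements, Stim IN ('Neg Cont', 'negctrl') AND CD4_Count > 10000 AND CD8_Count > 10000, can be read as a predicate applied to each well. The Python sketch below hand-codes that one expression to show how the IN clause and the AND-combined comparisons select background wells. It does not parse the ICS Metadata editor's filter syntax, and the well dictionaries are made-up data.

```python
def is_background_well(well):
    """Hand-coded form of:
    Stim IN ('Neg Cont', 'negctrl') AND CD4_Count > 10000 AND CD8_Count > 10000
    """
    return (
        well.get("Stim") in ("Neg Cont", "negctrl")  # IN: match any listed value
        and well.get("CD4_Count", 0) > 10000         # every AND clause must hold
        and well.get("CD8_Count", 0) > 10000
    )


wells = [
    {"Name": "A1", "Stim": "Neg Cont", "CD4_Count": 15000, "CD8_Count": 12000},
    {"Name": "A2", "Stim": "negctrl", "CD4_Count": 9000, "CD8_Count": 20000},
    {"Name": "A3", "Stim": "SEB", "CD4_Count": 30000, "CD8_Count": 30000},
]
background = [w["Name"] for w in wells if is_background_well(w)]
```

Only the first well passes: the second is a negative control with too few CD4 events, and the third is not a negative control at all.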

9.1 Upgrade Tips

PostgreSQL 8.3 Upgrade Tip for Custom SQL Queries

Problem. After upgrading to PostgreSQL 8.3, some custom SQL queries may generate errors instead of running. An example of an error message you might observe:

Query 'Physical Exam Query' has errors

java.sql.SQLException: ERROR: operator does not exist: character varying = integer

Solutions: Two Options.

1. Use the Query Designer. If your query is simple enough for viewing in the Query Designer:

- View your query in the Query Designer.

- Save your query. The Query Designer will make the adjustments necessary for compatibility with PostgreSQL 8.3 automatically.

- Your query will now run instead of generating an error message.

2. Use the Source Editor. If your query is too complicated for viewing in the Query Designer:

- Open it in the Source Editor.

- In the query editor, add single quotes around numbers so that they will be saved appropriately. For example, change

WHERE "Physical Exam".ParticipantId.ParticipantId=249318596

to:

WHERE "Physical Exam".ParticipantId.ParticipantId='249318596'

- Your query will now run instead of generating an error message.

Cause. As of LabKey Server v9.1, the Query Designer uses column types in deciding how to save comparison values. In versions of LabKey Server pre-dating v9.1, an entry such as 1234 became 1234 regardless of whether the column type was string or numeric. In LabKey Server v9.1, the Query Designer saves 1234 as '1234' if appropriate. Older queries need to be resaved or edited manually to make this change occur.
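The cause above comes down to formatting a comparison value according to the column's type. This Python function is a simplified, hypothetical illustration of that rule, not the Query Designer's actual code. String-typed columns get a single-quoted literal (with embedded quotes doubled, as standard SQL requires), while numeric columns keep the bare number. The type names in NUMERIC_TYPES are assumptions.

```python
# Hypothetical set of column type names treated as numeric.
NUMERIC_TYPES = ("integer", "double", "decimal")


def sql_literal(value, column_type):
    """Format a comparison value for a WHERE clause based on the column's type."""
    if column_type in NUMERIC_TYPES:
        float(value)  # raises ValueError if the text is not numeric
        return str(value)
    # Treat everything else as text: quote it and double any embedded single quotes.
    return "'" + str(value).replace("'", "''") + "'"


def where_equals(column, value, column_type):
    return f"WHERE {column}={sql_literal(value, column_type)}"
```

Calling where_equals('"Physical Exam".ParticipantId.ParticipantId', "249318596", "varchar") reproduces the corrected clause from the Source Editor fix, because the ParticipantId column in that example is a string column.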

Learn What's New in 9.2

Overview

LabKey Server v9.2 has not yet been released. This feature list provides a preview of the release.

Version 9.2 represents an important step forward in the ongoing evolution of the open source LabKey Server. Enhancements in this release are designed to:

- Support leading medical research institutions using the system as a data integration platform to reduce the time it takes for laboratory discoveries to become treatments for patients

- Provide rapidly deployable software infrastructure for communities pursuing collaborative clinical research efforts

- Deliver a secure data repository for managing and sharing laboratory data with colleagues, such as for proteomics, microarray, flow cytometry or other assay-based data.

New capabilities introduced in this release are summarized below.

For an exhaustive list of all improvements made in 9.2, see Items Completed in 9.2. Refer to the 9.2 Upgrade Tips to quickly identify behavioral changes associated with upgrading from v9.1 to v9.2.

After 9.2 is released: Download LabKey Server v9.2.

User administration and security

Finer-grained permissions settings for administrators

- Tighter security. Admins can now receive permissions tightly tailored to the subset of admin functions that they will perform. This allows site admins to strengthen security by reducing the number of people who possess broad admin rights. For example, "Specimen Requesters" can receive sufficient permissions to request specimens without being granted folder administration privileges.

- New roles. LabKey Server v9.2 includes four entirely new roles: "Site Admin," "Assay Designer," "Specimen Coordinator" and "Specimen Requester." This spreadsheet shows a full list of the new admin roles and the permissions they hold. It also shows roles that may be added in future releases of LabKey Server.

Improved permissions management UI

- Brief list of roles instead of long list of groups. Previously, the permissions management interface displayed a list of groups and allowed each group to be assigned a role. This list became hard to manage when the list of groups grew long. Now security roles are listed instead of groups, so the list is brief. Groups can be assigned to these listed roles or moved between roles.

- Rapid access to users, groups and permission settings. Clicking on a group or user brings up a floating window that shows the assigned roles of that group or user across all folders. You can also view the members of multiple groups by switching to the groups tab.

Assignment of individual users to roles

- Now individual users, not just groups, can be assigned to security roles. This allows admins to avoid creating groups with single members in order to customize permissions.
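With both users and groups assignable to roles, it is natural to picture a user's roles in a folder as the union of the roles assigned to the user directly and those assigned to any group the user belongs to. The sketch below illustrates that union with hypothetical in-memory data structures. It does not model LabKey's folder inheritance or its real security API, and the role names in the usage data are only examples.

```python
def effective_roles(user, folder, assignments, memberships):
    """Return the set of roles `user` holds in `folder`.

    assignments: {folder: {principal: set_of_roles}}, where a principal is a
                 user or group name (hypothetical structure).
    memberships: {group: set_of_user_names}.
    """
    folder_roles = assignments.get(folder, {})
    roles = set(folder_roles.get(user, set()))  # roles granted to the user directly
    for group, members in memberships.items():
        if user in members:
            roles |= folder_roles.get(group, set())  # roles granted via a group
    return roles
```

A direct assignment such as giving one person "Specimen Requester" removes the need for a single-member group whose only purpose is to hold that role.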

Site Users list is a grid view

- This allows customization and export of the view.

Custom permission reporting

- Administrators can create custom lists to store metadata about groups by joining a list with groups data. Any number of fields can be added to the information about each user or group. These lists can be joined to:

- Built-in information about the user (name, email, etc.)

- Built-in information about the group (group, group members)

- The results can also be combined with built-in information about roles assigned to each user & group in each container. From this information a variety of reports can be created, including group membership for every user and permissions for every group in every container.

- These reports can be generated on the client and exported as Excel spreadsheets.
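The permission reports above are essentially a join between a custom list keyed by group and the built-in group membership and user data. The Python sketch below shows the shape of a "group membership for every user" report using hypothetical field names (Group, Purpose, Email, DisplayName) and plain dictionaries in place of LabKey's actual tables.

```python
def membership_report(group_list, memberships, users):
    """Join custom group metadata with group membership and user details.

    group_list:  rows from a custom list, e.g. {"Group": ..., "Purpose": ...}
    memberships: {group_name: [user_email, ...]}
    users:       {user_email: {"DisplayName": ...}}
    """
    purpose_by_group = {row["Group"]: row.get("Purpose", "") for row in group_list}
    report = []
    for group, emails in sorted(memberships.items()):
        for email in sorted(emails):
            report.append({
                "Email": email,
                "DisplayName": users.get(email, {}).get("DisplayName", ""),
                "Group": group,
                "Purpose": purpose_by_group.get(group, ""),  # empty if no list row
            })
    return report
```

Groups without a row in the custom list still appear, with an empty Purpose, so the join behaves like a left join from membership to list metadata.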

Improved UI for Deleting, Deactivating and Re-activating Users

- Deactivate/Re-activate buttons are now on the user details page as well as the user list. When clicked on the user list, a confirmation page is shown listing all the selected users (users that are already inactive or active are filtered out if the action is deactivate or re-activate, respectively).

- Clicking Delete on the user list now takes you to a confirmation page much like the deactivate/re-activate users command. If at least one of the selected users is active, it will also include a note and button that encourages the admin to deactivate the user(s) rather than permanently delete them.
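The confirmation pages described above filter the selection before acting on it. The Python sketch below mirrors that described behavior with a hypothetical user record shape (email, active) and hypothetical action names. It drops users already in the target state and, for deletion, flags whether any selected user is still active so the page can suggest deactivation instead. It assumes the delete page lists every selected user, which the notes imply but do not state outright.

```python
def plan_user_action(selected, action):
    """Return (users_to_list, suggest_deactivate) for a confirmation page.

    action is one of "deactivate", "reactivate" or "delete" (hypothetical names).
    """
    if action == "deactivate":
        return [u for u in selected if u["active"]], False
    if action == "reactivate":
        return [u for u in selected if not u["active"]], False
    if action == "delete":
        # Deletion lists everyone, but nudges the admin toward deactivation
        # when at least one selected user is still active.
        return list(selected), any(u["active"] for u in selected)
    raise ValueError(f"unknown action: {action}")
```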

Study

Study export, import and reload

- Studies can be reloaded onto the same server or onto a different LabKey Server. This makes it easy to transfer a study from a staging environment to a live LabKey platform.

- You can populate a brand new study with the exported contents of an existing study. For similar groups of studies, this helps you leverage your study setup efforts.

- Studies can be set up to reload data from a data depot nightly. This allows regular transfer of updates from a remote, master database to a local LabKey Server, keeping the local server up-to-date with the master database automatically.

Customizable "Missing Value" indicators

- Field-Level Missing Value (MV) Indicators allow individual data fields to be flagged. Previously, only two MV values were allowed (N and Q). Administrators can now customize which MV values are available. A site administrator can customize the MV values at the site level and project administrators can customize the MV values at the folder level. If no custom MV values are set for a folder, they will be inherited from their parent folder. If no custom values are set in any parent folders, then the MV values will be read from the server configuration.

- MV value customization consists of creating or deleting MV values, plus editing their descriptions.

- A new API allows programmatic configuration of MV values for a folder. This allows study import/export to include MV values in its data and metadata.
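The inheritance order for MV values (folder, then parent folders, then the server configuration) can be sketched as a walk up the folder tree. This is a hypothetical Python model: the `Folder` class, `effective_mv_values`, and the placeholder descriptions for N and Q are assumptions, not LabKey's implementation or its actual default text.

```python
# Placeholder server-level defaults; N and Q were the only MV values
# available before 9.2. The descriptions are placeholders, not LabKey's text.
SERVER_MV_VALUES = {"N": "<description of N>", "Q": "<description of Q>"}


class Folder:
    def __init__(self, name, parent=None, mv_values=None):
        self.name = name
        self.parent = parent
        # None means no custom MV values are set here, so they are inherited.
        self.mv_values = mv_values


def effective_mv_values(folder):
    """Nearest custom MV values up the folder tree, else the server defaults."""
    node = folder
    while node is not None:
        if node.mv_values is not None:
            return node.mv_values
        node = node.parent
    return SERVER_MV_VALUES


root = Folder("home")
project = Folder("ProjectA", parent=root,
                 mv_values={"N": "Not collected", "X": "Excluded"})
subfolder = Folder("Assays", parent=project)
print(effective_mv_values(subfolder))                  # inherited from ProjectA
print(effective_mv_values(Folder("B", parent=root)))   # server defaults
```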

"Missing Value" user interface improvements

- MV values are now displayed with a pop-up and an MV indicator on an item’s detail page.

- When inserting or updating an item with an MV-enabled field, possible MV values are now offered in a drop-down, along with the ability to set a raw value for the field. Currently a user is only able to specify one or the other on the update page.

Specimens

Import of specimen data allowed before completion of quality control (QC)

- Specimen import is now more lenient in the conflicts it allows in imported specimen data. Previously, import of the entire specimen archive was disallowed if conflicts were detected between transaction records for any individual vial. In 9.2, all fields with conflicts between vials are marked "NULL" and the upload is allowed to complete.

- Use a saved, custom view that filters for vials with the "Quality Control Flag" marked "True" in order to identify and manage vials that imported with conflicts.

Visual flagging of all questionable vials and primary specimens

- Vial events with conflicting information are flagged. Conflicts are differentiated by the presence of an "unknown" value for the conflicting columns, plus color highlighting. For example, you would see a flag when an imported specimen's globalUniqueID is associated with more than one primary type, as could occur if a clinic and repository entered different vial information pre- and post-shipment.

- Vial events that indicate a single vial is simultaneously at multiple locations are flagged. This can occur in normal operations when an information feed from a single location is delayed, but in other cases may indicate an erroneous or reused globalUniqueID on a vial.

- Vials or primary specimens that meet user-specified protocol-specific criteria are flagged. Examples of QC problems that could be detected with this method include:

- A saliva specimen present in a protocol that only collects blood (indicating a possibly incorrect protocol or primary type).

- Primary specimen aliquoted into an unexpectedly large number of vials, based on protocol expectations for specimen volume (indicating a possibly incorrect participantID, visit, or type for one or more subsets of vials).
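To make the flagging criteria above concrete, here is a hypothetical Python sketch of two checks: protocol-specific rules (allowed primary types and a maximum number of aliquots per primary specimen) and vials reported at more than one location on the same date. The rule table, record keys, and thresholds are assumptions for illustration; LabKey's own criteria are user-specified and its data model differs.

```python
from collections import defaultdict

# Hypothetical protocol rules; real criteria are user-specified per protocol.
PROTOCOL_RULES = {
    "BloodOnly": {"allowed_primary_types": {"Blood"}, "max_vials_per_specimen": 10},
}


def protocol_flags(vials, protocol):
    """Map globalUniqueId -> reasons, for vials that break the protocol rules.

    Each vial is a dict with hypothetical keys globalUniqueId, specimenId and
    primaryType.
    """
    rules = PROTOCOL_RULES[protocol]
    flags = defaultdict(list)
    vials_per_specimen = defaultdict(list)
    for v in vials:
        vials_per_specimen[v["specimenId"]].append(v["globalUniqueId"])
        if v["primaryType"] not in rules["allowed_primary_types"]:
            flags[v["globalUniqueId"]].append("unexpected primary type")
    for specimen_id, vial_ids in vials_per_specimen.items():
        if len(vial_ids) > rules["max_vials_per_specimen"]:
            for vial_id in vial_ids:
                flags[vial_id].append(f"too many aliquots for {specimen_id}")
    return dict(flags)


def multiple_locations(events):
    """Vials reported at more than one location on the same date.

    events: (globalUniqueId, date, location) tuples.
    """
    locations = defaultdict(set)
    for vial_id, date, location in events:
        locations[(vial_id, date)].add(location)
    return sorted({vial_id for (vial_id, _), locs in locations.items() if len(locs) > 1})
```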

Built-in report for mismatched specimens

- The new "specimencheck" module identifies mismatched specimens and displays them in a grid view. It identifies specimens whose participantID, sequenceNum and/or visit dates fail to match, then produces a report that can be used to perform quality control on these specimens. For developers, the "specimencheck" module also provides an example of a simple file-based module.

Manual addition/removal of QC flags

- This allows specimen managers to indicate that a particular quality control problem has been investigated and resolved without modification of the underlying specimen data.

- A specimen manager can also manually flag vials as questionable even if they do not meet any of the previously defined criteria.

- Records of manual flagging/unflagging are preserved over specimen imports, in the same manner as specimen comments.

Blank columns eliminated from Excel specimen reports

- When exported to Excel, individual worksheets of specimen reports could previously include blank columns, because columns were included for all visits that had specimens of any kind, rather than for just those visits with specimens matching the current worksheet’s filter. Exported Excel files now display a minimal set of visit columns per report worksheet.
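The difference between the old and new column selection can be shown in a few lines of Python. This is an illustrative sketch with hypothetical record keys (`visit`, `primaryType`) and a worksheet filter passed in as a predicate; it is not LabKey's export code.

```python
def visit_columns_old(specimens):
    """Earlier behavior: a column for every visit with any specimens."""
    return sorted({s["visit"] for s in specimens})


def visit_columns(specimens, worksheet_filter):
    """Minimal set: only visits with specimens matching this worksheet's filter."""
    return sorted({s["visit"] for s in specimens if worksheet_filter(s)})


def is_blood(specimen):
    """Hypothetical filter for a worksheet showing blood specimens only."""
    return specimen["primaryType"] == "Blood"


specimens = [
    {"visit": 1, "primaryType": "Blood"},
    {"visit": 2, "primaryType": "Saliva"},
    {"visit": 3, "primaryType": "Blood"},
]
print(visit_columns_old(specimens))        # visits 1, 2 and 3
print(visit_columns(specimens, is_blood))  # visits 1 and 3; visit 2 would be blank
```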

Additional vial count columns available in vial views

- Additional columns can be optionally presented in vial view and exported via Excel. These include the number of sibling vials currently available, locked in requests, currently at a repository and expected to become available, plus the total number of sibling vials.

- These columns are available via the ‘customize view’ user interface, so different named/saved views can be created. The built-in ability to save views per user enables specimen coordinators to see in-depth detail on available counts, while optionally presenting other users with a more minimal set of information.
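The optional sibling-vial columns are per-specimen aggregates over vials. A hypothetical Python sketch follows; the boolean vial fields are assumptions, and in particular the definition of "expected to become available" used here (at a repository, not yet available, not locked in a request) is a guess, not LabKey's rule.

```python
from collections import Counter


def sibling_counts(vials):
    """Per-specimen counts similar to the optional vial-view columns.

    Each vial is a dict with hypothetical keys specimenId, available,
    lockedInRequest and atRepository (the last three are booleans).
    """
    counts = {}
    for v in vials:
        c = counts.setdefault(v["specimenId"], Counter())
        c["total"] += 1
        c["available"] += int(v["available"])
        c["lockedInRequests"] += int(v["lockedInRequest"])
        # Assumed rule: at a repository, not yet available, not locked.
        expected = v["atRepository"] and not v["available"] and not v["lockedInRequest"]
        c["expectedAvailable"] += int(expected)
    return counts
```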

Performance

- Faster loading of specimen queries. Please review the 9.2 Upgrade Tips to determine whether any of your queries will need to be updated to work with the refactored specimen tables.

Specimen report improvements

- New filter options are available for specimen reports. You can now filter on the presence or absence of a completed request.

Assays

Validation and Transform Scripts

- Both transformation and validation scripts (written in Perl, R or Java) can now be run at the time of data upload. A validation script can reject data before acceptance into the database if the data do not meet initial quality control criteria. A data transformation script can inspect an uploaded data file and modify the data or populate empty columns that were not provided in the uploaded data. For example, you can populate a column calculated from other columns or flag out-of-range values.

- Validation support has been extended to NAb, Luminex, Microarray, ELISpot and file-based assay types. Validation is not supported for MS2 and Flow assays.

- A few notes on usage:

- Columns populated by transform scripts must already exist in the assay definition.

- Executed scripts show up in the experimental graph, providing a record that transformations and/or quality control scripts were run.

- Transform scripts are run before field-level quality control. The sequence is: transform, field-level quality control, programmatic quality control.

- A sample script and details on how to write a script are currently available in the specification.
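The execution order listed above (transform, then field-level quality control, then programmatic quality control) can be modeled as a small pipeline. In LabKey the scripts themselves are written in Perl, R or Java; this Python sketch only illustrates the sequencing and the rejection behavior. The column names `a`, `b` and `ratio`, the assay definition, and the range limit are hypothetical.

```python
class ValidationError(Exception):
    """Raised to reject an upload before anything reaches the database."""


# Hypothetical assay definition; transforms may only fill existing columns.
ASSAY_COLUMNS = {"a", "b", "ratio"}


def transform(rows):
    """Hypothetical transform script: populate a calculated 'ratio' column."""
    out = []
    for r in rows:
        a, b = r.get("a"), r.get("b")
        ratio = a / b if a is not None and b not in (None, 0) else None
        out.append({**r, "ratio": ratio})
    return out


def field_level_qc(rows, required=("a", "b")):
    """Field-level check: required fields must have values."""
    for i, r in enumerate(rows):
        missing = [f for f in required if r.get(f) is None]
        if missing:
            raise ValidationError(f"row {i}: missing {', '.join(missing)}")
    return rows


def programmatic_qc(rows, max_ratio=10.0):
    """Hypothetical validation script: reject out-of-range values."""
    bad = [i for i, r in enumerate(rows)
           if r["ratio"] is not None and r["ratio"] > max_ratio]
    if bad:
        raise ValidationError(f"ratio out of range in rows {bad}")
    return rows


def upload(rows):
    """Run the steps in the documented order; any failure rejects the upload."""
    rows = transform(rows)
    extra = {k for r in rows for k in r} - ASSAY_COLUMNS
    if extra:
        raise ValidationError(f"columns not in assay definition: {sorted(extra)}")
    rows = field_level_qc(rows)
    return programmatic_qc(rows)
```

Because the transform runs first, it has to tolerate incomplete rows; catching missing values is left to the field-level step that follows it.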

Specimen IDs provide lookups to study specimens

- For an assay, a specimenID that doesn't appear in a study is displayed with a red highlight to show the mismatch in specimenID and participantID. GlobalUniqueIDs are matched within a study, not between studies.

NAb Improvements

- The columns included in the "Run Summary" section of the NAb "Details" page can be customized. If there is a custom run view named "CustomDetailsView", the column set and order from this view will apply to NAb run details view.

- Significant performance enhancements. For example, switching from a run to a print view is much faster.

- Users with read permissions on a dataset that has been copied into the study from a NAb assay now see an [assay] link that leads to the "Details" view of a NAb assay.

New tutorial for Microarrays

Proteomics

Proteomics metadata collection

- The way that users enter proteomics run-level metadata has been improved and bulk-import capabilities have been added. The same approach used for specifying expected properties for other LabKey assays is now used for proteomics.

Proteomics-Study integration

- It is now possible to copy proteomics run-level data to a study dataset, allowing the proteomics data to be integrated with other study datasets. Note that the study dataset links back to the run that contains the metadata, not the search results.

Protein administration page enhanced

- A new utility on the protein administration page allows you to test parsing a FASTA header line

Views

Filter improvements

- A filter notification bar now appears above grid views and notes which filters have been applied to the view.

- The links above an assay remember your last filter. This helps you avoid reapplying the filter. For example, if you have applied a filter to the view, the filter is remembered when you switch between batches, runs and results. The filter notification bar above the view shows the filters that remain with the view as you switch between batches, runs and results.

File management

WebDAV UI enhancements provide a user-friendly experience

- Users can browse the repository in a familiar fashion similar to the Windows Explorer, upload files, rename files, and delete files. All these actions are subject to permission checking and auditing. Drag and drop from desktop and multi-file upload with progress indicator are supported. Additional information about the files is displayed, such as the date of file creation or records of file import into experiments.

Flow

Flow Dashboard UI enhancements

- These changes provide a cleaner set of entry points for the most common usages of Flow. The advanced features of the current Flow Dashboard remain easily accessible. Changes include:

- More efficient access to flow runs

- Ability to upload FCS files and import FlowJo workspaces from a single page.

New Tutorial

Custom SQL Queries

New SQL features supported

- COUNT(*)

- SELECT Table.*

- HAVING

- UNION in subqueries

- Parentheses in UNION and FROM clauses
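For readers new to these constructs, the following Python sketch runs them against an in-memory SQLite database using the standard `sqlite3` module. It illustrates standard SQL semantics rather than LabKey SQL specifically, and the `Vials` and `ArchivedVials` tables are hypothetical. SQLite does not accept parentheses around the operands of a UNION, so only the parenthesized-subquery form is shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Vials (GlobalUniqueId TEXT, SpecimenId TEXT, Volume REAL);
    INSERT INTO Vials VALUES ('V1', 'S1', 1.0), ('V2', 'S1', 0.5), ('V3', 'S2', 2.0);
    CREATE TABLE ArchivedVials (GlobalUniqueId TEXT, SpecimenId TEXT, Volume REAL);
    INSERT INTO ArchivedVials VALUES ('V9', 'S2', 1.5);
""")

# COUNT(*) with GROUP BY ... HAVING: specimens split into two or more vials.
multi = conn.execute("""
    SELECT SpecimenId, COUNT(*) AS n FROM Vials
    GROUP BY SpecimenId HAVING COUNT(*) >= 2
""").fetchall()

# SELECT Table.*: every column from one side of a join.
rows = conn.execute("""
    SELECT v.* FROM Vials v JOIN Vials w ON v.SpecimenId = w.SpecimenId
    WHERE w.GlobalUniqueId = 'V3'
""").fetchall()

# UNION inside a parenthesized subquery in the FROM clause.
total = conn.execute("""
    SELECT COUNT(*) FROM (
        SELECT GlobalUniqueId FROM Vials
        UNION
        SELECT GlobalUniqueId FROM ArchivedVials
    ) AS AllVials
""").fetchone()[0]

print(multi, rows, total)
```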

Client API

New Tutorial and Demo for LabKey JavaScript APIs

New JavaScript APIs

- LABKEY.Query.exportSql. Accepts a SQL statement and export format and returns an exported Excel or TSV file to the client. The result set and the export file are generated on the server. This allows export of result sets of over 15,000 rows, which is too much for JavaScript to parse into objects on the client.

- LABKEY.QueryWebPart. Supports filters, sort, and aggregates (e.g., totals and averages). Makes it easier to place a Query Web Part on a page.

- LABKEY.Form. Utility class for tracking the dirty state of an HTML form.

- LABKEY.Security Expanded. LABKEY.Security provides a range of methods for manipulating and querying security settings. A few of the new APIs:

- LABKEY.Security.getGroupsForCurrentUser. Reports the set of groups in the current project that includes the current user as a member.

- LABKEY.Security.ensureLogin. A client-side function that makes sure that the user is logged in. This is useful, for example, before calling an action that returns different results based on the user's permissions, such as listing the available folders or applying a container filter.

- Enhanced LABKEY.Security.getUsers. Now includes users' email addresses as the "email" property in the response.

New Java APIs

- The Java library now includes programmatic access to NAb data.

Generate a JavaScript, R or SAS script from a filtered grid view

- A new menu option under the "Export" button above a grid view will generate a valid script that can recreate the grid view. For example, you can copy-and-paste generated JavaScript into a wiki page source or an HTML file to recreate the grid view. Filters that have been applied to the grid view that are shown in the filter bar above the view are included in the script.

Collaboration

Customization of the “Issues” label

- The issues module provides a convenient tracking service, but some of the things one might want to track with this service are best described by titles other than “issues.” For example, one might use the issues module to track “requests,” “action items,” or “tickets.”

- Administrators can now modify the label displayed in the issue module’s views. The admin can specify a singular and plural form of the new label on a per-container basis. In most places in the UI where either term "Issue" or "Issues" is used, these configured values are used instead. The only exceptions are the name of the issues module when displayed in the admin console and folder customization, and the name of the controller in URLs.
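A per-container label with singular and plural forms can be modeled as a lookup with a default. The Python sketch below is hypothetical (the settings dictionary and function names are assumptions); it shows only how display text would change, while anything else, such as the controller name in URLs, stays fixed.

```python
DEFAULT_LABELS = ("Issue", "Issues")


def issue_labels(container_path, settings):
    """(singular, plural) labels for a container, or the defaults if unset."""
    return settings.get(container_path, DEFAULT_LABELS)


def count_text(n, container_path, settings):
    """Display text such as '1 Ticket' or '3 Tickets'."""
    singular, plural = issue_labels(container_path, settings)
    return f"{n} {singular if n == 1 else plural}"


def new_button_text(container_path, settings):
    """Label for the button that creates a new item."""
    singular, _ = issue_labels(container_path, settings)
    return f"New {singular}"


settings = {"/home/HelpDesk": ("Ticket", "Tickets")}
print(count_text(3, "/home/HelpDesk", settings))  # 3 Tickets
print(new_button_text("/home/Other", settings))   # New Issue
```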

Wiki enhancements

- Attachments

- A new option to hide the list of page attachments is available. Previously, files attached to wiki pages were always displayed below the page content. This is undesirable in cases where the attachments are simply images used within the page content itself.

- When wiki attachments are displayed, a file attachment divider is shown by default. CSS allows the text associated with the divider to be hidden.

- HTML Editor

- The wiki HTML editor has been updated to a newer version.

- The button for manipulating images is now enabled in the Visual Editor.

- Spellcheck is enabled on Firefox (but not IE).

- Print. You can now print a subtree of a wiki page tree.

Support for tabs in text areas

- Forms where you enter code and want to format it nicely. This includes the Wiki and query SQL editors.

- Forms where you enter TSV. This includes sample set, list, dataset, and custom protein annotation uploads.

- Support for simple tab entry, as well as multi-line indent and outdent with shift-tab.
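Multi-line indent and Shift-Tab outdent work line by line on the selection. The following Python sketch is a hypothetical model of that behavior; whether LabKey's editor inserts tabs or spaces, and how many leading spaces an outdent removes, are assumptions here.

```python
def indent(lines, tab="\t"):
    """Tab on a multi-line selection: prefix each line with one tab."""
    return [tab + line for line in lines]


def outdent(lines, tab="\t", width=4):
    """Shift-Tab: remove one leading tab, or up to `width` leading spaces."""
    out = []
    for line in lines:
        if line.startswith(tab):
            out.append(line[len(tab):])
        else:
            leading = len(line) - len(line.lstrip(" "))
            out.append(line[min(leading, width):])
    return out
```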

Message expiration

- Expiration of messages is now "Off" by default for newly created message boards. Existing message boards remain as they are.

Administration

PostgreSQL

- Support for PostgreSQL 8.4 Beta 1.

9.2 Upgrade Tips

Specimen Queries

The "Specimens" table has been split into two new tables, "Vials" and "Specimens," to enhance query speed. This means that you will need to reference one additional table when you use the raw specimen tables to perform a lookup.

Queries that use the raw specimen tables will need to be updated. However, queries that use the special, summary tables (Specimen Detail and Specimen Summary) are unaffected and do not need to be modified.

Example: A 9.1 query would have referenced the PrimaryType of a vial as follows:

SpecimenEvent.SpecimenId.PrimaryType

A 9.2 version of the same query would reference the PrimaryType using "VialId," a column in the new "Vials" table:

SpecimenEvent.VialId.SpecimenId.PrimaryType

The Vials table contains: rowID (of the specimen transaction record), globalUniqueID (of the vial), volume and specimenID. The Specimens table contains: participantID, visit number, date, primary type and rowIDs (of the vials generated from this specimen).
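For queries with many references, the path change can be applied mechanically. The Python sketch below rewrites 9.1-style `SpecimenEvent.SpecimenId.<Field>` lookups. Only the `PrimaryType` mapping is confirmed by the example above; the other column names, and routing vial-level fields such as `Volume` through `VialId` alone, are assumptions based on the table contents listed above, so review every rewritten query.

```python
import re

# Fields assumed to live on the new "Specimens" table (names are guesses
# except PrimaryType, which the upgrade example confirms).
SPECIMEN_FIELDS = {"PrimaryType", "ParticipantId", "Visit", "Date"}
# Fields assumed to live on the new "Vials" table.
VIAL_FIELDS = {"GlobalUniqueId", "Volume"}

OLD_PATH = re.compile(r"\bSpecimenEvent\.SpecimenId\.(\w+)")


def upgrade_lookup(sql):
    """Rewrite 9.1-style SpecimenEvent.SpecimenId.<Field> lookups for 9.2."""
    def repl(match):
        field = match.group(1)
        if field in SPECIMEN_FIELDS:
            return f"SpecimenEvent.VialId.SpecimenId.{field}"
        if field in VIAL_FIELDS:
            return f"SpecimenEvent.VialId.{field}"
        return match.group(0)  # unknown field: leave it for manual review
    return OLD_PATH.sub(repl, sql)


print(upgrade_lookup("SELECT SpecimenEvent.SpecimenId.PrimaryType FROM SpecimenEvent"))
```

Paths that already go through `VialId` do not match the pattern, so running the rewrite twice leaves them unchanged.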

Upgrade Note: If you have changed your specimen database using PgAdmin, you may have problems during upgrade. Please see a member of the LabKey team for assistance if this is the case.

Specimen Import

Specimen import is now more lenient in the conflicts it allows in imported specimen data. Previously, import of the entire specimen archive was disallowed if conflicts were detected between transaction records for any individual vial. In 9.2, all fields with conflicts between vials are marked "NULL" and the upload is allowed to complete.

Use a saved, custom view that filters for vials with the "Quality Control Flag" marked "True" in order to identify and manage vials that imported with conflicts.

Example: In 9.1, a vial with a single globalUniqueID was required to have the same type (blood, saliva, etc.) for all transactions. Vials that listed different types in different transaction records prevented upload of the entire archive. In 9.2, the conflicting type fields would be marked "NULL" so that these vials and their problematic fields can be reviewed and corrected after upload.
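The lenient import rule can be sketched as follows: collapse each vial's transaction records, set any field whose values disagree to NULL (`None`), and set a QC flag rather than rejecting the archive. This Python model is hypothetical; the record keys and the set of compared fields are assumptions.

```python
def merge_vial_events(events, fields=("PrimaryType", "ParticipantId")):
    """Collapse each vial's transaction records into one vial record.

    Fields whose values disagree across records become None (NULL) and the
    vial gets a QC flag, instead of the whole import failing.
    """
    by_vial = {}
    for e in events:
        by_vial.setdefault(e["GlobalUniqueId"], []).append(e)
    vials = {}
    for gid, records in by_vial.items():
        merged = {"GlobalUniqueId": gid, "QualityControlFlag": False}
        for f in fields:
            values = {r.get(f) for r in records}
            if len(values) == 1:
                merged[f] = values.pop()
            else:
                merged[f] = None
                merged["QualityControlFlag"] = True
        vials[gid] = merged
    return vials
```

Selecting the merged records whose `QualityControlFlag` is true plays the same role as the saved custom view filtered on "Quality Control Flag" = True.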

PostgreSQL 8.3

PostgreSQL 8.2 and 8.1 are unsupported on LabKey Server 9.2 and beyond, so you will need to Upgrade PostgreSQL.

Security Model

Extensive changes have been made to the security model in LabKey Server 9.2. Please see the Permissions and Roles spreadsheet for a detailed mapping of permissions under the old model to permissions under the new.

View Management

For 9.2, the "Manage Views" page is accessible to admins only. This means that nonadmins cannot delete or rename views of their own creation, as they could previously. Delete/rename ability will be restored for nonadmins in a future milestone.

MS2 Metadata Collection

The metadata collection process for mass spec files has been replaced. It is now based on the assay framework.

Wiki Attachments

Authors of wiki pages now have the option to show or hide the list of attachments that is displayed at the end of a wiki page. If displayed, the list of attachments will now appear under a bar that reads "File Attachments." This bar helps distinguish the attachment list from the page list. For portal pages where display of this bar is undesirable, you can use CSS to hide the bar.

Quality Control (QC)

The "QC Indicator" field is now called the "Missing Value" field.

Folder/Project Administration UI

The "Manage Project" menu under the "Admin" dropdown on the upper right (and on the left navigation bar) has changed. The new menu options available under "Manage Project" are:

- Permissions (for the folder or project; you can navigate around the project/folder tree after you get there)

- Project Users (Equivalent to the old "Project Members" option)

- Folders (Same as the current "Manage Folders," focused on current folder)

- Project Settings (Same as existing option of the same name, always available for the project)

- Folder Settings (Available if the container of interest is a folder. Equivalent to the old "Customize Folder." Allows you to set the folder type and choose missing value indicators)

Tutorials and Online Demos

Webinars and Videos

Roadmap for the Future

LabKey Roadmap

Mission: Build the leading platform for storing, analyzing, integrating and securely sharing high throughput laboratory and study data.

What that means to us

- LabKey Server should be the first choice for data storage, sharing and integration for any lab looking to move beyond simple file-based storage and analysis.

- LabKey Server should be scalable to any organization with large quantities of assay data.

- LabKey Server should be extensible to new experimental and analysis techniques.

Where we need to go

The main focus areas going forward are:

- Improved depth and breadth of assay support.

- Improved study support with an emphasis on data integration and analysis.

- Improved ease of use.

- Easy extensibility.

- CFR 21 Part 11b compliance

Each of these areas is covered in more detail below.

Improved Depth and Breadth of Assay Support

This is divided into several sub-areas:

- Improvements to the core MS2 and flow assays

- Improvements to general purpose assay toolkit (GPAT)

- Support for specific assays based on GPAT

Continued improvement in core assays

The core assays supported by LabKey, and the original reasons for the platform's success, are MS2-based proteomics and Flow Cytometry. It is important to keep these areas up to date.

Flow

- Flow File Repository. A key use case for flow customers is simply organizing, archiving and finding a large number of flow analyses. These could be new analyses or ones performed previously. This comprises the following features:

- Define drop-points with the ability to organize experiments based on administrator-defined rules.

- Automatic import and/or indexing of FCS data from file system

- Rich search across flow files.

- Improved FlowJo integration. Display full information including graphs for imported FlowJo workspaces. Open workspaces stored in LabKey in FlowJo. Funding: CAVD, Canary?

- Improved per-run/per-well gating. Improved user interface for creating, moving and redefining gates to be used in LabKey-based analysis. Funding: ITN

- Integrate with General Purpose Assay Framework, including support for sample resolution and publish to study. Funding: CAVD

MS2

- Better integration of Protein Databases with the core functionality.

- Move to a more mature and extensible processing pipeline. This will enhance reliability, improve throughput and support inserting custom analysis tools in the pipeline.

- Integrate MS2 results with Study analysis tools.

- Enable new analysis techniques.

- Label free quantitation

- Plug in tools that read CPAS data, analyze it, and return results that can be stored or displayed.

- Support new scoring engines as they become available.

Improvements to General Purpose Assay Toolkit

The General Purpose Assay Toolkit has provided LabKey with a platform to rapidly support a variety of new assays. The following improvements are on the table.

- General purpose dilution and plate based assay support. The General Purpose Assay Toolkit and the Plate Designer are extensible, pluggable tools, but we have not yet made it easy for labs to combine them to use on any plate-based dilution assay. The goal here is to allow labs to design their own plate designs and analysis to produce a set of results appropriate to their lab.

- Easier extensibility to new assay types. While the core LabKey team will do the work to import files for [...]. In particular, it should be relatively easy for a programmer to write an extension to the assay toolkit that knows how to parse laboratory-specific file types. These extensions would need minimal programming to get the full benefits of the assay toolkit.

- Better consistency and sharing of core assay types. Because MS2 and Flow Cytometry assay support predates the General Purpose Assay Toolkit, these assays don’t have an integrated “publish to study” capability and have slightly different customization profiles. We would like all supported assays to support the same basic extensibility, tagging and publishing features.

Support for specific common assays based on GPAT

We hope that GPAT allows many labs to build in their own assay data analysis tools, but there are specific assays, widespread among our customers, that the LabKey core team intends to work on.

- ELISpot. ELISpot is a plate-based assay that we will provide custom support for. In particular we want to integrate plate layouts with sequence information. Support: CHAVI, CAVD.

- SoftMAX Pro. SoftMAX Pro is a popular data acquisition and analysis tool. The core LabKey team will be doing work to integrate the tool. Support: CHAVI

Improved Study Support for Data Integration and Analysis

- Study building and maintenance. The study framework relies on import of externally defined data structures. The user interface for building and maintaining studies is marginal. Study building and maintenance should be integrated into a rich user interface similar to the Vaccine study design tool. Support: CAVD, IAVI.

- Direct data entry. For human studies we have relied on external tools to gather and [...]. For animal studies, users do not want to enter data into an external system or spreadsheet before getting data into LabKey. LabKey will provide a data entry system. Support: IAVI.

- Support for common analysis scenarios. The data analysis tools in LabKey Server can be applied to typical study problems, but they do not offer enough help in building common views & graphs. In particular, the system should be aware of cohorts and offer help in generating views that compare cohorts, for example charts with separate series for each cohort as well as simplified filtering & grouping by cohort.

- Cross-server data transfer and integration. We have several situations where servers area

Ease of Use

Improvements to user interfaces will allow users to make the most out of the capabilities of the LabKey server. Here are particular areas of emphasis going forward.

- Overall Navigation and UI Framework. A few standard metaphors for navigation need to be enforced throughout the product.

- Data grids should have a consistent UI and consistent customization and reporting capabilities available to them.

- Admin pages should have an integrated and consistent UI

- Support for common scenarios. Work on the user interface often stops once a task becomes possible, rather than when it becomes easy or obvious. For example, just about all studies have the notion of cohorts, but the study structure and reporting tools don’t recognize this important concept, so building reports and graphs on the common case (cohorts) is no easier than building reports and graphs based on any other data structure.

- Reporting and analysis. LabKey incorporates a powerful query builder that allows data integration. This power is obscured by an inconsistent user interface and the need for scripting in R. We would like to make it easier to create standardized reports and to generalize R-based reports so that they can be parameterized and reused by people who do not know R.

Ease of Extensibility

Many laboratories have custom data sets and data analysis techniques that they would like to expose via the server.

- Improved web-based customization for non-programmers. LabKey Server already allows building custom schemas via the Lists feature, and custom pages that can include web parts. There are several areas for improvement:

- Improved support for Lists including custom forms and validation for list data.

- Improved support for including web-based data in wiki pages. (Currently web parts can be included, but they cannot be parameterized.)

- Easy to build Java extensions. The current API is huge. We would like to make it easy to write a Java extension with minimal code to create and lay out pages.

- Extensions written in other languages. There is currently limited CGI support via a CGI servlet that passes some security and context information to the CGI script. This could be extended to create support for “Perl Modules” that integrate with the rest of the UI.

CFR 21 Part 11b compliance

To be used for many types of research, LabKey Server must be in full compliance with CFR 21 Part 11.

Administration

Overview


Administrative features provided by LabKey Server include:

- Project organization, using a familiar folder hierarchy

- Role-based security and user authentication

- Dynamic web site management

- Backup and maintenance tools

Documentation Topics

Set Up Your Server

Maintain Your Server

Installs and Upgrades

This section explains how to install or upgrade LabKey Server.

Topics:

Before You Install

Do I Need to Contact LabKey?

If you are interested in using LabKey Server in your laboratory, please register with LabKey Corporation to download the free, installable files provided by LabKey Corporation. Once you have a user account, you can install LabKey Server on your local computer. Since LabKey Server is an open source project, its source code is freely available for anyone to compile (see "Enlisting in the Version Control Project" and "Source Code").

Install Manually or Use the Installer?

You can run LabKey on computers running Microsoft Windows or most Unix variants, including Linux, Macintosh, and Solaris. If you are running on Windows and your installation needs are simple, you can run our binary installer, which will walk you through the installation process, put all files where they need to go, and configure LabKey for you. See the help topic on Install LabKey via Installer.

If your installation needs are more complex, you can install LabKey manually using our step-by-step instructions. To install LabKey manually, see Install LabKey Manually.

How Do I Upgrade?

To upgrade LabKey, see Upgrade LabKey.

What Happens When I Install LabKey?

When you install LabKey, the following components are installed on your computer:

- The Apache Tomcat web server, version 5.5.20

- The PostgreSQL database server, version 8.3 (unless you install manually and choose to run LabKey against Microsoft SQL Server instead)

- The Java Runtime Environment (JRE), version 1.6.0-10

- The LabKey web application components

- Additional third-party components, installed to the /bin directory of your LabKey installation.

When you install LabKey, your computer becomes a web server. This means that if your computer is publicly visible on the internet, or on an intranet, other users will be able to view your LabKey home page. The default security settings for LabKey ensure that no other pages in your LabKey installation will be visible to users unless you specify that they should be. It's a good idea to familiarize yourself with the LabKey security model before you begin adding data and information to LabKey, so that you understand how to specify which users will be able to view it or modify it. For more information on securing LabKey, see Security and Accounts.

Troubleshooting:

- The LabKey installer attempts to install PostgreSQL on your computer. You can only install one instance of PostgreSQL on your computer at a time. If you already have PostgreSQL installed, LabKey can use your installed instance; however, you will need to install LabKey manually. See Install LabKey Manually for more information.

- You may need to disable your antivirus or firewall software before running the LabKey installer, as the PostgreSQL installer conflicts with some antivirus or firewall software programs (see http://pginstaller.projects.postgresql.org/faq/FAQ_windows.html for more information).

- On Windows you may need to remove references to Cygwin from your Windows system path before installing LabKey, due to conflicts with the PostgreSQL installer (see http://pginstaller.projects.postgresql.org/faq/FAQ_windows.html for more information).

- If you uninstall and reinstall LabKey, you may need to manually delete the PostgreSQL data directory in order to reinstall.
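Before running the installer, you can check whether something is already listening on PostgreSQL's default port, 5432. The Python sketch below is a rough heuristic, not a LabKey tool: a listener on that port suggests an existing PostgreSQL instance (or another program), while a stopped or differently configured instance will not be detected.

```python
import socket


def port_in_use(port=5432, host="127.0.0.1", timeout=1.0):
    """True if something accepts TCP connections on host:port.

    5432 is PostgreSQL's default port. A listener there suggests, but does not
    prove, an existing PostgreSQL instance; a stopped instance is not detected.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if port_in_use():
        print("Port 5432 is in use: check for an existing PostgreSQL install "
              "and consider installing LabKey manually.")
```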

What System Resources are Required for Running LabKey?

LabKey is a web application that runs on Tomcat and accesses a PostgreSQL or Microsoft SQL Server database server. The resource requirements for the web application itself are minimal, but the computer on which you install LabKey must have sufficient resources to run Tomcat and the database server (unless you are connecting to a remote database server, which is also an option). The performance of your LabKey system will depend on the load placed on the system, but in general a modern server-level system running Windows or a Unix-based operating system should be sufficient.

We recommend the following resources as the minimum for running LabKey:

- Processor: a high-performing processor such as a Pentium 4, or, preferably, a dual-processor machine.

- Physical memory: at least 1 gigabyte RAM, preferably 2 GB.

- Disk space: at least 1 gigabyte of free hard drive space

Note: An active LabKey system that searches, stores, and analyzes a large quantity of results and proteins may require significantly more resources. For example, the LabKey system at Fred Hutchinson Cancer Research Center uses a hierarchical network file store for archiving raw data and processed data, a 100-CPU cluster for MS/MS searching, a database server using a three terabyte disk array for storing and querying results, and a separate web server running LabKey itself.

Install LabKey via Installer

These instructions explain how to use the LabKey binary installer for Windows. If you prefer to install LabKey manually on Windows or you are installing on a non-Windows machine, see the Install LabKey Manually help topic.

LabKey is supported on computers running Windows XP or later, with up-to-date service packs. LabKey may run on other versions of Windows as well, but only these versions are supported.

To install LabKey on a computer running Windows, download and run the LabKey installer, which LabKey Corporation provides as a free download after free registration. You can choose between two installers, depending on whether the Java Runtime Environment (JRE) is already installed on your computer. For more information on what components are installed on your computer with LabKey, see Before You Install.

When you run the installer, you will be prompted to choose between express and advanced installation. If you are installing LabKey on your local computer to try it out, the express installation, which installs the minimum features required for LabKey to work, may be sufficient for you. If you are installing LabKey for your organization to use, you'll want to perform an advanced installation, or install LabKey manually.

Express Installation

If you choose the Express installation option, the Windows installer will prompt you to take the following steps, in addition to standard software installation configuration options:

- Indicate that you understand that when you install LabKey, your computer becomes a web server and a database server.

- Provide connection information for an outgoing (SMTP) mail server. The mail server is used to send email generated by the LabKey system, including email sent to new users when they are given accounts on LabKey. The installer will prompt you to specify an SMTP host, port number, user name, and password, and an address from which automated emails are sent. Note that if you are running Windows and you don't have an SMTP server available, you can set one up on your local computer. For more information, see the SMTP Settings section in Modify the Configuration File.

- Provide a user name and password for the database superuser for PostgreSQL, the database server that the installer installs. In PostgreSQL, a superuser is a user who has all rights in all databases, including the right to create users. You can provide the account information for an existing superuser, or create a new one. You may want to write down the user name and password you provide. This password is the first of the three discrete types of passwords used on LabKey Server.

- Provide a user name and password for the Windows service user. LabKey is installed as a Windows service, and must run under a unique Windows user account; you cannot specify an existing user account. This password is the second of the three discrete types of passwords used on LabKey Server.
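You may want to confirm that the SMTP host, port, user name, and password work before you enter them in the installer. The sketch below is a hypothetical helper and is not part of LabKey. It uses Python's standard smtplib to connect, logs in only if a user name is supplied, and sends one test message. It assumes a server that accepts plain SMTP on the given port. It does not attempt STARTTLS or SSL, so a server that requires encryption will report failure.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(from_addr: str, to_addr: str) -> EmailMessage:
    """Compose a short plain-text message for the connectivity test."""
    msg = EmailMessage()
    msg["From"] = from_addr
    msg["To"] = to_addr
    msg["Subject"] = "LabKey SMTP test"
    msg.set_content("If you can read this, the SMTP settings work.")
    return msg


def smtp_settings_work(host: str, port: int, user: str, password: str,
                       from_addr: str, to_addr: str, timeout: float = 10.0) -> bool:
    """Return True if the server accepted the test message, False otherwise."""
    try:
        with smtplib.SMTP(host, port, timeout=timeout) as server:
            if user:  # many internal relays need no authentication
                server.login(user, password)
            server.send_message(build_test_message(from_addr, to_addr))
        return True
    except (smtplib.SMTPException, OSError):
        # Covers refused connections, timeouts, bad credentials, and rejected addresses.
        return False
```

For example, smtp_settings_work("smtp.example.org", 25, "", "", "labkey@example.org", "you@example.org") tries an unauthenticated relay. The host and addresses in this call are placeholders.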

Advanced Installation

If you choose the Advanced installation option, you'll be prompted to set up a connection to an outgoing (SMTP) mail server, as described above for the Express Installation.

You'll also be prompted to specify information for mapping a network drive in the case that LabKey needs to access files on a remote server. Specify a drive letter, the UNC path to the remote server, and a user name and password for accessing that share; these can be left blank if no user name or password is required.
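The network drive fields expect a UNC path of the form \\server\share. You can sanity-check these values before entering them. The installer does not run this check. The Python sketch below splits a UNC path into its server and share names and validates a single drive letter. The function names are illustrative only.

```python
from typing import Optional, Tuple


def parse_unc_path(path: str) -> Optional[Tuple[str, str]]:
    r"""Return (server, share) for a UNC path such as \\fileserver\labdata\raw, else None."""
    normalized = path.replace("/", "\\")  # also accept //server/share
    if not normalized.startswith("\\\\"):
        return None  # e.g. C:\data is a local path, not a UNC path
    parts = normalized[2:].split("\\")
    if len(parts) < 2 or not parts[0] or not parts[1]:
        return None  # both a server name and a share name are required
    return parts[0], parts[1]


def is_valid_drive_letter(letter: str) -> bool:
    """Accept a single letter such as 'Z' or 'Z:' (any case)."""
    if letter.endswith(":"):
        letter = letter[:-1]
    return len(letter) == 1 and letter.isascii() and letter.isalpha()
```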

Finally, if your organization has an LDAP server, you can optionally specify that LabKey should connect to it to authenticate users. If you do, any user listed by the LDAP server can log on to LabKey with the same user name and password that the LDAP server manages. By default, any user authenticated through LDAP is a member of the Users group on the LabKey system and has the same permissions as other members of that group.

Setting Up Your Account

At the end of the installation process, the LabKey installer automatically opens LabKey in your default web browser, provided you left the default option Open Browser to LabKey Home Page selected. Otherwise, open your web browser and navigate to http://localhost:8080/labkey.
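The browser may not open, or you may want to script a check that the server came up. In either case, the Python sketch below requests the default URL http://localhost:8080/labkey using only the standard library. The helper name is hypothetical. It treats any HTTP response below 500, including redirects and 404s, as a sign that Tomcat is answering. It does not prove that LabKey itself started correctly.

```python
import urllib.error
import urllib.request

DEFAULT_URL = "http://localhost:8080/labkey"  # default address given above


def labkey_responds(url: str = DEFAULT_URL, timeout: float = 5.0) -> bool:
    """Return True if an HTTP server answers at `url` with a status below 500."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 500
    except urllib.error.HTTPError as err:
        # HTTPError must be caught before the broader OSError below;
        # a 4xx reply still means Tomcat is up and answering.
        return err.code < 500
    except OSError:
        # URLError (refused connection, unknown host) and timeouts are OSErrors.
        return False
```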

Once you launch LabKey, you'll be prompted to set up an account by entering your email address and a password. This password is the third of the three discrete types of passwords used on LabKey Server. When you enter your email address and password, you are added to the global administrators group for this LabKey installation. For more information on the role of the global (a.k.a. site) administrator, see Site Administrator.

You'll then be prompted to install the LabKey modules. For most users, the Express Install is recommended. LabKey will install all modules and then give you the choice of viewing the home page, or further customizing the installation by setting properties for the LabKey application. For more information on this option, see Site Settings.

The Advanced Install is for users who want to selectively upgrade modules and may be confusing unless you are familiar with the underlying architecture of the LabKey system. If you click the Advanced Install button and find yourself confronted by a confusing array of options, you can successfully finish the LabKey installation by clicking the Run Recommended Scripts and Finish button for each page displayed until the installation is complete.

Customize the Installation

After you've installed LabKey, you'll be prompted to customize your installation. See Site Settings for more information.

Installer Troubleshooting

Note that the LabKey installer installs PostgreSQL on your computer. You can only have one PostgreSQL installation on your computer at a time, so if you have an existing installation, the LabKey installer will fail. Try uninstalling PostgreSQL, or perform a manual installation of LabKey instead. See Install LabKey Manually for more information.
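One rough way to spot an existing PostgreSQL server before you run the installer is to check whether anything is listening on PostgreSQL's default TCP port, 5432. The Python sketch below does this. The helper name is hypothetical, and the check is only a heuristic. An existing installation may use a different port or be stopped, and another program could be using port 5432.

```python
import socket

POSTGRES_DEFAULT_PORT = 5432


def port_in_use(port: int = POSTGRES_DEFAULT_PORT, host: str = "127.0.0.1",
                timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.connect((host, port))
        except OSError:
            # Refused or timed out: nothing is listening (or a firewall dropped it).
            return False
        return True


if __name__ == "__main__":
    if port_in_use():
        print("Port 5432 is in use; an existing PostgreSQL server may be running.")
```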

Before you install LabKey, you should shut down all other running applications. If you have problems during the installation, try additionally shutting down any virus scanning application, internet security applications, or other applications that run in the background.

On Windows you may need to remove references to Cygwin from your Windows system path before installing LabKey, due to conflicts with the PostgreSQL installer (see http://pginstaller.projects.postgresql.org/faq/FAQ_windows.html for more information).

Securing the LabKey Configuration File

Important: The LabKey configuration file contains user name and password information for your database server, mail server, and network share. For this reason you should secure this file within the file system, so that only designated network administrators can view or change this file. For more information on this file, see Modify the Configuration File.
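On Unix-like systems, one way to secure the file is to make it readable and writable only by its owner (mode 600). The owner should be the account Tomcat runs as, because Tomcat must still be able to read the file. The Python sketch below assumes that arrangement. The helper names are hypothetical, and you supply the file path. On Windows, os.chmod only toggles the read-only flag, so use NTFS permissions there instead.

```python
import os
import stat

# Group and "other" permission bits that should all be cleared.
NON_OWNER_BITS = stat.S_IRWXG | stat.S_IRWXO


def restrict_to_owner(path: str) -> None:
    """Equivalent to `chmod 600 path`: owner may read and write, nobody else may."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)


def is_private(path: str) -> bool:
    """True if neither the group nor other users have any access bits."""
    return (os.stat(path).st_mode & NON_OWNER_BITS) == 0
```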

Install LabKey Manually

If you are installing LabKey Server for evaluation purposes, we recommend that you use the graphical Windows installer. The Windows installer is faster, easier, and less prone to errors than installing on Unix or manually installing on Windows. Installing manually requires moderate network and database administration skills.

Reasons to install LabKey Server manually include:

- You're installing LabKey Server on a Linux- or Unix-based computer or a Macintosh.

- You're installing LabKey Server in a production environment and you want fine-grained control over file locations.

- You have an existing PostgreSQL installation on your Windows computer. Only one instance of PostgreSQL can be installed per computer, so the Windows installer will fail if there is an existing PostgreSQL installation.

- You have an existing Tomcat installation on your Windows computer and you want LabKey Server to use it, rather than installing a new instance. Note that Tomcat can be installed multiple times on the same machine.

LabKey Server is a Java web application that runs under Apache Tomcat and accesses a relational database. Currently LabKey Server works with both PostgreSQL and Microsoft SQL Server. Note that you only need to install one or the other, not both.

LabKey Server can also use a network file share for the data pipeline, and an outgoing (SMTP) mail server for sending system emails. LabKey Server may optionally connect to an LDAP server to authenticate users within an organization.

If you are manually installing LabKey Server, you need to download, install, and configure all of its components yourself. The following topics explain how to do this in a step-by-step fashion. If you are installing manually on Unix, Linux, or Macintosh, the instructions assume that you have super-user access to the machine, and that you are familiar with Unix commands and utilities such as wget, tar, chmod, and ln.

If you are upgrading LabKey Server from CPAS 1.3 or later on Windows, you can use the Windows installer to perform the upgrade. To upgrade LabKey Server manually, see the manual upgrade instructions.

Install Required Components

If you are manually installing or upgrading LabKey Server, you'll need to install the correct versions of all of the required components. This topic details how and where to install these components.

Before you begin, register with LabKey Corporation if you haven't already done so, so that you can download the LabKey Server installation files it provides. Note that you'll still need to download the third-party components required by LabKey Server separately, as described below.

Before installing these components, think about where you want them to reside in the file system. For example, you may want to create a LabKey Server folder at the root level and install all components there, or on Unix systems, you may want to install them to /usr/local/labkey or a similar location.

Note: The only restriction on where you can install LabKey Server components is that you cannot put the LabKey Server web application files beneath the <tomcat-home>/webapps directory.
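If you script your installation, a check like the following can catch this mistake early. This is a minimal Python sketch with hypothetical function and argument names. It resolves both paths, following any existing symbolic links. It then reports whether the web application directory is <tomcat-home>/webapps itself or anywhere beneath it.

```python
from pathlib import Path


def is_under_webapps(webapp_dir: str, tomcat_home: str) -> bool:
    """True if webapp_dir is <tomcat-home>/webapps or any directory beneath it."""
    webapps = (Path(tomcat_home) / "webapps").resolve()
    target = Path(webapp_dir).resolve()
    # Path.parents lists every ancestor, so nested locations are caught too.
    return target == webapps or webapps in target.parents
```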

Note: We provide support only for the versions listed for each component, and so we strongly recommend that you install that version. These are the versions that have proven themselves over many months of testing and deployment. Some of these components may have more recent releases, but we have not tested or configured the system to work with them.

Install the Java Runtime Environment

- Download the Java Runtime Environment (JRE) 1.6 from http://java.sun.com/javase/downloads/index.jsp.

- Install the JRE to the chosen directory. On Windows the default installation directory is C:\Program Files\Java. On Linux a common place to install the JRE is /usr/local/jre<version>. We suggest creating a symbolic link from /usr/local/java to /usr/local/jre<version>. This will make upgrading the JRE easier in the future.
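With the symbolic link in place, an upgrade only requires repointing one link. The Python sketch below behaves roughly like `ln -sfn` on a single link. The helper name is hypothetical. It assumes a POSIX system, where os.replace renames atomically. If the link path is a real directory, os.replace fails rather than replacing it. The same approach applies to the /usr/local/tomcat link described below.

```python
import os
from pathlib import Path


def point_symlink(link: str, target: str) -> None:
    """Create or repoint the symbolic link `link` so it refers to `target`."""
    link_path = Path(link)
    tmp_path = link_path.with_name(link_path.name + ".tmp")
    # Clear a stale temporary link left behind by an interrupted earlier run.
    if tmp_path.is_symlink():
        tmp_path.unlink()
    os.symlink(target, tmp_path)
    # Rename the new link over the old one; atomic on POSIX. This fails
    # (rather than deleting anything) if `link` is a real directory.
    os.replace(tmp_path, link_path)
```

For example, point_symlink("/usr/local/java", "/usr/local/jre1.6.0_XX"), run as root, where jre1.6.0_XX is a placeholder for the version directory you actually installed.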

Notes:

- The JDK includes the JRE, so if you have already installed the JDK, you don't need to also install the JRE.

- If you are planning on building the LabKey Server source code, you should install the JDK 1.6 and configure JAVA_HOME to point to the JDK. For more information, see Building the Source Code.

- If you are installing LabKey on a Mac, you do not need to install the JRE, because it comes with the operating system. Check that the JRE version included with the OS is sufficiently recent. For example, Tiger 10.4.10 comes with JRE 1.5, which is fine.

Install the Apache Tomcat Web Server, Version 5.5.x

LabKey Server supports Tomcat versions 5.5.9 through 5.5.25 and version 5.5.27. Tomcat 5.5.27 is the recommended version of Tomcat for LabKey Server 9.1. For details on supported Tomcat versions, see Supported Tomcat Versions.
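The supported range has a gap at 5.5.26, which is easy to miss. If you automate checks of an existing Tomcat installation, the Python sketch below encodes the range this page states for LabKey Server 9.1. The function names are hypothetical, and the sketch handles only plain numeric versions such as 5.5.27. It compares versions as integer tuples, so 5.5.10 correctly sorts after 5.5.9, which a string comparison would get wrong.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '5.5.27' into (5, 5, 27) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def tomcat_supported(version: str) -> bool:
    """Supported per this page: 5.5.9 through 5.5.25, plus 5.5.27 (not 5.5.26)."""
    v = parse_version(version)
    in_range = parse_version("5.5.9") <= v <= parse_version("5.5.25")
    return in_range or v == (5, 5, 27)
```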

- Download Tomcat 5.5.x from http://tomcat.apache.org/download-55.cgi. Note that this link leads you to the most recent version of Tomcat. For version 5.5.27, see http://tomcat.apache.org/download-55.cgi#5.5.27.

- Install Tomcat. On Linux, install to /usr/local/apache-tomcat<version>, then create a symbolic link from /usr/local/tomcat to /usr/local/apache-tomcat<version>. We will call this directory <tomcat-home>.