This topic covers tips for successfully importing data into LabKey Sample Manager. These guidelines and limitations apply to uploading files, data describing samples and sources, and assay data.

Use Import Templates

For the most reliable method of importing data, first obtain a template for the data you are importing. You can then ensure that your data conforms to expectations before using either Add > Import from File or Edit > Update from File.

For

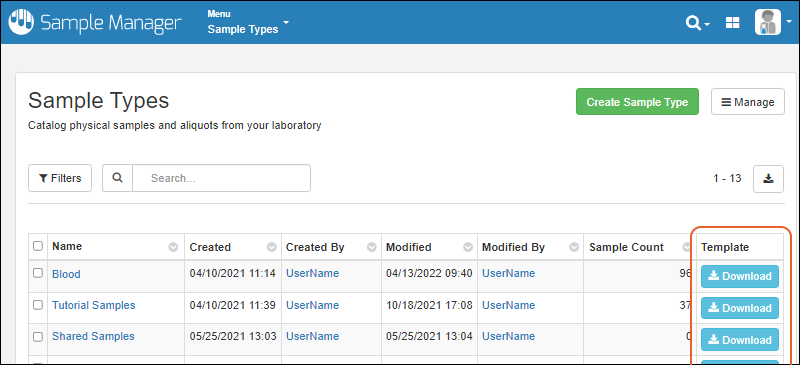

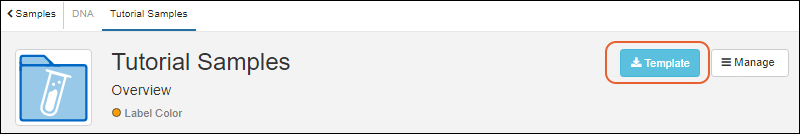

Source Types, Sample Types, and Assay Results, click the category from the main menu. You'll see a

Template button for each data structure defined.

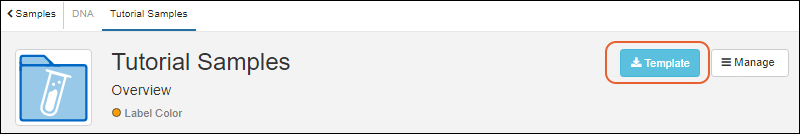

You can also find the template download button on the overview page for each Sample Type, Source Type, or Assay:

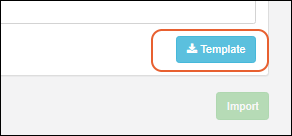

In case you did not already obtain a template, you can also download one from within the file import interface itself:

Use the downloaded template as a basis for your import file. It will include all possible columns and will exclude unnecessary ones. You may not need to populate every column of the template when you import data.

- For a Sample Type, if you have defined Parent or Source aliases, all the possible columns will be included in the template, but only the ones you are using need to be included.

- In cases of columns that cannot be edited directly (such as the Storage Status of a sample, which is defined by a sample having a location and not being checked out), these columns will be omitted from the template.

- Note that the template for assay designs includes the results columns, but not the run or batch ones.
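Before importing, it can help to compare the header row of your data file against the downloaded template. The following is a minimal sketch, assuming both files are tab-separated text; the column names (`SampleID`, `Volume`, `CollectionDate`, `Notes`) and the helper functions are hypothetical and not part of Sample Manager.

```python
import csv
import io

def read_header(text, delimiter="\t"):
    """Return the first row of delimited text as a list of column names."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    return next(reader, [])

def compare_to_template(template_header, data_header):
    """Report data columns missing from the template, and template columns left out."""
    template = set(template_header)
    data = set(data_header)
    return {
        "not_in_template": sorted(data - template),
        "omitted": sorted(template - data),
    }

# Hypothetical template and data file contents, for illustration only.
template_text = "SampleID\tVolume\tCollectionDate\tNotes\n"
data_text = "SampleID\tVolume\tColectionDate\nS-1\t5\t2024-01-01\n"

report = compare_to_template(read_header(template_text), read_header(data_text))
print(report)
```

Columns listed under `omitted` are not necessarily a problem, since you may not need to populate every template column. Columns listed under `not_in_template` are worth checking for misspellings.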

Background Import (Asynchronous Import)

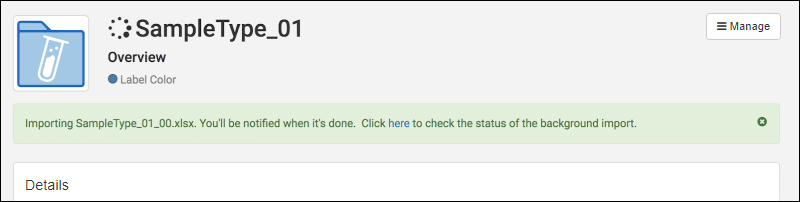

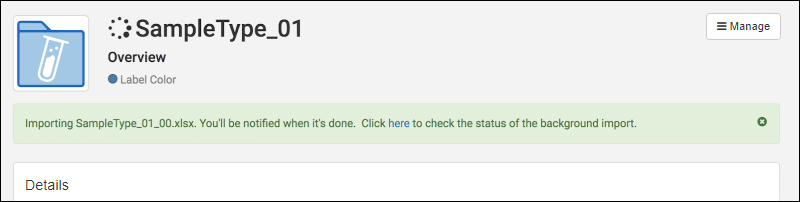

When a file import is large enough that it will take considerable time to complete, the import is automatically done in the background. Files larger than 100 KB are imported asynchronously. This allows users to continue working within the app while the import completes.
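If you want to know in advance whether a file is likely to be imported in the background, you can check its size locally. This is a rough sketch only: it assumes the threshold means 100 × 1024 bytes, which the documentation does not specify, and the function name is hypothetical.

```python
import os
import tempfile

# Assumption: the 100 KB threshold is read here as 100 * 1024 bytes.
ASYNC_THRESHOLD_BYTES = 100 * 1024

def will_import_in_background(path, threshold=ASYNC_THRESHOLD_BYTES):
    """Return True if the file on disk is larger than the threshold."""
    return os.path.getsize(path) > threshold

# Demonstrate with two throwaway files.
with tempfile.TemporaryDirectory() as tmp:
    small = os.path.join(tmp, "small.tsv")
    large = os.path.join(tmp, "large.tsv")
    with open(small, "w") as f:
        f.write("Name\tVolume\n")
    with open(large, "w") as f:
        f.write("x" * (ASYNC_THRESHOLD_BYTES + 1))
    small_result = will_import_in_background(small)
    large_result = will_import_in_background(large)

print(small_result, large_result)
```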

Import larger files as usual. You will see a banner message indicating that the background import is in progress, and a spinner icon alongside that sample type until it completes:

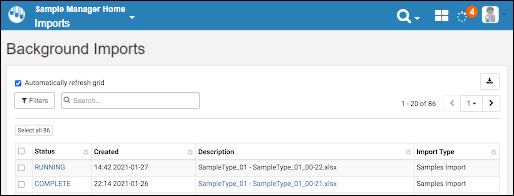

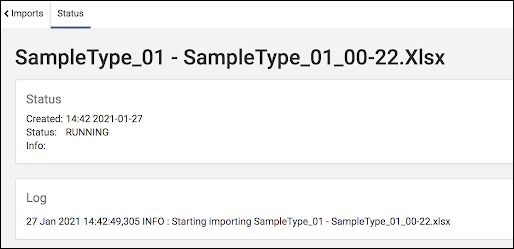

Any user in the application will see the spinner in the header bar. To see the status of all asynchronous imports in progress, choose Background Imports from the user menu.

Click a row for a page of details about that particular import, including a continuously updating log.

When the import is complete, you will receive an in-app notification via the menu.

Import Performance Considerations

Excel files containing formulas will take longer to upload than files without formulas.

The performance of importing data into any structure is related to the number of columns. If your sample type or assay design has more than 30 columns, you may encounter performance issues.

Batch Delete Limitations

You can only delete 10,000 rows at a time. To delete larger sets of sample or assay data, select batches of rows to delete.
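If you are planning a large deletion, it may help to work out ahead of time how the rows divide into batches that fit under the limit. A minimal sketch, assuming you have a list of row identifiers exported from a grid; the function name and the identifiers are hypothetical.

```python
def split_into_batches(row_ids, batch_size=10_000):
    """Yield consecutive slices of row_ids, each at most batch_size long."""
    for start in range(0, len(row_ids), batch_size):
        yield row_ids[start:start + batch_size]

# Hypothetical example: 25,000 rows need three separate delete operations.
row_ids = list(range(25_000))
sizes = [len(batch) for batch in split_into_batches(row_ids)]
print(sizes)
```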

Column Headers

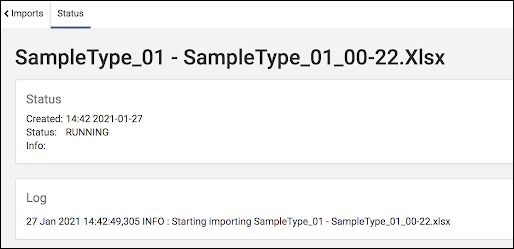

Data column headers should not include spaces or special characters such as '/' slashes. Instead, rename data columns to use CamelCasing or '_' underscores as word separators. Displayed column headers will parse the internal capitals and underscores to show spaces in the column names.
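As an illustration of this naming guideline, the sketch below converts free-form headers into CamelCase field names and then approximates how such a name might be displayed. Both functions are hypothetical helpers; the label function only imitates the behavior described above and may not match the application's exact rules.

```python
import re

def to_field_name(header):
    """Drop spaces and special characters, capitalizing the first letter of each word."""
    words = re.findall(r"[A-Za-z0-9]+", header)
    return "".join(word[:1].upper() + word[1:] for word in words)

def approximate_label(field_name):
    """Approximate a display label: underscores and internal capitals become spaces."""
    spaced = field_name.replace("_", " ")
    # Insert a space wherever a lowercase letter or digit is followed by a capital.
    return re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", spaced)

print(to_field_name("Freeze/Thaw Count"))   # FreezeThawCount
print(to_field_name("collection date"))     # CollectionDate
print(approximate_label("CollectionDate"))  # Collection Date
```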

Once you've created a sample type or assay data structure following these guidelines, you can change the Label for the field (under Name and Linking Options in the field editor) if desired. For example, if you want to show a column with units included, you could import the data with a column name of Platelets and then set the label to show "Platelets (per uL)" to the user.

You can also use Import Aliases to map a column name that contains spaces to a sample type or assay field that does not. Remember to use "double quotes" around names that include spaces.

For example, if your assay data includes a column named "Platelets (per uL)", you would define your assay with a field named "Platelets" and include "Platelets (per uL)" (including the quotes) in the Import Aliases box of the assay design definition.
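The quoting rule can be sketched as a small helper that prepares the text to paste into the Import Aliases box. This assumes multiple aliases are separated by spaces, which is not stated in this topic; the function name and the second alias (`PLT`) are hypothetical.

```python
def format_import_aliases(aliases):
    """Wrap any alias containing whitespace in double quotes, then join with spaces."""
    parts = []
    for alias in aliases:
        if any(ch.isspace() for ch in alias):
            parts.append(f'"{alias}"')
        else:
            parts.append(alias)
    # Assumption: a space is an accepted separator between aliases.
    return " ".join(parts)

alias_text = format_import_aliases(["Platelets (per uL)", "PLT"])
print(alias_text)  # "Platelets (per uL)" PLT
```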

Data Preview Considerations

Previewing data stored as a TSV or CSV file may be faster than previewing data imported as an Excel file, particularly when file sizes are large.

Excel files that include formulas will take longer to preview than similar Excel files without formulas.

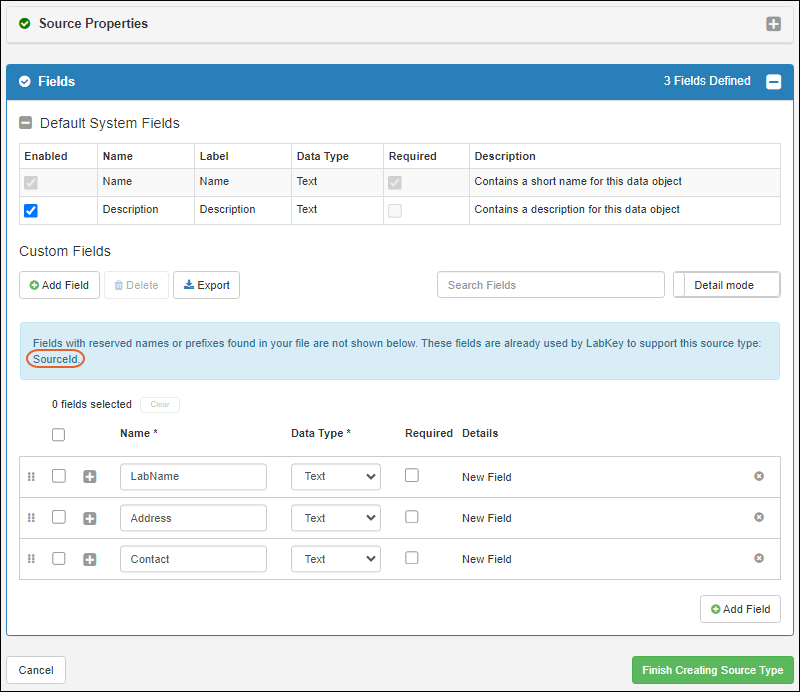

Reserved Fields

There are a number of reserved field names used within the system for every data structure. These are populated internally when data is created or modified, or are otherwise reserved, and cannot be redefined by the user:

- Created

- CreatedBy

- Modified

- ModifiedBy

- Name

- RowId

- LSID

- Folder

- Properties

In addition, Sample and Source Types reserve these field names:

- Description

- SampleId & SourceId

- SampleState (surfaced as "Status" in the application)

- MaterialExpDate (surfaced as "Expiration Date" in the application)

- Flag

- SourceProtocolApplication

- SourceApplicationInput

- RunApplication

- RunApplicationOutput

- Protocol

- Alias

- SampleSet & DataClass

- Run

- genId

- Inputs

- Outputs

- SampleCount

- StoredAmount

- SampleTypeUnits

- Units

- Freeze/Thaw Count

- StorageStatus

- StorageLocation

- StorageRow

- StorageCol

- CheckedOutBy

- CheckedOut (Date)

- IsAliquot

- AliquotCount (surfaced as "Aliquots Created Count" in the application)

- AliquotTotalVolume
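To spot reserved names before defining fields, you could check your planned field names against the lists above. The sketch below transcribes those lists; it assumes the comparison should ignore letter case, and it records "CheckedOut (Date)" as `CheckedOut`, both of which are assumptions. The function name is hypothetical.

```python
# Reserved for every data structure (transcribed from the list above).
COMMON_RESERVED = {
    "Created", "CreatedBy", "Modified", "ModifiedBy", "Name",
    "RowId", "LSID", "Folder", "Properties",
}

# Additionally reserved for Sample and Source Types.
SAMPLE_SOURCE_RESERVED = {
    "Description", "SampleId", "SourceId", "SampleState", "MaterialExpDate",
    "Flag", "SourceProtocolApplication", "SourceApplicationInput",
    "RunApplication", "RunApplicationOutput", "Protocol", "Alias",
    "SampleSet", "DataClass", "Run", "genId", "Inputs", "Outputs",
    "SampleCount", "StoredAmount", "SampleTypeUnits", "Units",
    "Freeze/Thaw Count", "StorageStatus", "StorageLocation", "StorageRow",
    "StorageCol", "CheckedOutBy", "CheckedOut", "IsAliquot",
    "AliquotCount", "AliquotTotalVolume",
}

def find_reserved(field_names, include_sample_fields=True):
    """Return the given names that collide with a reserved name (case ignored)."""
    reserved = set(COMMON_RESERVED)
    if include_sample_fields:
        reserved |= SAMPLE_SOURCE_RESERVED
    lookup = {name.lower() for name in reserved}
    return [name for name in field_names if name.strip().lower() in lookup]

planned = ["Name", "Volume", "rowid", "Units"]
print(find_reserved(planned))                               # ['Name', 'rowid', 'Units']
print(find_reserved(planned, include_sample_fields=False))  # ['Name', 'rowid']
```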

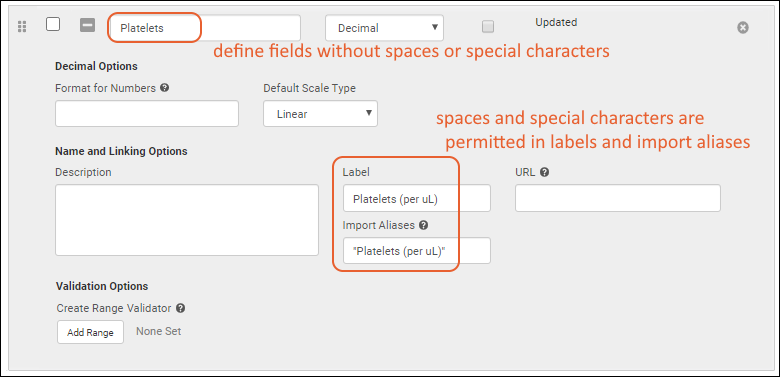

Inferral of Reserved Fields

If you infer a data structure from a file, and it contains any reserved fields, they will not be shown in the inferral but will be created for you. You will see a banner informing you that this has occurred:

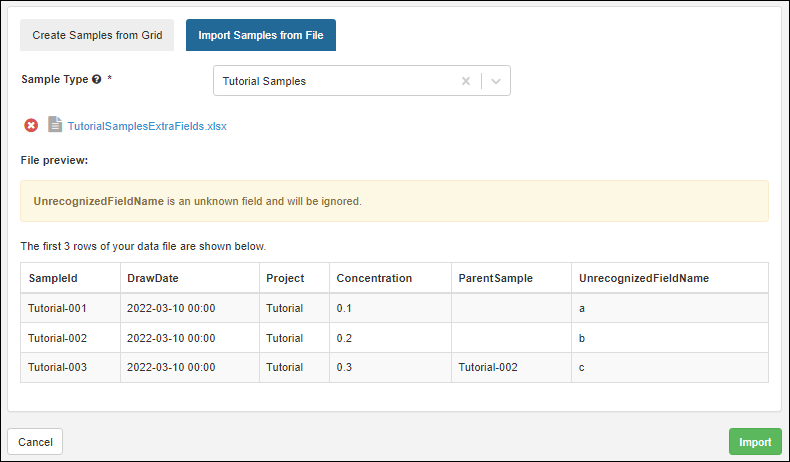

Import to Unrecognized Fields

If you import data that contains fields unrecognized by the system for that data structure (sample type, source type, or assay design), you will see a banner warning you that the field will be ignored:

If you expected the field to be recognized, you may need to check spelling or data type to make sure the data structure and import file match.
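A spelling check of this kind can be sketched with Python's standard `difflib` module, which suggests the closest known field for each unrecognized header. The field names and the 0.8 similarity cutoff are illustrative assumptions, and the function is a hypothetical helper rather than anything the application provides.

```python
import difflib

def unrecognized_fields(import_headers, known_fields, cutoff=0.8):
    """Map each header not in known_fields to its closest known field, or None."""
    result = {}
    for header in import_headers:
        if header in known_fields:
            continue
        matches = difflib.get_close_matches(header, known_fields, n=1, cutoff=cutoff)
        result[header] = matches[0] if matches else None
    return result

# Hypothetical data structure fields and import file headers.
known = ["Platelets", "CollectionDate", "Volume"]
headers = ["Platelets", "ColectionDate", "Notes"]

suggestions = unrecognized_fields(headers, known)
print(suggestions)
```

Here `ColectionDate` is flagged with `CollectionDate` as the likely intended field, while `Notes` has no close match and would need to be added to the data structure or dropped from the file.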
