Natural Language Processing (NLP) techniques are useful for bringing free text source information into tabular form for analysis. The LabKey NLP workflow supports abstraction and annotation from files produced by an NLP engine or other process. This topic outlines how to upload documents directly to a workspace.

Integrated NLP Engine (Optional)

Another upload option is to configure and use a Natural Language Processing (NLP) pipeline with an integrated NLP engine. Different NLP engines and preprocessors have different requirements and produce output in different formats. This topic can help you correctly configure one to suit your application.

Set Up a Workspace

The default NLP folder contains web parts for the Data Pipeline, NLP Job Runs, and NLP Reports. To return to this main page at any time, click the Folder Name link near the top of the page.

Upload Documents Directly

Documents for abstraction, annotation, and curation can be directly uploaded. Typically the following formats are provided:

- A TXT report file and a JSON results file, each named for the document: one with a .txt extension and one with a .nlp.json extension.

- This pair of files is uploaded to LabKey as a directory or zip file.

- A single directory or zip file can contain multiple pairs of files representing multiple documents, each with a unique report name and corresponding json file.
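The packaging described above can be sketched in Python. This is a minimal illustration, not a LabKey tool: the function names `find_document_pairs` and `build_upload_zip` are hypothetical, and the sketch assumes all report files sit in one flat directory. It only bundles reports that have both a .txt file and a matching .nlp.json file.

```python
import zipfile
from pathlib import Path


def find_document_pairs(source_dir):
    """Return (txt, json) path pairs for every report that has both files."""
    source = Path(source_dir)
    pairs = []
    for txt_path in sorted(source.glob("*.txt")):
        # The results file shares the report name: report.txt -> report.nlp.json
        json_path = txt_path.with_name(txt_path.stem + ".nlp.json")
        if json_path.exists():
            pairs.append((txt_path, json_path))
    return pairs


def build_upload_zip(source_dir, zip_path):
    """Write all complete pairs into one zip file and return the names added."""
    added = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for txt_path, json_path in find_document_pairs(source_dir):
            for path in (txt_path, json_path):
                # Store files at the top level of the archive.
                archive.write(path, arcname=path.name)
                added.append(path.name)
    return added
```

Reports without a matching .nlp.json file are skipped rather than uploaded alone, which makes incomplete pairs easy to spot before upload.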

For more about the JSON format used, see Metadata JSON Files.

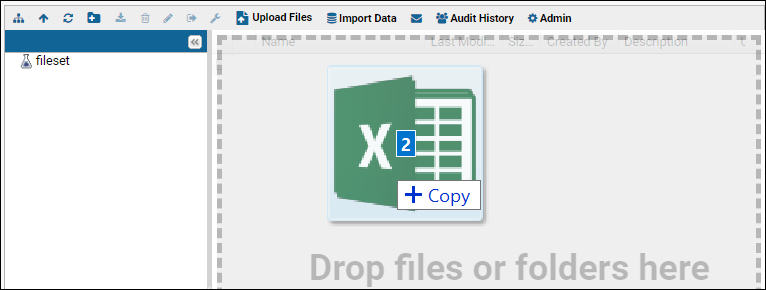

- Upload the directory or zip file containing the documents, including the .nlp.json files.

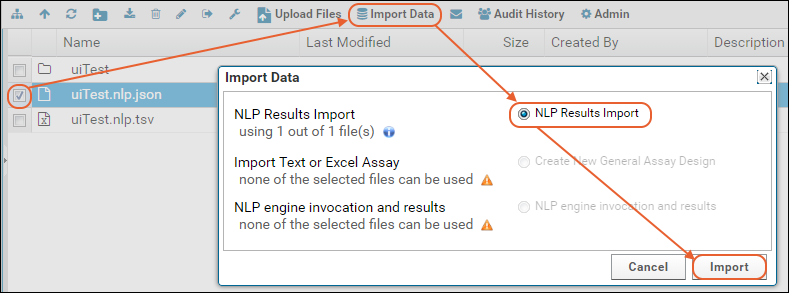

- Select the JSON file(s) and click Import Data.

- Choose the option NLP Results Import.

Review the uploaded results and proceed to task assignment.

Integrated NLP Engine

Configure a Natural Language Processing (NLP) Pipeline

An alternate method of integration with an NLP engine can encompass additional functionality during the upload process, provided in a pipeline configuration.

Set Up the Data Pipeline

- Return to the main page of the folder.

- In the Data Pipeline web part, click Setup.

- Select Set a pipeline override.

- Enter the primary directory where the files you want to process are located.

- Set searchability and permissions appropriately.

- Click Save.

- Click NLP Dashboard.

Configure Options

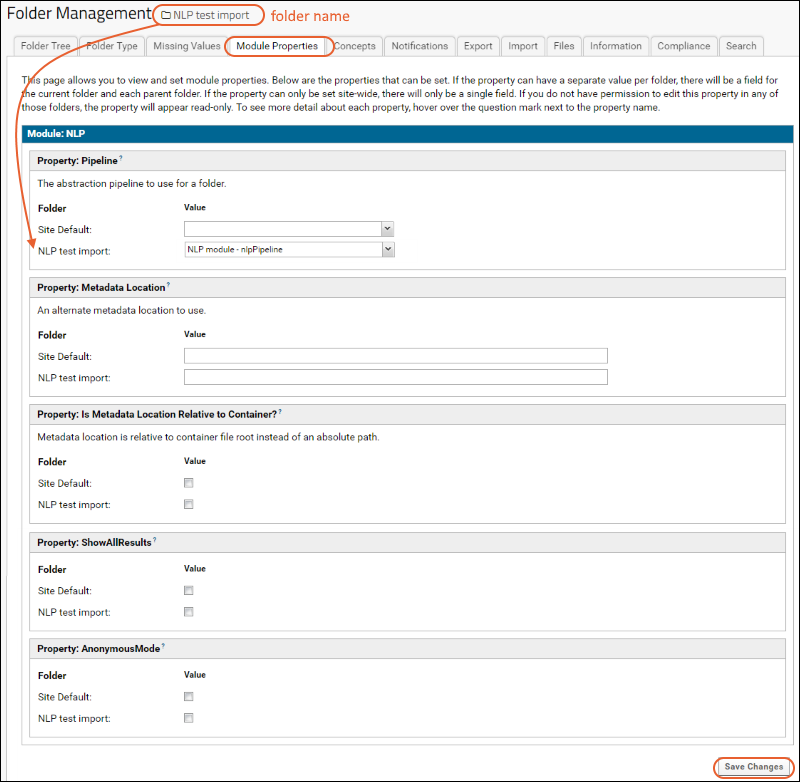

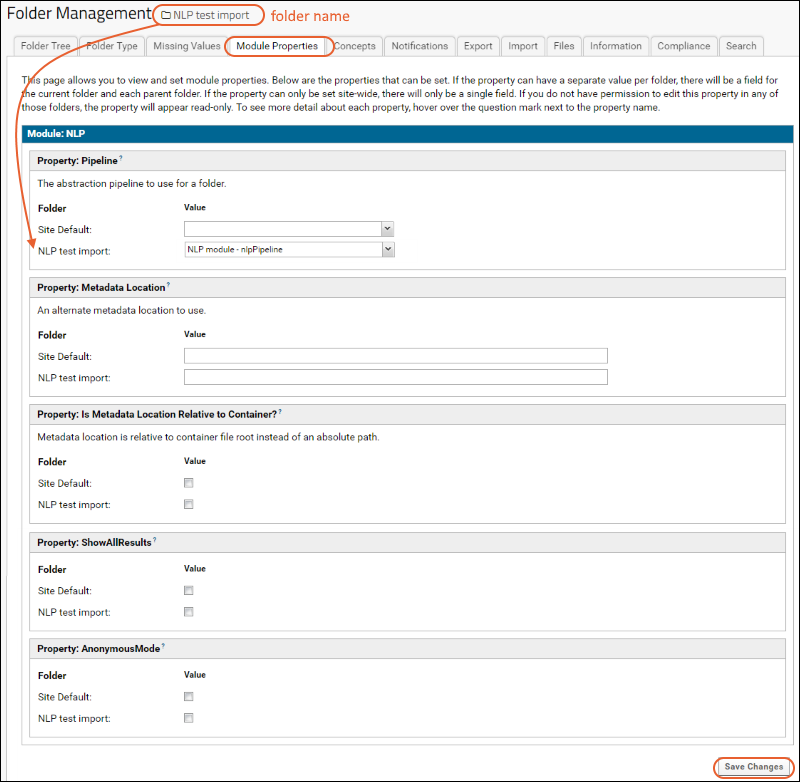

Set Module Properties

Multiple abstraction pipelines may be available on a given server. Using module properties, the administrator can select a specific abstraction pipeline and a specific set of metadata to use in each container.

These module properties can all vary per container, so for each, you will see a list of folders, starting with "Site Default" and ending with your current project or folder. All parent containers will be listed, and if values are configured, they will be displayed. Any container in which you do not have permission to edit these properties will be grayed out.

- Navigate to the container (project or folder) you are setting up.

- Select (Admin) > Folder > Management and click the Module Properties tab.

- Under Property: Pipeline, next to your folder name, select the appropriate value for the abstraction pipeline to use in that container.

- Under Property: Metadata Location enter an alternate metadata location to use for the folder if needed.

- Check the box marked "Is Metadata Location Relative to Container?" if you've provided a relative path above instead of an absolute one.

Alternate Metadata Location

The metadata is specified in a .json file, named in this example "metadata.json" though other names can be used.

- Upload the "metadata.json" file to the Files web part.

- Select (Admin) > Folder > Management and click the Module Properties tab.

- Under Property: Metadata Location, enter the file name in one of these ways:

- "metadata.json": The file is located in the root of the Files web part in the current container.

- "subfolder-path/metadata.json": The file is in a subfolder of the Files web part in the current container (relative path).

- "full path to metadata.json": Use the full path if the file has not been uploaded to the current project.

- If the metadata file is in the files web part of the current container (or a subfolder within it), check the box for the folder name under Property: Is Metadata Location Relative to Container.
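The relationship between the Metadata Location value and the "relative to container" checkbox can be illustrated with a short Python sketch. This is only a model of the rule described above, not LabKey's implementation: `resolve_metadata_location` is a hypothetical function, and the file root shown in the usage example is an invented path.

```python
from pathlib import PurePosixPath


def resolve_metadata_location(location, is_relative, container_files_root):
    """Return the path a Metadata Location value would point to.

    location: the value entered under Property: Metadata Location.
    is_relative: whether the "relative to container" box is checked.
    container_files_root: assumed file root of the current container.
    """
    path = PurePosixPath(location)
    if is_relative:
        if path.is_absolute():
            raise ValueError("Box is checked, but the location is a full path")
        # Relative values are interpreted under the container's file root.
        return PurePosixPath(container_files_root) / path
    # Unchecked: the value is taken as a full path as entered.
    return path
```

For example, with a hypothetical file root of `/files/MyProject`, the value "subfolder-path/metadata.json" with the box checked would resolve to `/files/MyProject/subfolder-path/metadata.json`.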

Define Pipeline Protocol(s)

When you import a TSV file, you will select a Protocol which may include one or more overrides of default parameters to the NLP engine.

If there are multiple NLP engines available, you can include the NLP version to use as a parameter. With version-specific protocols defined, you then simply select the desired protocol during file import. You may define a new protocol on the fly during any tsv file import, or you may find it simpler to predefine one or more. To quickly do so, you can import a small stub file, such as the one attached to this page.

- Download this file: stub.nlp.tsv and place it in the location of your choice.

- In the Data Pipeline web part, click Process and Import Data.

- Drag and drop the stub.nlp.tsv file into the upload window.

For each protocol you want to define:

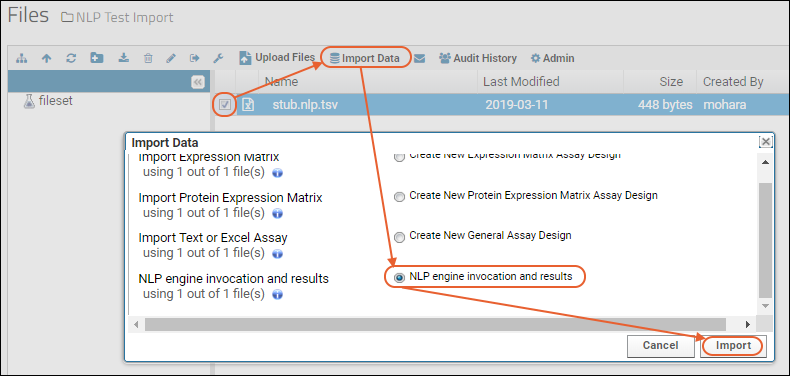

- In the Data Pipeline web part, click Process and Import Data.

- Select the stub.nlp.tsv file and click Import Data.

- Select "NLP engine invocation and results" and click Import.

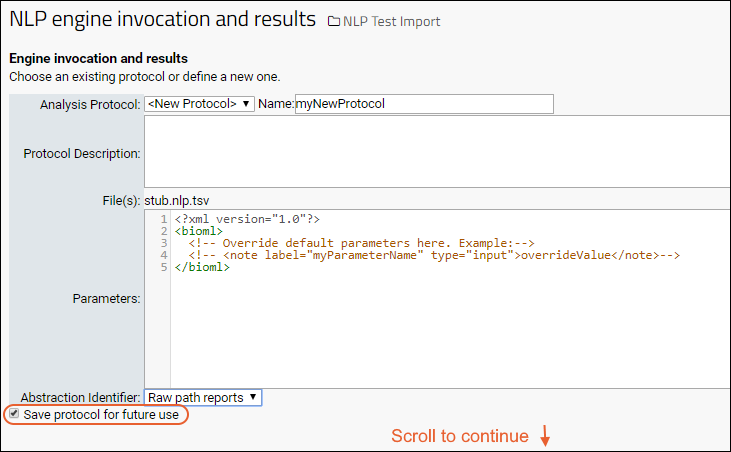

- From the Analysis Protocol dropdown, select "<New Protocol>". If there are no other protocols defined, this will be the only option.

- Enter a name and description for this protocol. A name is required if you plan to save the protocol for future use. Using the version number in the name can help you easily differentiate protocols later.

- Add a new line to the Parameters section for any parameters required, such as giving a location for an alternate metadata file or specifying the subdirectory that contains the intended NLP engine version. For example, if the subdirectories are named "engineVersion1" and "engineVersion2", you would uncomment the example line shown and use:

<note label="version" type="input">engineVersion1</note>

- Select an Abstraction Identifier from the dropdown if you want every document processed with this protocol to be assigned the same identifier. "[NONE]" is the default.

- Confirm "Save protocol for future use" is checked.

- Scroll to the next section before proceeding.
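The parameter line shown in the steps above is a small XML element. If you maintain several version-specific protocols, a sketch like the following can generate those lines consistently. The helper `make_note` is hypothetical and not part of LabKey; it assumes every parameter uses the same `label` and `type="input"` attributes as the example line.

```python
import xml.etree.ElementTree as ET


def make_note(label, value):
    """Build one parameter line in the form shown in the Parameters section."""
    note = ET.Element("note", {"label": label, "type": "input"})
    # ElementTree escapes characters such as & and < in the value.
    note.text = value
    return ET.tostring(note, encoding="unicode")


# One line per engine subdirectory named in the example above.
for version in ("engineVersion1", "engineVersion2"):
    print(make_note("version", version))
```

Building the element with an XML library rather than string concatenation keeps the line well-formed even if a value contains reserved characters.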

Define Document Processing Configuration(s)

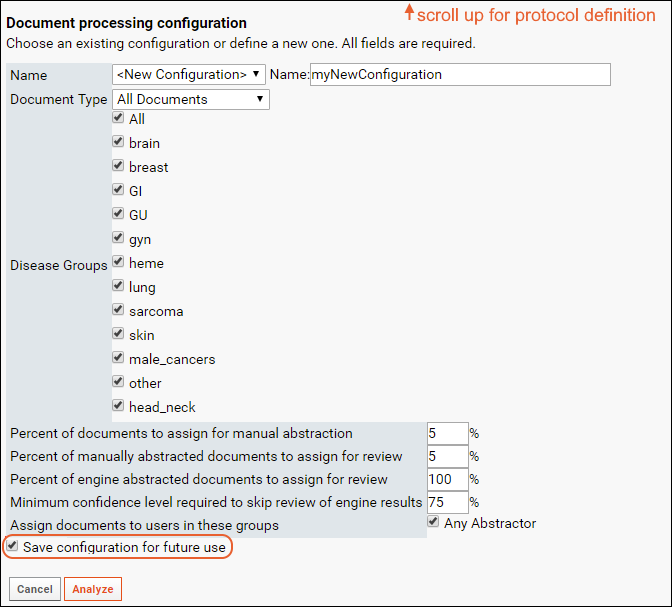

Document processing configurations control how assignment of abstraction and review tasks is done automatically. One or more configurations can be defined, enabling you to easily select among different settings or criteria using the name of the configuration. In the lower portion of the import page, you will find the Document processing configuration section:

- From the Name dropdown, select the name of the processing configuration you want to use.

- The definition will be shown in the UI so you can confirm this is correct.

- If you need to make changes, or want to define a new configuration, select "<New Configuration>".

- Enter a unique name for the new configuration.

- Select the type of document and disease groups to apply this configuration to. Note that if other types of report are uploaded for assignment at the same time, they will not be assigned.

- Enter the percentages for assignment to review and abstraction.

- Select the group(s) of users to which to make assignments. Eligible project groups are shown with checkboxes.

- Confirm "Save configuration for future use" is checked to make this configuration available for selection by name during future imports.

- Complete the import by clicking Analyze.

- In the Data Pipeline web part, click Process and Import Data.

- Upload the "stub.nlp.tsv" file again and repeat the import. This time you will see the new protocol and configuration you defined available for selection from their respective dropdowns.

- Repeat these two steps to define all the protocols and document processing configurations you need.

For more information, see Pipeline Protocols.

Run Data Through the NLP Pipeline

First upload your TSV files to the pipeline.

- In the Data Pipeline web part, click Process and Import Data.

- Drag and drop files or directories you want to process into the window to upload them.

Once the files are uploaded, you can iteratively run each through the NLP engine as follows:

- In the Data Pipeline web part, click Process and Import Data.

- Navigate uploaded directories if necessary to find the files of interest.

- Check the box for a tsv file of interest and click Import Data.

- Select "NLP engine invocation and results" and click Import.

- Choose an existing Analysis Protocol or define a new one.

- Choose an existing Document Processing Configuration or define a new one.

- Click Analyze.

- While the engine is running, the pipeline web part will show a job in progress. When it completes, the pipeline job will disappear from the web part.

- Refresh your browser window to show the new results in the NLP Job Runs web part.

View and Download Results

Once the NLP pipeline import is successful, the input and intermediate output files are both deleted from the filesystem.

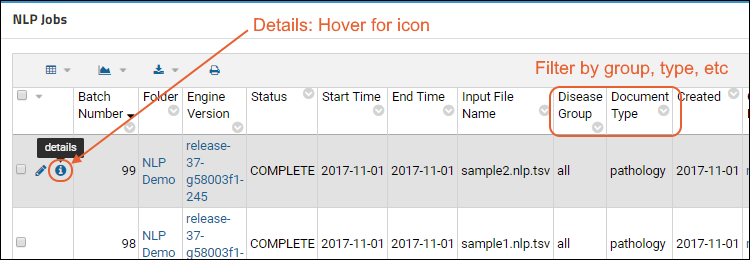

The NLP Job Runs web part lists the completed run, along with information like the document type and any identifier assigned for easy filtering or reporting. Hover over any run to reveal a (Details) link in the leftmost column. Click it to see both the input file and how the run was interpreted into tabular data.

During import, new line characters (CRLF, LFCR, CR, and LF) are all normalized to LF to simplify highlighting text when abstracting information.
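The effect of this normalization can be sketched in Python. This is an illustration of the rule as described, not LabKey's code; `normalize_newlines` is a hypothetical name. The two-character sequences are listed first in the pattern so that each CRLF or LFCR becomes a single LF rather than two.

```python
import re

# Two-character sequences come first so they are consumed as one line break.
_NEWLINE_PATTERN = re.compile(r"\r\n|\n\r|\r|\n")


def normalize_newlines(text):
    """Replace CRLF, LFCR, CR, and LF line breaks with a single LF."""
    return _NEWLINE_PATTERN.sub("\n", text)
```

After normalization, character offsets into the text count every line break as one character, which is what makes highlighted spans line up regardless of where the file was created.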

Note: The results may be reviewed for accuracy. In particular, the disease group determination is used to guide other values abstracted. If a reviewer notices an incorrect designation, they can manually correct it and send the document for reprocessing through the NLP engine with the correct designation.

Download Results

To download the results, select (Export/Sign Data) above the grid and choose the desired format.

Rerun

To rerun the same file with a different version of the engine, simply repeat the original import process, but this time choose a different protocol (or define a new one) to point to a different engine version.

Error Reporting

During processing of files through the NLP pipeline, some errors require human reconciliation before processing can proceed. The pipeline log reports any errors that were detected during processing, including:

- Mismatches between field metadata and the field list. To ignore these mismatches during upload, set "validateResultFields" to false and rerun.

- Errors or excessive delays while the transform phase is checking to see if work is available. These errors can indicate problems in the job queue that should be addressed.

Add a Data Transform Jobs web part to see the latest error in the Transform Run Log column.

For more information about data transform error handling and logging, see ETL: Logs and Error Handling.

Related Topics